Published 2025-10-19.

Last modified 2025-10-21.

Time to read: 2 minutes.

llm collection.

- Claude Code Is Magnificent, But Claude Desktop Is a Hot Mess

- Gemini vs. Sonnet 3.5 and 4.6 for Meticulous Work

- Google Gemini Code Assist

- Google Antigravity

- AI Planning vs. Waterfall Project Management

- Best Local LLMs for Coding

- Running an LLM on the Windows Ollama app

- Early Draft: Multi-LLM Agent Pipelines

- MiniMax-M2 and Mini-Agent Review

- MiniMax Web Search with ddgr

- LLM Societies

- CodeGPT

CodeGPT features an AI assistant creator (GPTs), an Agent Marketplace, a Copilot for software engineers, and an API for advanced solutions. The CodeGPT Visual Studio Code extension works with many models from many vendors, including Claude Sonnet 4.5.

```shell
$ code --install-extension DanielSanMedium.dscodegpt
```
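To confirm that the install worked, the `code` CLI can list the installed extensions. The following is a minimal sketch, assuming `code` is on your PATH. `has_extension` is a hypothetical helper name, not something CodeGPT provides. It matches the extension ID case-insensitively against the output of `code --list-extensions`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds (exit 0) if the given extension ID is installed.
# Assumes the VS Code `code` CLI is on PATH.
has_extension() {
  local id="$1"
  # `code --list-extensions` prints one publisher.name ID per line.
  # grep flags: -q quiet, -i ignore case, -x whole-line match, -F literal string (the dot is not a regex).
  code --list-extensions | grep -qixF -- "$id"
}

# Usage:
# has_extension DanielSanMedium.dscodegpt && echo "CodeGPT is installed"
```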

I had to give my GitHub email to Judin Labs to sign up for and use the extension.

- To display the CodeGPT pane, I pressed CTRL+SHIFT+P, then typed `codegpt`. One of the choices that appeared was View: Show CodeGPT Chat, which I selected.

- CTRL+ALT+B revealed the alternate sidebar.

- Then I carefully dragged the CodeGPT heading from the primary sidebar to the alternate (right) sidebar, where I prefer to chat.

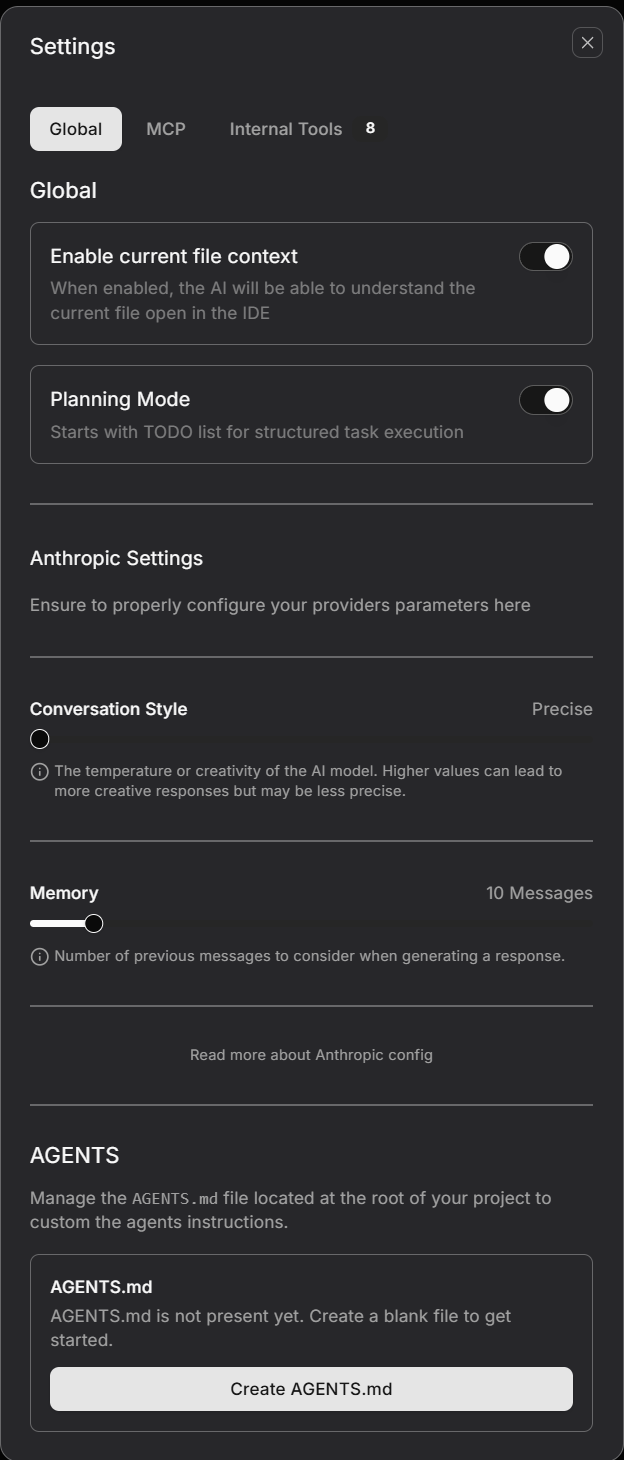

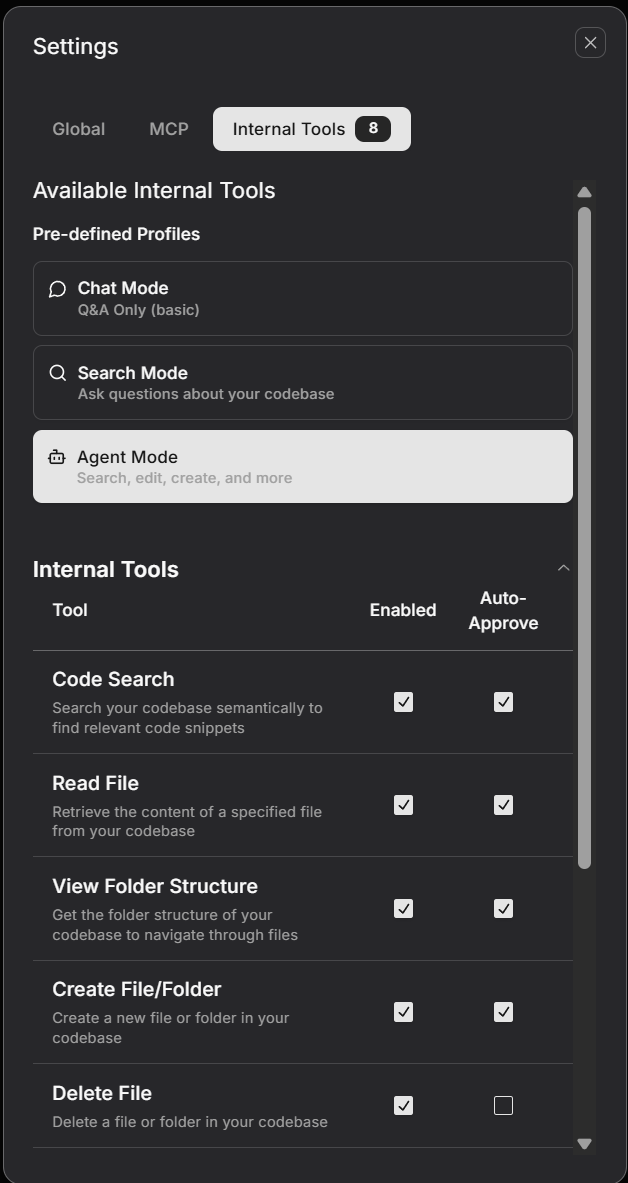

- Clicking on the settings icon displayed the following:

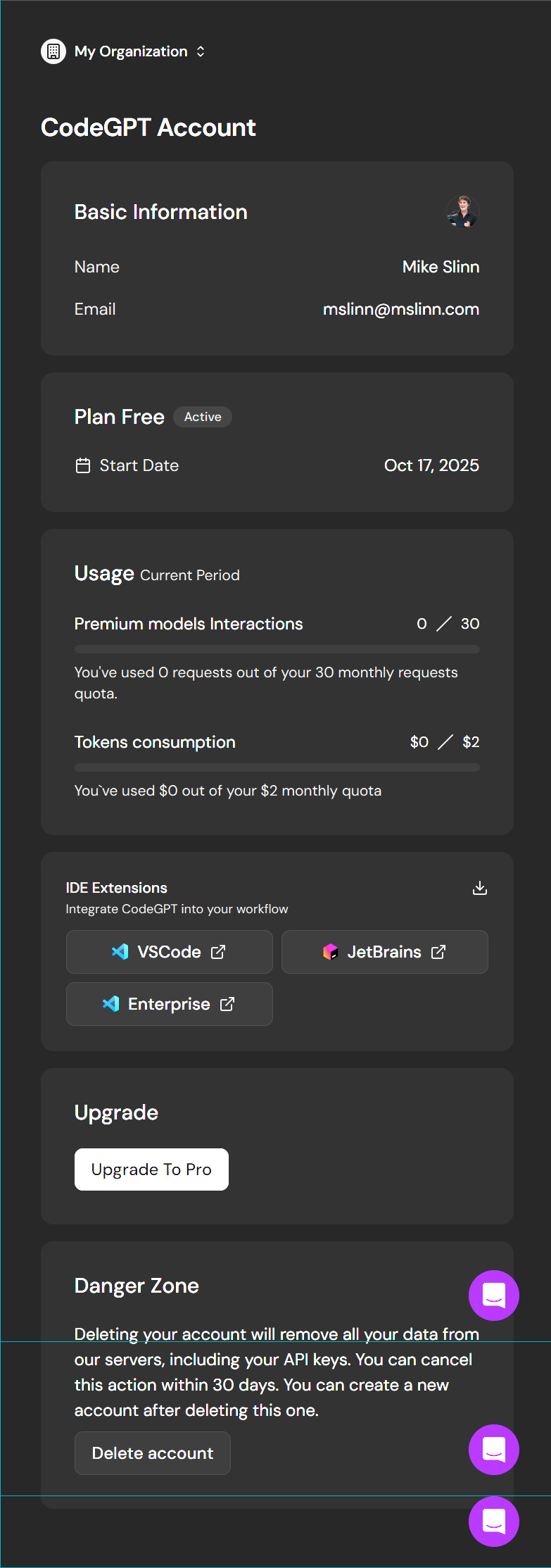

Clicking on the person icon at the top right of the CodeGPT panel displayed this in my web browser:

Ah, yet another money grab. A few days after installation, at 7:30 AM (ET) on a Sunday, I saw an alert in Visual Studio Code saying that the CodeGPT servers were overloaded and that autocomplete might not work. The message urged me to upgrade to Pro; apparently only the free service was affected. That felt bogus, so I deleted the extension instead.
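Removing the extension can also be done from the command line. This is a minimal sketch, assuming the `code` CLI is on your PATH. `remove_extension` is a hypothetical wrapper name. `--list-extensions` and `--uninstall-extension` are standard `code` CLI options.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: uninstall a VS Code extension only if it is present.
remove_extension() {
  local id="$1"
  # Case-insensitive, whole-line, literal match against the installed extension IDs.
  if code --list-extensions | grep -qixF -- "$id"; then
    code --uninstall-extension "$id"
  else
    echo "$id is not installed" >&2
    return 1
  fi
}

# Usage:
# remove_extension DanielSanMedium.dscodegpt
```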
