Introduction

The dictionary definitions for the word ‘control’ have not been updated since before the advent of computing machinery, approximately 80 years ago. While the previous definitions still apply and are valid, they do not provide a nuanced definition suitable for discussing the control of current technology.

These changes took several human generations to evolve; they did not all happen at once. While writing this document, I came to realize that legal precedent from 150 years ago is becoming increasingly relevant. The advent of new technology has brought us full circle. Read on to learn more.

I wrote this article over a period of 4 weeks and have revised it extensively since then as my thinking developed. As with all the articles on this website, I will continue to update this article as new information becomes available. Furthermore, any such definition would be well served by vigorous discussion.

Control, Defined

Let's start the discussion with a few dictionary definitions for ‘control’, as a noun and a verb. This is not meant to be exhaustive.

- to direct the behavior of (a person or animal) : to cause (a person or animal) to do what you want

- to have power over (something)

- to direct the actions or function of (something) / to cause (something) to act or function in a certain way

- to exercise restraining or directing influence over : REGULATE

- power or authority to guide or manage

- a device or mechanism used to regulate or guide the operation of a machine, apparatus, or system

- the power to influence or direct people's behavior or the course of events

- determine the behavior or supervise the running of

- n. the power to direct, manage, oversee and/or restrict the affairs, business, or assets of a person or entity.

- v. to exercise the power of control.

LawInsider.com offers more than a dozen definitions, derived from analyzing a corpus of documents; however, the corpus appears to have been drawn only from corporate law, so the definitions are narrow in scope. The one that seems most applicable to software is:

– From LawInsider.com

Control v. Ownership

In a nutshell, the traditional definition of control is influence or authority over, while ownership is defined as the state of having legal title, which means legal control of the status of something. Ownership might require a legal responsibility to provide some measure of control. Responsibility for outcomes might derive from exercising or failing to exercise proper control. Further examination of specific examples might be instructive and might require a restatement of this short definition.

Control is possible without ownership; multiple scenarios are possible, some of which are mentioned in this article.

Pwned

No discussion these days of the word ‘control’ in a software context would be complete without mentioning the word ‘pwned’. Pwned is sometimes described as a portmanteau of the words ‘power’ and ‘owned’, or of ‘perfectly owned’, although it is more commonly traced to a mistyping of ‘owned’. For video gamers, and increasingly in the trade press, the word means an opponent has been defeated, or ‘owned’. Listen to this short video to hear how the word is pronounced.

Note the conflation of the concepts of ownership, control, and dominance.

haveibeenpwned.com

– From F-Secure, a company that provides enterprise-level security products.

Assessing Control

Feedback

A person generally considers themselves to control a thing, an animal, or a person when they receive feedback appropriate for the action that they took, including their inaction. For example, flicking a light switch should cause lights that are connected to shine. If a light does not shine, the person might consider the switch or the light bulb to be broken, or perhaps the circuit might be suspect.

If for some reason feedback is suppressed or imperceptible, then the person would also likely believe that they were not in control, unless they believed that plausible explanations existed for the lack of feedback. For example, a person with complete color blindness would likely be aware of their inability to perceive color. Similarly, a person with a hearing defect that prevented them from hearing sounds that might indicate a device is responding to their actions would not expect to hear those sounds.

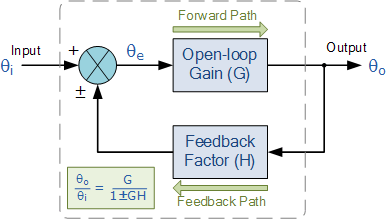

Control systems theory is usually taught to electrical engineering students starting in their third year, and I will not cover that material here. For readers who would like to know more, the accompanying technical diagram suggests some basic concepts, including feedback, for closed-loop control systems. The course linked to has more detail.
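The role of feedback can be illustrated with a few lines of Python. This is only a minimal sketch under stated assumptions: the room-temperature scenario, the function names, and the gain and disturbance values are all invented for illustration and do not come from any control systems course. One loop observes its result and corrects it each step; the other applies a pre-planned input and never looks.

```python
def simulate_closed_loop(setpoint, initial, disturbance, gain=0.5, steps=20):
    """Steer a value toward a setpoint, correcting from feedback each step."""
    value = initial
    history = [value]
    for _ in range(steps):
        error = setpoint - value              # feedback: desired minus observed
        value += gain * error + disturbance   # correct, while the disturbance acts
        history.append(value)
    return history


def simulate_open_loop(initial, planned_push, disturbance, steps=20):
    """Apply a pre-planned input each step without ever observing the result."""
    value = initial
    history = [value]
    for _ in range(steps):
        value += planned_push + disturbance   # the disturbance is never noticed
        history.append(value)
    return history


# Hypothetical example: a room starts at 15 degrees, the target is 20,
# and a draught (the disturbance) removes 0.1 degrees per step.
closed = simulate_closed_loop(setpoint=20.0, initial=15.0, disturbance=-0.1)
open_ = simulate_open_loop(initial=15.0, planned_push=0.25, disturbance=-0.1)

print(f"closed loop ends at {closed[-1]:.2f}")
print(f"open loop ends at {open_[-1]:.2f}")
```

With these assumed numbers, the closed loop settles near 19.8 while the open loop ends near 18. The simple proportional correction used here still leaves a small offset, which echoes the point made in the next section: feedback improves control without making it absolute.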

Learning New Skills

Learning a skill can enhance one’s ability to control something; learning to ride a bicycle or to fly a kite are examples. With kites, complete control is impossible because the wind cannot be controlled, but learning how to respond to wind changes is an essential part of learning to fly one.

Thus, absolute control over some types of things might not be possible, even if some measure of control can be attained or enhanced through practice.

Inert Objects

Scissors are useful for cutting paper. As inert objects, scissors do nothing on their own. The person holding the scissors decides what to cut, when to cut it, and how and where to perform the action. Sharp scissors can be dangerous when handled improperly, when handled by children, or if a properly trained adult attempts to use them when inebriated.

It is easy to determine who controls inert objects like scissors: whoever is holding the scissors has control of them, provided the inert object is not attached to anything else and is not subject to physical forces, such as magnetism, that might affect the object to a greater degree than the person can reliably overcome.

Someone holding the scissors might be conforming to directions provided by another person, or perhaps the scissors were left out in easy reach of children or the public. Any discussion of liability is out of scope for my expertise.

Losing Control

Someone at the top of a tall ski hill covered in several inches of slightly wet snow could make a large snowball. They completely control the large snowball at this point. However, if they push hard and start rolling it downhill, the snowball could grow rapidly as it accelerates, causing injury, death, and destruction. The snowball would be out of control for most of its downward journey.

This example shows that someone could have control over something and then lose control.

Devices With Computational Capability

One major consideration for devices that contain computational elements stems from the engineering practice of layering successively higher functionality, from hardware to firmware to software; even the software itself is built in layers. Another term, similar to ‘layers’, that is equally applicable for defining control is ‘hierarchy’.

Devices that contain general-purpose computational elements have a hierarchy of control categories.

Context

The type of device, and the question of whether it contains a general-purpose computational element, provide a contextually dependent meaning for the word ‘control’. This is true whether the word is used as a noun or verb.

Control Categories

It is useful to distinguish between the following categories of control regarding software. This is not an exhaustive list. The preceding dictionary definitions encompass the categories listed below; the following information enhances the definitions shown earlier without contradicting them.

- Manipulating user interface controls (users manipulating the user interface or providing data, as designed, such that the software provides a benefit to the user).

Perception varies between devices, depending on the larger context.

This sub-category distinguishes between normal usage of software and the ability to modify the operational parameters of the software.

- Car drivers and airplane pilots are tasked with controlling their vehicles, even though they just manipulate the user interface controls. Many or most modern vehicles use software to interpret user actions; physical connections between user interface elements and control surfaces are increasingly rare, especially in large vehicles and electric vehicles. Pilots and drivers are required to exercise judgment and maintain situational awareness while operating the vehicle, such that it remains under their control.

- Data entry clerks also manipulate the user interface controls in order to type in data, but few would argue that they are in control. These people operate in a very narrow context, where situational awareness is not a factor, and no judgment beyond how to interpret the written data is required. There is nothing that a data entry clerk could normally do during their work that might affect the state of the entire system that they interact with.

- More examples would likely be instructive.

- Administrative control: Higher privileged users changing the status of regular users and the data contained in the system.

- Operational control: Installing and maintaining the software in a physical or virtual system, including physically (re)locating the system.

- Malicious control: Bad actors altering the access privileges of authorized users, granting access to unauthorized users, altering the data in the system, or suppressing or changing the inputs to the system. Those responsible for the proper operation of the system would perceive malicious control as the system being out of control.

- Social Control: Affecting the perception of a system, such that the behavior of the users of that system is influenced while interacting with it, or the timing of their interaction is influenced, or their desire to interact with the system is suppressed. Perception is reality, in some sense; in fact, controlling people’s perception of a device is as significant as controlling access to the physical device. For example, if a person believes that an angry software god will strike them dead if they touch a sacred keyboard, the priest advocating such nonsense effectively controls the device.
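The categories above can be sketched as a small data model. This is a hypothetical illustration only: the `ControlCategory` and `Actor` names, and the three example actors, are assumptions made for this sketch rather than part of any real system. The point it shows is that asking who controls a system can yield a different answer for each category.

```python
from dataclasses import dataclass
from enum import Enum


class ControlCategory(Enum):
    """The control categories listed above (names are illustrative)."""
    USER_INTERFACE = "manipulating user interface controls"
    ADMINISTRATIVE = "administrative control"
    OPERATIONAL = "operational control"
    MALICIOUS = "malicious control"
    SOCIAL = "social control"


@dataclass(frozen=True)
class Actor:
    name: str
    categories: frozenset  # the ControlCategory members this actor exercises


def controllers_by_category(actors):
    """Group actor names under each category of control they exercise."""
    result = {category: [] for category in ControlCategory}
    for actor in actors:
        for category in actor.categories:
            result[category].append(actor.name)
    return result


# Hypothetical actors around a single system.
actors = [
    Actor("data entry clerk", frozenset({ControlCategory.USER_INTERFACE})),
    Actor("sysadmin", frozenset({ControlCategory.ADMINISTRATIVE,
                                 ControlCategory.OPERATIONAL})),
    Actor("intruder", frozenset({ControlCategory.MALICIOUS})),
]

by_category = controllers_by_category(actors)
for category, names in by_category.items():
    print(f"{category.value}: {names or 'nobody'}")
```

A flat mapping like this ignores the judgment and situational awareness discussed above, so it should be read as a starting vocabulary, not a complete model.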

Denying Control

If someone can prevent another from controlling something, is this not itself a form of control? This could be accomplished by the following:

- Breaking or damaging the device, or otherwise rendering it inoperative

- Blocking access to the device

- Masking the device’s responses

- Suppressing the effects of the user’s actions, or redirecting those actions elsewhere

- Turning off the device

- Associating attempts to control the device with negative consequences, for example, electrically shocking anyone who touches the control surface

- … and oh, so many more ways!

Artificial Intelligence and Machine Learning

Software capable of learning has become commonplace in business software. Machine Learning (ML) learns and predicts based on passive observations by applying sophisticated statistical methods, whereas Artificial Intelligence (AI) implies an agent interacting with the environment to learn and take actions that maximize its chance of successfully achieving its goals.

There is controversy in the software community over whether ML is a subset of AI or a separate field. For the purposes of this introductory discussion, this is a distinction without a difference; however, one should be aware of the inconsistent terminology when reading the literature. The distinction might take on more significance in a more advanced discussion, depending on the topic. By late 2021, most AI installations were, in fact, ML installations.

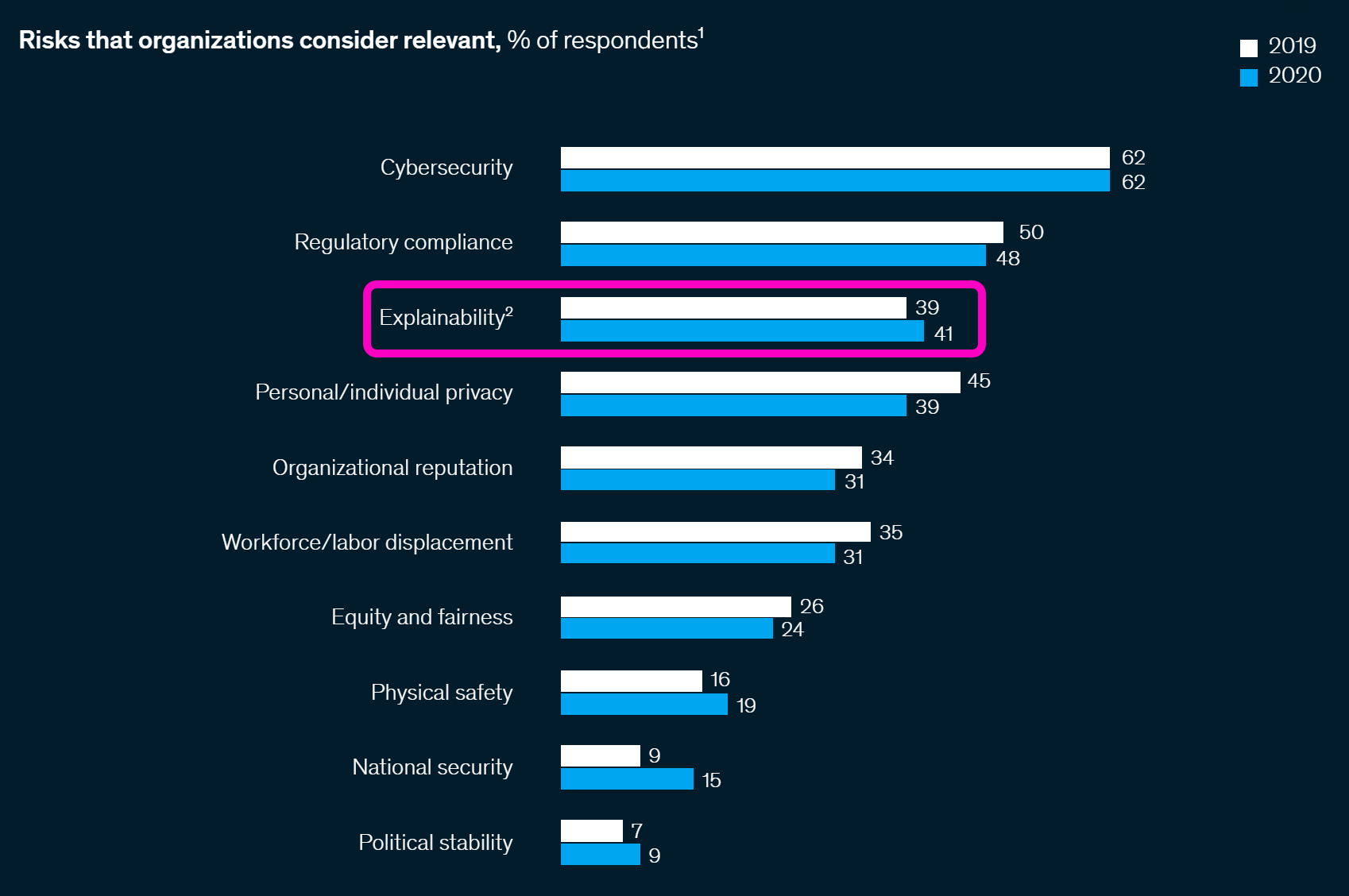

– From McKinsey & Company: The State of AI in 2020

Magical Results

ML systems must be trained before they can be used. The training data fed into an ML system defines its future responses.

Although many research papers from 2021 discuss ways to show how results by ML systems could be explained, the reality is that most of these systems currently have no way to explain their results; they operate as a black box, and they are vulnerable to learning unstated bias introduced during initial training.
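A toy example can make the link between training data and later responses concrete. The sketch below is deliberately simplistic and entirely hypothetical: the `train` and `predict` functions, the region names, and the approve/deny labels are invented for illustration, and real ML systems are far more elaborate. The "model" simply remembers the most frequent label seen for each input.

```python
from collections import Counter, defaultdict


def train(examples):
    """Learn the most frequent label observed for each feature value."""
    counts = defaultdict(Counter)
    for feature, label in examples:
        counts[feature][label] += 1
    return {feature: c.most_common(1)[0][0] for feature, c in counts.items()}


def predict(model, feature):
    """Return the learned label, or 'unknown' for inputs never seen in training."""
    return model.get(feature, "unknown")


# Hypothetical, deliberately skewed training data: nobody told the model
# to favor region_a, but the historical examples do.
skewed_examples = (
    [("region_a", "approve")] * 9 + [("region_a", "deny")]
    + [("region_b", "deny")] * 9 + [("region_b", "approve")]
)

model = train(skewed_examples)
for region in ("region_a", "region_b", "region_c"):
    print(region, "->", predict(model, region))
```

Nothing in the code mentions a preference between regions; the preference comes entirely from the examples, and the model offers no account of why it answers as it does.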

Magicians seem to perform magic because they do not explain their amazing results. For the ML systems that cannot explain the rationale behind an output or state change, could anyone be said to have complete control over them?

Again, more could be said on this topic, but I will save that discussion for another time.

Unacknowledged Bias

– From Artificial Intelligence: Explainability, ethical issues and bias, published Aug 3, 2021 in the Annals of Robotics and Automation by Dr. Alaa Marshan, Department of Computer Science, College of Engineering, Design and Physical Sciences, Brunel University London, a public university in Uxbridge, England.

Is AI Impossible To Control?

Completely autonomous AI is upon us, and many well-informed technologists are gravely concerned that such a thing cannot be controlled.

It is instructive to consider a parent’s often futile desire to control their teenage offspring. When teenagers are alone with their friends, the best parents can hope for is that their children were provided good examples while growing up, and were shown how to deal with peer pressure effectively.

ML systems are much the same; the results they produce are due to their training. ML systems cannot be micromanaged to produce correct results.

–Alan Turing (1950), widely considered to be the father of theoretical computer science and artificial intelligence, published in Computing Machinery and Intelligence, Mind.

On March 10, 2022, the US eliminated the human controls requirement for fully automated vehicles. Who or what controls the vehicle? Can a thing be held accountable for its actions?

Examples

Close analogs exist in the fields of enterprise software, particularly e-commerce, enterprise resource planning (ERP), and software-as-a-service (SaaS). Examples include recommender systems, image recognition and generation, speech recognition and generation, traffic prediction, weather prediction, email filtering, security, fraud detection, dynamic pricing, and much more.

Following are two examples, drawn from the physical world, with the intention that most readers would find them relatable.

Vacuum Cleaners

The concept of controlling a classically constructed device, such as a traditional vacuum cleaner, differs from controlling a device with a general-purpose computational element, such as the iRobot Scooba® floor washing robot, first available in 2005.

The older vacuum cleaner would only have an on/off switch, perhaps a power selector, and perhaps the ability to adjust various optional attachments. The operator would be able to control where they place the vacuum head by lifting and placing the head in the location where they want to clean.

In contrast, consider a robotic cleaner, such as the 2005 iRobot Scooba® wet vacuum cleaner pictured above. Robotic cleaners find their cleaning path autonomously. This type of device usually has sensors that cause it to employ a specific cleaning strategy when it encounters something that triggers the corresponding algorithm.

Clearly, the finer points of the concept of ‘controlling’ a vacuum cleaner vary depending on the nature of the device.

Transportation

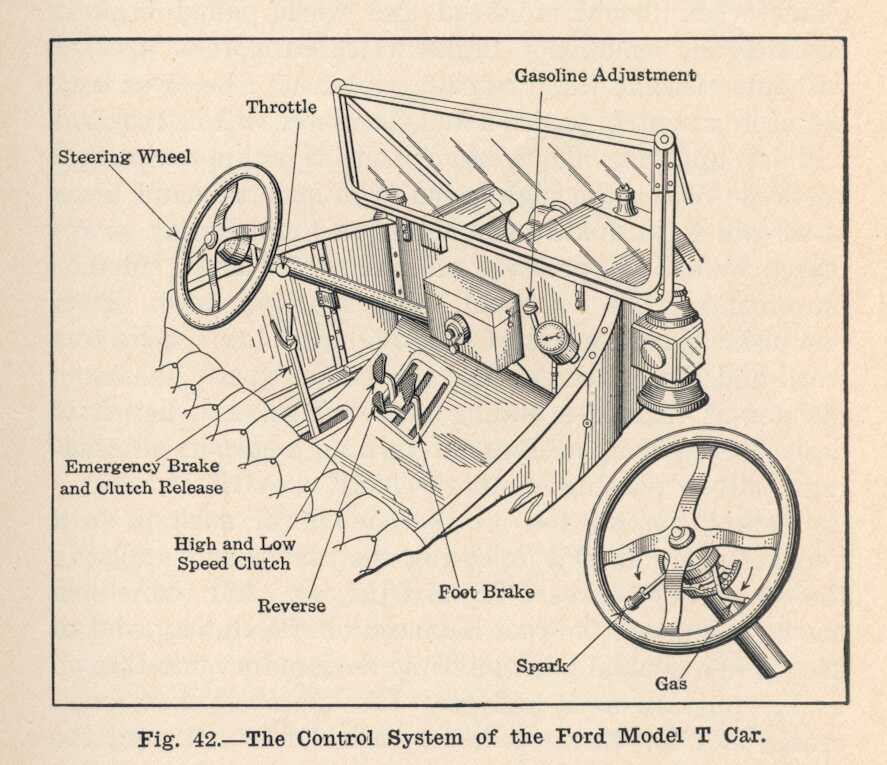

The Ford Model T was named the most influential car of the 20th century by the 1999 Car of the Century competition. No one alive today had yet been born when the Model T was first offered for sale in 1908, so here are a few reminders of what technology was like at that time.

The Model T had no battery. The only use of electricity by early versions of this car was for the 4 spark plugs, and they received power produced by a flywheel magneto system. Acetylene gas lamps were used for headlights, and kerosene lamps were used for side and tail lights.

Controlling a Ford Model T was somewhat different from modern cars: once the Model T was started, drivers could set the throttle with their right hand, press on the brake, clutch and reverse pedals, turn the steering wheel, and operate the hand brake. This diagram is from the Model T Ford Club of America:

Cadillac began offering cars with push-button starters in 1912, but few people could afford such a luxury car; instead, the Model T was started with a hand crank. Power steering would not be invented for several years, so the steering wheel was mechanically connected to the steering mechanism for the front wheels. There were no power windows; in fact, early Model Ts did not even have windows in the doors; this could be uncomfortable in bad weather.

Transistors were invented decades later, in 1947, and vacuum tubes were still exotic and expensive in 1918. Consequently, car radios did not appear for another decade, and they would use vacuum tubes until the mid-1960s. Cars would not be mass-produced with automatic transmissions until General Motors introduced the Hydramatic four-speed hydraulic automatic in 1939.

In contrast, the average vehicle in 2022 contains about 50 interconnected microprocessors, and most of those vehicles also have at least one camera. Vehicles from various manufacturers use multiple cameras to park themselves autonomously, for both parallel and regular perpendicular parking.

Some vehicles in 2023 also feature assisted reversing, which assumes steering control to mirror the path the vehicle most recently took going forward. This system makes backing out of a confined parking place easy. All the driver has to do is operate the accelerator and brakes and monitor the surrounding area, while the steering follows the exact path the car took to enter the space.
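The idea of retracing a recorded path can be sketched with a simple kinematic bicycle model. This is an illustration under stated assumptions, not any manufacturer's implementation: the wheelbase value, the function names, and the recorded segments are all hypothetical. In this model, driving backward with the same steering angle retraces the same arc, so reversing out amounts to replaying the recorded segments in the opposite order.

```python
import math

WHEELBASE_M = 2.8  # assumed wheelbase, for illustration only


def drive(pose, distance_m, steering_rad):
    """Move along a circular arc; a negative distance means reversing.

    pose is a tuple (x, y, heading) in meters and radians.
    """
    x, y, heading = pose
    curvature = math.tan(steering_rad) / WHEELBASE_M
    new_heading = heading + curvature * distance_m
    if abs(curvature) < 1e-12:
        # Wheels straight: move along the current heading.
        x += distance_m * math.cos(heading)
        y += distance_m * math.sin(heading)
    else:
        # Exact integration of position along a constant-curvature arc.
        x += (math.sin(new_heading) - math.sin(heading)) / curvature
        y -= (math.cos(new_heading) - math.cos(heading)) / curvature
    return (x, y, new_heading)


def drive_forward(pose, segments):
    """Drive each (distance, steering angle) segment in order."""
    for distance_m, steering_rad in segments:
        pose = drive(pose, distance_m, steering_rad)
    return pose


def reverse_out(pose, recorded_segments):
    """Replay the recorded segments backward, last segment first."""
    for distance_m, steering_rad in reversed(recorded_segments):
        pose = drive(pose, -distance_m, steering_rad)
    return pose


# Hypothetical path into a parking space: straight, left arc, slight right arc.
start = (0.0, 0.0, 0.0)
segments = [(3.0, 0.0), (4.0, 0.3), (2.0, -0.2)]

parked = drive_forward(start, segments)
back = reverse_out(parked, segments)
print("parked at:", parked)
print("after reversing:", back)
```

In this idealized model the car returns to its starting pose. A real system would also have to cope with wheel slip, sensor error, and obstacles that appeared after the car entered the space, which is why the driver still monitors the surroundings.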

When using either of these modern features, what controls the car when parking or reversing? The car continually uses its many sensors and internal guidance system to make course corrections. All the driver does is indicate their intent; the only actions available to them are to control the speed and to pause or abort the procedure. This seems rather similar to how a rider controls a horse, yet differences do exist.

What should you do on the trail when your horse just won’t settle down—and even tries to bolt?

Clearly, the finer points of the concept of controlling a car differ depending on the nature of the car, and the last word has yet to be spoken on this matter.

Six Levels of Vehicle Autonomy

The Society of Automotive Engineers (SAE) defines six levels of driver assistance technology. These levels have been adopted by the U.S. Department of Transportation (US DOT). The following is taken from the US National Highway Traffic Safety Administration (NHTSA), which is a division of the US DOT:

| Level | Description |
|---|---|
| Level 0 | The human driver does all the driving. |
| Level 1 | An advanced driver assistance system (ADAS) on the vehicle can sometimes assist the human driver with either steering or braking/accelerating, but not both simultaneously. |
| Level 2 | An advanced driver assistance system (ADAS) on the vehicle can itself actually control both steering and braking/accelerating simultaneously under some circumstances. The human driver must continue to pay full attention (“monitor the driving environment”) at all times and perform the rest of the driving task. |
| Level 3 | An automated driving system (ADS) on the vehicle can itself perform all aspects of the driving task under some circumstances. In those circumstances, the human driver must be ready to take back control at any time when the ADS requests the human driver to do so. In all other circumstances, the human driver performs the driving task. |
| Level 4 | An automated driving system (ADS) on the vehicle can itself perform all driving tasks and monitor the driving environment – essentially, do all the driving – in certain circumstances. The human need not pay attention in those circumstances. |
| Level 5 | An automated driving system (ADS) on the vehicle can do all the driving in all circumstances. The human occupants are just passengers, and never need to be involved in driving. |
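The table can be restated as a short lookup that answers the question this article keeps asking: at each level, what is still expected of the human? The sketch below is one reading of the table rows, with hypothetical names (`AutomationLevel`, `human_must_monitor`, `human_is_fallback`); for Levels 3 and 4 the answers apply only within the circumstances in which the system is designed to operate.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """The six levels from the table (member names are illustrative)."""
    LEVEL_0 = 0
    LEVEL_1 = 1
    LEVEL_2 = 2
    LEVEL_3 = 3
    LEVEL_4 = 4
    LEVEL_5 = 5


def human_must_monitor(level):
    """True when the human must watch the driving environment at all times."""
    return level <= AutomationLevel.LEVEL_2


def human_is_fallback(level):
    """True when a human must drive, or be ready to take over on request."""
    return level <= AutomationLevel.LEVEL_3


for level in AutomationLevel:
    print(
        f"Level {level.value}: "
        f"monitor={human_must_monitor(level)}, "
        f"fallback={human_is_fallback(level)}"
    )
```

The step between Level 2 and Level 3 is where the monitoring duty shifts from the human to the system, and the step between Level 3 and Level 4 is where the human stops being the fallback.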

Autonomous Cars

Self-driving cars have analogs in machine learning systems, which are increasingly incorporated into enterprise software.

Tesla cars were advertised as being designed to provide self-driving features; however, when this was written, many of those features were not enabled yet for all customers, and it seemed uncertain if all of them would ever be enabled. Those advanced features include autopilot, autosteer, smart summon, full self-driving, taking direction from a calendar instead of a human, and self-parking.

Tesla’s Autopilot feature is classified as Level 2 vehicle autonomy, which means the vehicle can control steering and acceleration, but a human in the driver’s seat must be able to take control at any time.

Regardless of whether the claims made by Tesla are supportable, the concept of controlling such a vehicle is nuanced. When I read articles like Tesla Recalls 362,758 Vehicles Over Full Self-Driving Software Safety Concerns, I wonder if litigation will arise.

Tesla Autopilot Full Self-Driving Hardware from Tesla.

– From A Tesla on autopilot killed two people in Gardena. Is the driver guilty of manslaughter?, Los Angeles Times, 2022-01-19.

– Mercedes-Benz Says Self-Driving Option Ready to Roll, published in The Detroit Bureau.

– From Living With Artificial Intelligence, Lecture 1 by Prof. Stuart Russell, University of California at Berkeley, 2021.

More could be said regarding autonomy and context, but this serves to introduce the topic.

Update 2023-11-01 Tesla Wins Another Lawsuit

Tesla wins first US Autopilot trial involving fatal crash. I am sure we have not heard the last of this issue.

Update 2023-12-06 GM’s Cruise Dismisses 900 Employees, Cutting 24% of Workforce

After several high-profile accidents, California regulators suspended Cruise’s license to operate as authorities accused the company of hiding details. BNN Bloomberg has more details.

Update 2023-12-14 Massive Tesla Recall

Conclusion

This article is meant to stimulate discussion of a more modern and contextually aware definition for the word ‘control’. Devices that employ computational capability may require a more nuanced definition of control, while devices that go beyond general computational capability and employ machine learning and/or artificial intelligence may require an even more specialized definition.

I have not offered any such definitions; an entire book could be dedicated to deriving them. However, for a specific circumstance, a nuanced and contextually relevant definition could be derived.

Implications

We have discussed the similarity between the concept of controlling a sentient being such as a horse and controlling an autonomous device such as a self-driving vehicle or robotic vacuum cleaner. Court cases that cite horse-and-buggy precedents from 100 years or more ago may soon arise.

Doctrine Of Precedent

On a related note, in 2012, Kyle Graham, Assistant Professor of Law, Santa Clara University, discussed how new technology is gradually incorporated into stare decisis (the doctrine of precedent) in Of Frightened Horses and Autonomous Vehicles: Tort Law and its Assimilation of Innovations.

– An imaginary attorney at some time in the not-too-distant future.

The more things change, the more they stay the same.

– Jean-Baptiste Alphonse Karr

Addendums

Gone: US Blueprint for an AI Bill of Rights

The White House Office of Science and Technology Policy, while under the Biden administration, released the Blueprint for an AI Bill of Rights as a public policy document. Subsequently, IBM published What is the AI Bill of Rights? The second Trump administration (POTUS 47) removed the document.

The document set out the following five core protections, along with a call to action to protect the American public’s rights in an automated world.

- Safe and Effective Systems: protection from unsafe or ineffective systems.

- Algorithmic Discrimination Protections: people should not face discrimination by algorithms, and systems should be used and designed in an equitable way.

- Data Privacy: people should be protected from abusive data practices via built-in protections, and they should have agency over how their data is used. Big tech companies will fight this every way they can.

- Notice and Explanation: People should know that an automated system is being used and understand how and why it contributes to outcomes that impact them. Good luck with that; today's technology is not designed with that capability in mind.

- Alternative Options: People should be able to opt out, where appropriate, and have access to a person who can quickly consider and remedy problems.

– From About the US Blueprint for an AI Bill of Rights

European AI Act and Liability Directive

Europe is leading the way towards a legal framework for AI implementations with the European Artificial Intelligence Act.

– From European Artificial Intelligence Act

“The AI Liability Directive is just a proposal for now, and has to be debated, edited, and passed by the European Parliament and Council of the European Union before it can become law,” as reported by The Register September 29, 2022 in Europe just might make it easier for people to sue for damage caused by AI tech.

– From Questions & Answers: AI Liability Directive

2024-01-22

The final draft was unofficially published.

TAIHA: Proposed Hazard Analysis

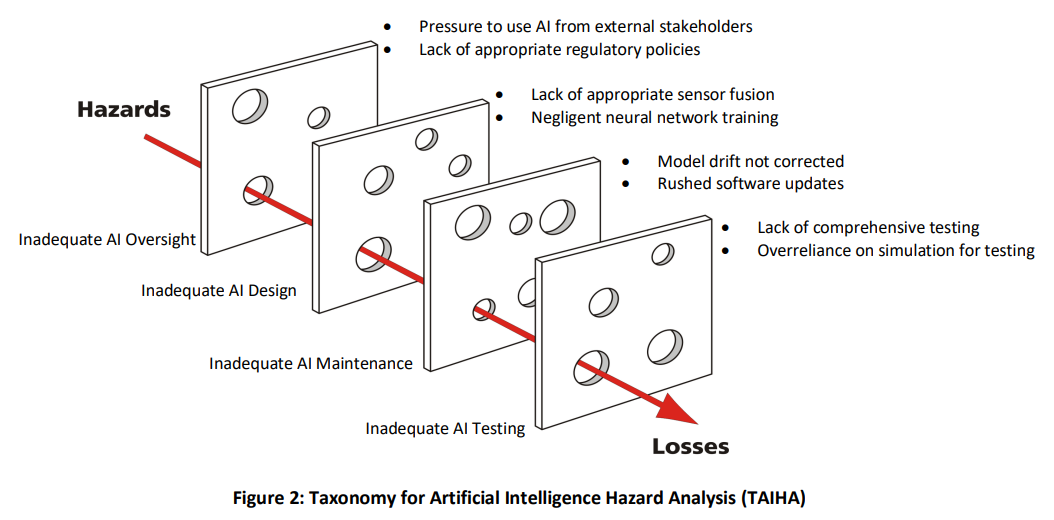

Missy Cummings, Professor and Director of the Mason Autonomy and Robotics Center at George Mason University, has written A Taxonomy for AI Hazard Analysis. This paper will be published in the Journal of Cognitive Engineering and Decision Making.

References

- ‘Control’ from Merriam-Webster Dictionary

- ‘Control’ from Oxford Dictionary

- ‘Control’ from Law.com Dictionary

- ‘Control’ from LawInsider.com

- Have I Been Pwned goes open source, bags help from FBI

- haveibeenpwned.com

- What steps should you take when your email has been pwned?

- ‘Context’ from Lexico.com

- McKinsey & Company: The State of AI in 2020

- ‘Black box’ from Merriam-Webster Dictionary

- Artificial Intelligence: Explainability, ethical issues and bias

- Computing Machinery and Intelligence, Mind

- U.S. eliminates human controls requirement for fully automated vehicles

- 1999 Car of the Century

- Acetylene gas lamps

- Model T Ford Club of America

- BMW driver assistance

- Society of Automotive Engineers

- NHTSA: Automated Vehicles for Safety

- US National Highway Traffic Safety Administration

- Tesla Autopilot

- Tesla Recalls 362,758 Vehicles Over Full Self-Driving Software Safety Concerns

- Tesla Autopilot Full Self-Driving Hardware

- Tesla videos on Vimeo

- A Tesla on autopilot killed two people in Gardena. Is the driver guilty of manslaughter?

- Mercedes-Benz Says Self-Driving Option Ready to Roll

- Living With Artificial Intelligence, Lecture 1

- Tesla wins first US Autopilot trial involving fatal crash

- BNN Bloomberg has more details.

- Tesla forced to recall just about every car it has ever built – Regulators are fuming over the Autopilot driver assist system

- Of Frightened Horses and Autonomous Vehicles: Tort Law and its Assimilation of Innovations

- White House Office of Science and Technology Policy

- Blueprint for an AI Bill of Rights

- What is the AI Bill of Rights?

- European Artificial Intelligence Act

- Europe just might make it easier for people to sue for damage caused by AI tech

- Unofficially published

- Missy Cummings

- A Taxonomy for AI Hazard Analysis