Published 2024-01-18.

Last modified 2025-09-21.

Time to read: 5 minutes.

llm collection.

Stable Diffusion is a type of generative machine learning model called a text-to-image generator; strictly speaking, it is a latent diffusion model rather than a large language model (LLM). Its code and model weights have been open sourced, and it can run on most consumer hardware equipped with a modest GPU with at least 10 GB VRAM.

This article introduces terminology necessary for working with Stable Diffusion, explained without math. The reader should be able to converse intelligently about the concepts behind Stable Diffusion after reading this article.

The next article, stable-diffusion-webui, discusses and demonstrates a powerful and user-hostile user interface for Stable Diffusion by AUTOMATIC1111 that requires a determined effort to master.

The article after that, ComfyUI, discusses and demonstrates a next-generation user interface for Stable Diffusion.

When both user interfaces are installed on a system they can share the same data and use the same Stable Diffusion engine.

Is It Magic?

This technology allows the essential features of input data to be summarized, categorized and extensively manipulated before being re-emitted as new data.

The theoretical foundation of Stable Diffusion seems like real-life magic. Words are correlated to images, and incantations become reality after a brief computational pause.

Some heavy mathematical concepts are employed to make this possible, along with emergent properties of neural networks that hint at a relatively new form of physics. The age-old question of whether mathematics was invented or discovered seems to be answered: math was discovered, not invented, and mere awareness is sufficient to gift aware people with new creative power.

Knowing when and how to recite incantations can change reality. That is a classical definition of magic, is it not?

Terminology

- Latent

- The adjective latent is used to convey the idea that the underlying structure or hidden relationships within input data have been captured, and are now available for modification.

When you read or hear latent used as an adjective in a machine learning context, think “hidden”.

Do not confuse latent with potential or dark, which are adjectives often used by physicists. The term dark energy refers to some unknown and unexplainable characteristics of the Universe.

By any definition, latent implies invisible. Pure energy is also invisible. Both potential energy and latent energy are terms for two types of pure energy.

These three concepts are distinct from one another, yet they share the similarity of all being abstract concepts with real-world implications.

- Latent features

- Latent features, or hidden features, are features that are not directly observed, but can be extracted by an algorithm.

Machine learning models transform input data into abstract (and invisible) data representations, called latent features, within a latent space.

- Latent space

- This is the invisible workshop where generative models perform their magic.

The latent space is the portion of a machine learning system that represents the latent features found in the data. It is often used for clustering, visualization, and interpolation.

The latent space is the working portion of a generative model's data model. It is an abstract, lower-dimensional representation of high-dimensional data. In it, the complex data structures in the original data have been simplified, making it easier to discover latent features (hidden patterns) in the data.

Once input data is transformed into latent space, it can easily be manipulated. Generative models transform input data and create new data within the latent space. Once the transformation process is complete, the data within the latent space is transformed back into a higher-dimensional form for our enjoyment.

- Latent representation

- A latent representation exploits semantically similar words, based on their occurrence in a text (their context), to establish a meaningful relationship between them.

- Latent variable

- In machine learning, a variable that can be inferred from the data, but cannot be directly observed or measured, is called a latent variable.

- Latent model

- Latent models project data from a higher-dimensional space to a lower-dimensional space (latent space), forming a condensed representation of the data.

- Projecting into a latent space

- Projecting input data into a latent space captures the essential attributes of the data in fewer dimensions. You can think of this as a form of data compression.

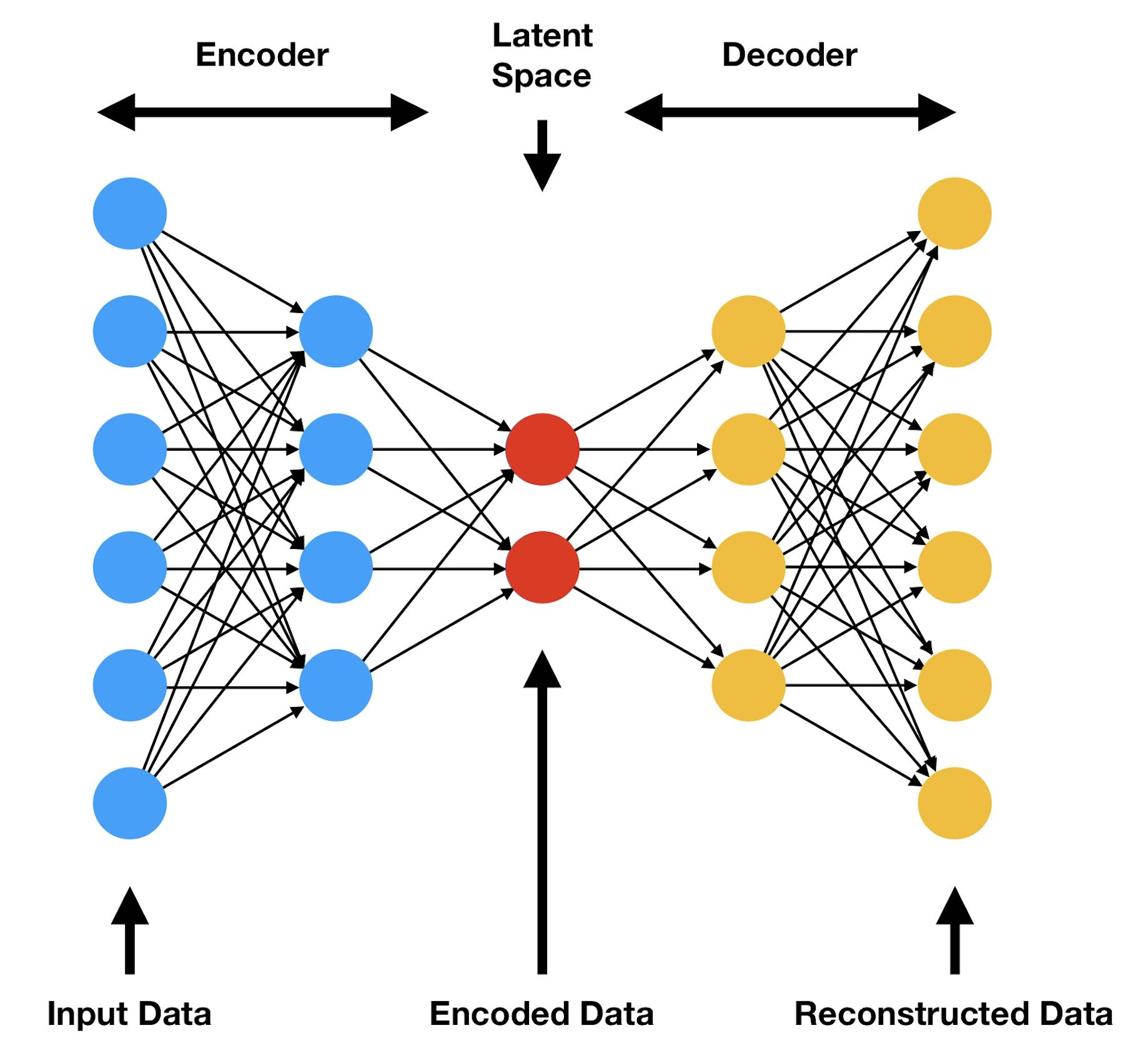

Neural networks can perform many types of tasks, such as classification, regression, and image reconstruction. The usual process is to extract features through many neural network layers. Common types of layers include convolutional, recurrent, and pooling layers. The function that maps the input data to the penultimate layer projects it onto the latent space.

- Autoencoder

- An autoencoder is a neural network composed of an encoder and a decoder. The encoder encodes the input data into a latent space, and the decoder reconstructs the original input from the encoded data. Autoencoders do not generate new data.

- Generative Adversarial Network (GAN)

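- A method of adversarial training that pits two opposing networks against each other: a generator, which creates new data, and a discriminator, which tries to tell the generated data from real data. Across multiple iterations, each network pushes the other to improve, and the generated data gets better as a result.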

- Variational autoencoders (VAE)

- Variational autoencoders normalize (regularize) the distribution of their encodings during training in order to ensure that their latent space has good properties. This allows VAEs to generate new data.

The adjective “variational” comes from the similarity between the regularization process and the statistical method called variational inference.

- Negative prompt

- Describes attributes that the generated data should not have. Recommendation for professionals who implement these systems: include default negatives for all models, such as nudity, racism, oppression, and exploitation.

- TAESD

- TAESD is an autoencoder which uses the same latent API as Stable Diffusion. TAESD can decode Stable Diffusion's latents into full-size images at nearly zero cost. Since TAESD is very fast, you can use it to watch Stable Diffusion's image generation progress in real time. However, the output is somewhat smudged; for best quality, use the default autoencoder.
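To make the encode, manipulate, decode cycle from the terminology above a little more concrete, here is a minimal sketch in plain Python. It is not how Stable Diffusion's autoencoder works internally: it assumes a hand-picked linear projection from 2 dimensions down to 1, and the names `DIRECTION`, `encode` and `decode` are invented for this illustration.

```python
import math

# Hypothetical data: 2-D points that lie close to the line y = 2x.
# One number per point (its position along that line) captures most of the data.
DIRECTION = (1 / math.sqrt(5), 2 / math.sqrt(5))  # unit vector along y = 2x


def encode(point):
    """Project a 2-D point into a 1-D latent space (a single number)."""
    x, y = point
    return x * DIRECTION[0] + y * DIRECTION[1]


def decode(latent):
    """Map a 1-D latent value back to a 2-D point on the line."""
    return (latent * DIRECTION[0], latent * DIRECTION[1])


points = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.0)]
latents = [encode(p) for p in points]          # compressed form: 3 numbers, not 6
reconstructed = [decode(z) for z in latents]   # approximate originals

for original, z, approx in zip(points, latents, reconstructed):
    print(original, "->", round(z, 3), "->", tuple(round(v, 3) for v in approx))

# Manipulating the latent value and decoding it yields a point that was
# never in the input, which is the basic idea behind generating new data.
midpoint = decode((latents[0] + latents[2]) / 2)
print("new point:", tuple(round(v, 3) for v in midpoint))
```

The reconstruction is only approximate for points that are not exactly on the line, which is the sense in which projecting into a latent space behaves like lossy data compression.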

Below is a good video which introduces commonly used terminology.

Stan Tse (@Gonkee), the author, is a young man in full Dad mode. I find his behavior entertaining, and his information useful.

Transcribe This Video

This next video blew me away. It is a no-holds-barred, Python-code-writing tour-de-force that explains and then implements some of the main concepts in the above video. Get a cup of coffee before watching it. The code is on GitHub.

You also might want to read a transcription of this video. It is a very dense condensation of knowledge, and the material flies by fast. Reading along with a transcript greatly assists comprehension; merely turning on YouTube captions is insufficient to comprehend the verbal firehose that this video subjects you to.

Please understand, I mean this in the nicest way. The speaker has mastered his subject, and he is merciless in his joyful recounting of the story. Use every advantage you can to assimilate the information.

I explained how easy it is to get such a transcript in OpenAI Whisper.

Just visit the free Whisper Large V3 public instance, click on the YouTube tab, paste in the URL for the YouTube video (https://www.youtube.com/watch?v=vu6eKteJWew), then press the Submit button.

Here is the transcription; all I did was wrap it at 72 columns:

In this video, I'll cover the implementation of diffusion models. We'll create DDPM for now, and in later videos, move to stable diffusion with text prompts. In this one, we'll be implementing the training and sampling part for DDPM. For our model, we'll actually implement the architecture that is used in latest diffusion models, rather than the one originally used in DDPM. We'll dive deep into the different blocks in it, before finally putting everything in code, and see results of training this diffusion model on grayscale and RGB images. I'll cover the specific math of diffusion models that we need for implementation very quickly in the next few minutes. But this should only act as a refresher, so if you're not aware of it and are interested in knowing it, I would suggest to first see my diffusion math video that's linked above. The entire diffusion process involves a forward process where we take an image and create noisier versions of it step by step, by adding Gaussian noise. After a large number of steps, it becomes equivalent to a sample of noise from a normal distribution. We do this by applying this transition function at every time step t, and beta is a scheduled noise which we add to the image at t-1 to get the image at t. We saw that having alpha as 1-beta and computing cumulative products of these alphas at time t allows us to jump from original image to noisy image at any time step t in the forward process. We then have a model learn the reverse process distribution and because the reverse diffusion process has the same functional form as the forward process which here is a Gaussian, we essentially want the model to learn to predict its mean and variance. 
After going through a lot of derivation from the initial goal of optimizing the log likelihood of the observed data, we ended with the requirement to minimize the KL divergence between the ground truth denoising distribution conditioned on x0, which we computed as having this mean and this variance, and the distribution predicted by our model. We fixed the variance to be exactly same as the target distribution and rewrite the mean in the same form. After this, minimizing KL divergence ends up being minimizing square of difference between the noise predicted and the original noise sample. Our training method then involves sampling an image, timestep t, and a noise sample and feeding the model the noisy version of this image at sample timestep t using this equation. The cumulative product terms needs to be coming from the noise scheduler, which decides the schedule of noise added as we move along time steps. And loss becomes the MSE between the original noise and whatever the model predicts. For generating images, we just sample from our learned reverse distribution, starting from a noise sample xT from a normal distribution and then computing the mean using the same formulation, just in terms of xt and noise prediction, and variance is same as the ground truth denoising distribution conditioned on x0. Then we get a sample from this reverse distribution using the reparameterization trick and repeating this gets us to x0. And for x0 we don't add any noise and simply return the mean. This was a very quick overview and I had to skim through a lot. For a detailed version of this, I would encourage you to look at the previous diffusion video. So for implementation, we saw that we need to do some computation for the forward and the reverse process. So we will create a noise scheduler which will do these two things for us.
For the forward process, given an image and a noise sample and timestep t, it will return the noisy version of this image using the forward equation. And in order to do this efficiently, it will store the alphas, which is just 1 minus beta, and the cumulative product terms of alpha for all t. The authors use a linear noise scheduler where they linearly scale beta from 1e-4 to 0.02 with 1000 time steps between them and we will also do the same. The second responsibility that this scheduler will do is given an xt and noise prediction from the model, it'll give us xt-1 by sampling from the reverse distribution. For this, it'll compute the mean and variance according to their respective equations and return a sample from this distribution using the reparameterization trick. To do this, we also store 1 minus alpha t, 1 minus the cumulative product terms, and its square root. Obviously, we can compute all of this at runtime as well, but pre-computing them simplifies the code for the equation a lot. So let's implement the noise scheduler first. As I mentioned, we'll be creating a linear noise schedule. After initializing all the parameters from the arguments of this class, we'll create betas to linearly increase from start to end such that we have beta t from 0 till the last time step. We'll then initialize all the variables that we need for forward and reverse process. The add_noise method is our forward process. So it will take in an image, original noise sample and time step t. The images and noise will be of B x C x H x W and time step will be a 1D tensor of size B. For the forward process we need the square root of cumulative product terms for the given time steps and 1 minus that and then we reshape them so that they are B x 1 x 1 x 1. Lastly we apply the forward process equation.
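A brief pause in the transcript: the linear noise schedule and the forward process just described can be sketched in a few lines. This is my own minimal illustration in plain Python rather than the PyTorch code from the video; the beta range and the 1000 time steps come from the transcript, while the variable names, the use of flat lists instead of B x C x H x W tensors, and the tiny five-pixel "image" are assumptions made for readability.

```python
import math
import random

NUM_TIMESTEPS = 1000
BETA_START = 1e-4
BETA_END = 0.02

# Betas increase linearly from BETA_START to BETA_END, one per timestep.
betas = [
    BETA_START + (BETA_END - BETA_START) * t / (NUM_TIMESTEPS - 1)
    for t in range(NUM_TIMESTEPS)
]
alphas = [1.0 - b for b in betas]

# Cumulative products of the alphas, precomputed for every timestep.
alpha_cum_prod = []
running = 1.0
for a in alphas:
    running *= a
    alpha_cum_prod.append(running)


def add_noise(pixels, noise, t):
    """Forward process: jump from the clean image straight to timestep t."""
    signal_scale = math.sqrt(alpha_cum_prod[t])
    noise_scale = math.sqrt(1.0 - alpha_cum_prod[t])
    return [signal_scale * x + noise_scale * n for x, n in zip(pixels, noise)]


rng = random.Random(0)
image = [-1.0, -0.5, 0.0, 0.5, 1.0]            # a tiny "image", scaled to [-1, 1]
noise = [rng.gauss(0.0, 1.0) for _ in image]   # Gaussian noise sample

slightly_noisy = add_noise(image, noise, t=0)
mostly_noise = add_noise(image, noise, t=NUM_TIMESTEPS - 1)

print("signal scale at t=0:  ", math.sqrt(alpha_cum_prod[0]))
print("signal scale at t=999:", math.sqrt(alpha_cum_prod[-1]))
```

At the first time step nearly all of the image survives, and at the last time step almost nothing but noise is left, which is why sampling can start from pure Gaussian noise.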
The second function will be the guy that takes the image xt and gives us a sample from our learned reverse distribution. For that we'll have it receive xt and noise prediction from the model and timestep t as the argument. We'll be saving the original image prediction x0 for visualizations and get that using this equation. This can be obtained using the same equation for forward process that takes from x0 to xt by just rearranging the terms and using noise prediction instead of the actual noise. Then for sampling we'll compute the mean and noise is only added for other time steps. The variance of that is same as the variance of ground truth denoising, which was this. And lastly we'll sample from a Gaussian distribution with this mean and variance using the reparameterization trick. This completes the entire noise scheduler which handles the forward process of adding noise and the reverse process of sampling first. Let's now get into the model. For diffusion models we are actually free to use whatever architecture we want as long as we meet two requirements. The first being that the shape of the input and output must be same and the other is some mechanism to fuse in time step information. Let's talk about why for a bit. The information of what time step we are at is always available to us, whether we are at training or sampling. And in fact, knowing what time step we are at would aid the model in predicting original noise, because we are providing the information that how much of that input image actually is noise. So instead of just giving the model an image, we also give the timestep that we are at. For the model, I'll use U-Net, which is also what the authors use, but for the exact specification of the blocks, activations, normalizations and everything else, I'll mimic the Stable Diffusion U-Net used by Hugging Face in the diffusers pipeline.
That's because I plan to soon create a video on Stable Diffusion, so that'll allow me to reuse a lot of code that I'll create now. Actually, even before going into the U-Net model, let's first see how the time step information is represented. Let's call this the time embedding block which will take in a 1D tensor of time steps of size B which is batch size and give us a t_emb_dim size representation for each of those timesteps in the batch. The time embedding block would first convert the integer timesteps into some vector representation using an embedding space. That will then be fed to two linear layers separated by activation to give us our final timestep representation. For the embedding space, the authors used the sinusoidal position embedding used in transformers. For activations, everywhere I have used sigmoid linear units, but you can choose a different one as well. Okay, now let's get into the model. As I mentioned, I'll be using U-Net just like the authors, which is essentially this encoder-decoder architecture, where encoder is a series of downsampling blocks where each block reduces the size of the input, typically by half, and increases the number of channels. The output of final downsampling block is passed to layers of midblock which all work at the same spatial resolution. And after that we have a series of upsampling blocks. These one by one increase the spatial size and reduce the number of channels to ultimately match the input size of the model. The upsampling blocks also fuse in the output coming from the corresponding downsampling block at the same resolution via residual skip connections. Most of the diffusion models usually follow this U-Net architecture, but differ based on specifications happening inside the blocks.
And as I mentioned, for this video I have tried to mimic to some extent what's happening inside the Stable Diffusion U-Net from Hugging Face. Let's look closely into the down block and once we understand that, the rest are pretty easy to follow. Down blocks of almost all the variations would be a ResNet block followed by a self-attention block and then a downsample layer. For our ResNet plus self-attention block, we'll have group norm followed by activation followed by a convolutional layer. The output of this will again be passed to a normalization, activation and convolutional layer. We add a residual connection from the input of first normalization layer to the output of second convolutional layer. This entire thing is what will be called as a ResNet block, which you can think of as two convolutional blocks plus residual connection. This is then followed by a normalization and a self-attention layer, and again residual connection. We have multiple such ResNet plus self-attention layers, but for simplicity our current implementation will only have one layer. The code on the repo however will be configurable to make as many layers as desired. We also need to fuse the time information and the way it's done is that each ResNet block has an activation followed by a linear layer. And we pass the time embedding representations through them first before adding to the output of the first convolutional layer. So essentially this linear layer is projecting the t_emb_dim timestep representation to a tensor of same size as the channels in the convolutional layer's output. That way these two can be added by replicating this timestep representation across the spatial dimension. Now that we have seen the details inside the block, to simplify, let's replace everything within this part as a ResNet block and within this as a self-attention block. The other two blocks are using the same components and just slightly different.
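Another short aside from the transcript: the way the timestep representation is projected to one value per channel and then replicated across the spatial dimension is easier to see with small numbers. The sketch below is my own plain-Python illustration for a single sample, not the PyTorch code from the video; the function names, the weights and the sizes (t_emb_dim of 4, 2 channels, a 2 x 2 feature map) are all made up for the example.

```python
import math


def silu(x):
    """Sigmoid linear unit, the activation the video says it uses everywhere."""
    return x / (1.0 + math.exp(-x))


def project_time_embedding(t_emb, weights, bias):
    """Activation followed by a linear layer: t_emb_dim values in, one value per channel out."""
    activated = [silu(v) for v in t_emb]
    return [
        sum(w * v for w, v in zip(row, activated)) + b
        for row, b in zip(weights, bias)
    ]


def add_time_to_feature_map(feature_map, per_channel):
    """Add one number per channel to every spatial cell of a C x H x W feature map."""
    return [
        [[cell + offset for cell in row] for row in channel]
        for channel, offset in zip(feature_map, per_channel)
    ]


# Hypothetical sizes: t_emb_dim = 4, out_channels = 2, spatial size 2 x 2.
t_emb = [0.5, -1.0, 0.25, 2.0]
weights = [
    [0.1, 0.2, 0.3, 0.4],      # row producing the value for channel 0
    [-0.4, -0.3, -0.2, -0.1],  # row producing the value for channel 1
]
bias = [0.0, 1.0]

conv_output = [
    [[1.0, 2.0], [3.0, 4.0]],  # channel 0 of the first convolution's output
    [[5.0, 6.0], [7.0, 8.0]],  # channel 1
]

per_channel = project_time_embedding(t_emb, weights, bias)
fused = add_time_to_feature_map(conv_output, per_channel)
print(per_channel)
print(fused)
```

Every cell within a channel is shifted by the same amount, so the time step changes what each channel means without disturbing the spatial layout of the feature map.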
Let's go back to our previous illustration of all three blocks. We saw that down block is just multiple layers of ResNet followed by self-attention. And lastly we have a down sampling layer. Up block is exactly the same, except that it first upsamples the input to twice the spatial size, and then concatenates the down block output of the same spatial resolution across the channel dimension. Post that, it's the same layers of ResNet and self-attention blocks. The layers of mid block always maintain the input to the same spatial resolution. The Hugging Face version has first one ResNet block, and then followed by layers of self-attention and ResNet. So I also went ahead and made the same implementation. And let's not forget the timestep information. For each of these ResNet blocks, we have a timestep projection layer. This was what we just saw, an activation followed by a linear layer. The existing timestep representation goes through these blocks before being added to the output of first convolution layer of the ResNet block. Let's see how all of this looks in code. The first thing we'll do is implement the sinusoidal position embedding code. This function receives B-sized 1D tensor timesteps, where B is the batch size, and is expected to return B x t_emb_dim tensor. We first implement the factor part, which is everything that the position, which here is the timestep integer value, will be divided with inside the sine and cosine functions. This will get us all values from 0 to half of the time embedding dimension size, half because we will concatenate sine and cosine. After replicating the time step values, we get our desired shape tensor and divide it by the factor that we computed. This is now exactly the arguments for which we have to call the sine and cosine function. Again all this method does is convert the integer timestep representation to embeddings using a fixed embedding space. Now we will be implementing the down block.
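Before the transcript moves on to the down block, here is a minimal sketch of the sinusoidal time embedding it just described. This is my own plain-Python illustration, not the code from the video: the function name `get_time_embedding` is chosen for the example, and the base of 10000 is an assumption taken from the position embedding in the original Transformer paper, which the transcript says the authors reuse.

```python
import math


def get_time_embedding(time_steps, t_emb_dim):
    """Convert integer timesteps into a B x t_emb_dim list of sinusoidal embeddings."""
    if t_emb_dim % 2 != 0:
        raise ValueError("t_emb_dim must be even: half sine values, half cosine values")
    half = t_emb_dim // 2

    # The "factor part": what each timestep is divided by inside sine and cosine.
    factors = [10000 ** (i / half) for i in range(half)]

    embeddings = []
    for t in time_steps:
        angles = [t / f for f in factors]
        # Concatenate the sine half and the cosine half.
        embeddings.append([math.sin(a) for a in angles] + [math.cos(a) for a in angles])
    return embeddings


batch_of_timesteps = [0, 10, 999]   # B = 3
emb = get_time_embedding(batch_of_timesteps, t_emb_dim=8)

for t, row in zip(batch_of_timesteps, emb):
    print(t, [round(v, 3) for v in row])
```

Nothing here is learned: the same timestep always maps to the same vector, which is what the transcript means by a fixed embedding space. The two linear layers that follow in the time embedding block are where the learning happens.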
But before that, let's quickly take a peek at what layers we need to implement. So we need layers of ResNet plus self-attention blocks. ResNet will be two norm activation convolutional layers with residual and self-attention will be norm followed by self-attention. We also need the time projection layers which will project the time embedding onto the same dimension as the number of channels in the output of first convolution feature map. I'll only implement the block to have one layer for now hence we'll only need single instances of these. And after ResNet and self-attention, we have a downsampling. Okay back to coding it. For each down block, we'll have these arguments. in_channels is the number of channels expected in input. out_channels is the channels we want in the output of this down block. Then we have the embedding dimension. I also add a downsample argument, just so that we have the flexibility to ignore the downsampling part in the code. Lastly num_heads is the number of heads that our attention block will have. This is our first convolution block of ResNet. We make the channel conversion from input to output channels via the first conv layer itself. So after this everything will have out_channels as the number of channels. Then these are the time projection layers for this ResNet block. Remember each ResNet block will have one of these and we had seen that this was just activation followed by a linear layer. The output of this linear layer should have out_channels so that we can do the addition. This is the second conv block which will be exactly same except everything operating on out_channels as the channel dimension. And then we add the attention part, the normalization and multihead attention. The feature dimension for multihead attention will be same as the number of channels.
This residual connection is 1x1 conv layer and this ensures that the input to the entire ResNet block can be added to the output of the last conv layers. And since the input was in_channels, we have to first transform it to out_channels so this just does that. And finally we have the downsample layer which can also be average pooling but I've used convolution with stride 2 and if the arguments convey to not downsample then this is just identity. The forward method will be very simple. We first pass the input to the first conv block and then add the time information and then after going through the second conv block we add the residual but only after passing through the 1x1 conv layer. Attention will happen between all the spatial HxW cells, with out_channels being the feature dimensionality of each of those cells. So the transpose just ensures that the channel features are the last dimension. And after the channel dimension has been enriched with self-attention representation, we do the transpose back and again have the residual connection. If we would be having multiple layers then we would loop over this entire thing but since we are only implementing one layer for now, we'll just call the downsampling convolution after this. Next up is mid block and again let's revisit the illustration for this. For mid block we'll have a ResNet block and then layers of self-attention, followed by ResNet. Same as down block, we'll only implement one layer for now. The code for mid block will have same kind of layers, but we need 2 instances of every layer that belongs to the ResNet block, with just one difference, that is we call the first ResNet block and then self-attention and second ResNet block. Had we implemented multiple layers, the self-attention and the following ResNet block would have a loop. Now let's do up block, which will be exactly same as down block except that instead of down sampling we'll have a up sampling layer.
We'll use conv transpose to do the up sampling for us. In the forward method, let's first copy everything that we did for down block. Then we need to make three changes. Add the same spatial resolutions down block output as argument. Then before ResNet plus self-attention blocks, we'll upsample the input and concat the corresponding down block output. Another way to implement this could be to first concat, followed by ResNet and self-attention and then upsample, but I went with this one. Finally we'll build our U-Net class. It will receive the channels in input image as argument. We'll hardcode the down channels and mid channels for now. The way the code is implemented is that these 4 values of down channels will essentially be converted into 3 down blocks, each taking input of channel i dimensions and converting it to output of channel i plus 1 dimensions. And same for the mid blocks. This is just the downsample arguments that we are going to pass to the blocks. Remember our time embedding block had position embedding followed by linear layers with activation in between. These are those two linear layers. This is different from the timestep layers which we had for each ResNet block. This will only be called once in an entire forward pass, right at the start to get initial timestep representation. We'll also first have to convert the input to have the same channel dimensions as the input of first down block and this convolution will just do that for us. We then create the down blocks, mid blocks and up blocks based on the number of channels provided. For the last up block, I simply hardcode the output channel as 16. The output of last up block undergoes a normalization and convolution to get us to the same number of channels as the input image. We'll be training on MNIST dataset, so the number of channels in the input image would be one.
In the forward method, we first call the conv_in layer, and then get the timestep representation by calling the sinusoidal position embedding, followed by our linear layers. Then we just call the down blocks, and we keep saving the output of down blocks because we need it as input for the up block. During up block calls, we simply take down outputs from that list one by one and pass that together with the current output. And then we call our normalization, activation and output convolution. Once we pass a 4x1x28x28 input tensor to this, we get the following output shapes. So you can see because we had downsampled only twice, our smallest size input to any convolution layer is 7x7. The code on the repo is much more configurable and creates these blocks based on whatever configuration is passed and can create multiple layers as well. We'll look at a sample config file later, but first let's take a brief look at the dataset, training and sampling code. The dataset class is very simple, it just takes in the path where the images are and then stores the filename of all those images in there. Right now we are building unconditional diffusion model, so we don't really use the labels. Then we simply load the images and convert it to tensor and we also scale it from minus one to one, just like the authors, so that our model consistently sees similarly scaled images as compared to the random noise. Moving to train_ddpm file, where the train function loads up the config and gets the model, dataset, diffusion and training configurations from it. We then instantiate the noise scheduler, dataset and our model. After setting up the optimizer and the loss functions, we run our training loop. Here we take our image batch, sample random noise of shape B x 1 x H x W, and sample random timesteps.
The scheduler adds noise to these batch images based on the sample timesteps, and we then backpropagate based on the loss between noise prediction by our model and the actual noise that we added. For sampling, similar to training, we load the config and necessary parameters, our model and noise scheduler. The sample method then creates a random noise sample based on number of images requested and then we go through the time steps in reverse. For each time step we get our model's noise prediction and call the reverse process of scheduler that we had created with this xt and noise prediction and then it returns the mean of xt-1 and estimate of the original image. We can choose to either save one of these to see the progress of sampling. Now let's also take a look at our config file. This just has the dataset parameters, which stores our image path. Model params, which stores parameters necessary to create model like the number of channels, down channels and so on. Like I had mentioned, we can put in the number of layers required in each of our down, mid and up blocks. And finally we specify the training parameters. The U-Net class in the repo has blocks, which actually read this config and create model based on whatever configuration is provided. It does everything similar to what we just implemented, except that it loops over the number of layers as well. And I've also added shapes of the output that we would get at each of those block calls so that it helps a bit in understanding everything. For training, as I mentioned, I train on MNIST, but in order to see if everything works for RGB images, I also train on this dataset of texture images, because I already have it downloaded since my video on implementing DALL-E. Here is a sample of images from this dataset. These are not generated, these are images from the dataset itself.
Though the dataset has 256x256 images, I resized the images to be 28x28, primarily because I lack two important things for training on larger sized images, patience and compute, rather cheap compute. For MNIST I train it for about 20 epochs taking 40 minutes on V100 GPU and for this texture dataset I train for about 60 epochs taking roughly about 3 hours. And that gives me these results. Here I am saving the original image prediction at each time step. And you can see that because MNIST images are all similar looking, the model pretty quickly gets a decent original image prediction, whereas for the texture dataset it doesn't till about last 200-300 time steps. But by the end of all the steps we get decent results for both the datasets. You can obviously train it on a larger size dataset, though probably you would have to maybe increase the channels and maybe train for longer epochs to get nice results. So that's all that I wanted to cover for implementing DDPM. We went through scheduler implementation, U-Net implementation and saw how everything comes together in the training and sampling code. Hopefully it gave you a better understanding of diffusion models. And thank you so much for watching this video and if you are liking the content and getting benefit from it, do subscribe the channel. See you in the next video.
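The reverse step that the transcript describes for the noise scheduler (estimate the original image, compute the mean, then add noise at every time step except the last one) can be sketched as follows. This is my own plain-Python illustration of the standard DDPM equations, not the code from the video: the function name `sample_prev_timestep`, the flat lists standing in for image tensors, and the round-trip demonstration at the end are assumptions made for the example. No trained model is involved, so the true noise is passed in where a model's prediction would normally go.

```python
import math
import random

NUM_TIMESTEPS = 1000

# Same linear schedule as in the transcript: beta goes from 1e-4 to 0.02.
betas = [1e-4 + (0.02 - 1e-4) * t / (NUM_TIMESTEPS - 1) for t in range(NUM_TIMESTEPS)]
alphas = [1.0 - b for b in betas]
alpha_cum_prod = []
running = 1.0
for a in alphas:
    running *= a
    alpha_cum_prod.append(running)


def sample_prev_timestep(xt, noise_pred, t, rng):
    """Return (sample of x at t-1, estimate of x0) from xt and a noise prediction."""
    sqrt_one_minus = math.sqrt(1.0 - alpha_cum_prod[t])

    # Estimate of the original image: the forward equation rearranged for x0.
    x0 = [(x - sqrt_one_minus * e) / math.sqrt(alpha_cum_prod[t])
          for x, e in zip(xt, noise_pred)]

    # Mean of the reverse distribution.
    mean = [(x - betas[t] * e / sqrt_one_minus) / math.sqrt(alphas[t])
            for x, e in zip(xt, noise_pred)]

    if t == 0:
        return mean, x0  # last step: no noise is added, just return the mean

    # Variance matches the ground truth denoising distribution.
    variance = betas[t] * (1.0 - alpha_cum_prod[t - 1]) / (1.0 - alpha_cum_prod[t])
    sigma = math.sqrt(variance)
    return [m + sigma * rng.gauss(0.0, 1.0) for m in mean], x0


# Round trip: noise a tiny "image" to timestep 500, then ask for one reverse step.
rng = random.Random(1)
image = [0.5, -0.25]
true_noise = [0.3, -1.2]
t = 500
xt = [math.sqrt(alpha_cum_prod[t]) * x + math.sqrt(1.0 - alpha_cum_prod[t]) * e
      for x, e in zip(image, true_noise)]

x_prev, x0_estimate = sample_prev_timestep(xt, true_noise, t, rng)
print("estimate of x0:", [round(v, 6) for v in x0_estimate])
```

When the noise prediction is perfect, the estimate of the original image is exact, which is why the quality of the model's noise prediction is the only thing the training loss needs to measure.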

References

- Stable Diffusion Art: tutorials, workflows and tools.

- r/StableDiffusion on Reddit

- VAE on Wikipedia

- Understanding Variational Autoencoders (VAEs) by Joseph Rocca