Published 2024-01-21.

Last modified 2025-09-21.

Time to read: 11 minutes.

llm collection.

After playing around a bit with Stable Diffusion WebUI by AUTOMATIC1111, I was impressed by its power and dismayed by how horrible the user interface was. Documentation is fragmented, and the state of the art is moving so rapidly that by the time you get up to speed, everything has changed and you must start the learning process over again.

About ComfyUI

ComfyUI (GitHub) is a free, open-source, next-generation user interface for generative AI programs such as Stable Diffusion, Flux, Nvidia Cosmos Predict2, and audio models.

OUR MISSION

We want to build the operating system for Gen AI.

ComfyUI is building foundational open-source software for the visual AI space. We make tools for the artist of the future: a human equipped with AI who can be an order of magnitude more productive than before. We unlock animators, creative directors, and startups to use AI without paying money to large, closed AI companies. We empower the individual who was not born with the gift of the brush to also be a painter.

ComfyUI builds software that equips our users to solve their own problems using AI. We provide a set of building blocks that enables users to make software that solves their own problems, often without writing a single line of code. Because of this, ComfyUI is used for many things: creating videos, 3D assets, images, music, art exhibits, powering other apps as an API, and more.

License

Since ComfyUI is under GPLv3, if ComfyUI is integrated into a project and made available to others, that project will also be subject to the GPLv3 license. Other popular software that uses GPLv3 includes Blender, GIMP, and the Bash shell.

Warning: GPLv3 is a strong copyleft license.

Do not use ComfyUI if your project must avoid copyleft contamination.

This caveat applies even if your application is not distributed with GPLv3 code, but you make users download it separately.

If you wish to distribute your project, including software, you must be aware of GPLv3 contamination, which makes your entire software project subject to the GPLv3 license. Do not combine GPLv3 components with proprietary code if you want to distribute your software project. If you or your company intends to profit from your software, you should avoid GPLv3 code entirely.

If you do distribute your software project, and you used a GPLv3 component as part of your application (even if only linking at run-time to a library), then you must make the entire source code of your project available. This is true even if:

- You do not charge money.

- You do not change the GPLv3 component.

Happily, if you use GPLv3 software such as ComfyUI to make a video, the resulting video is not subject to any licensing restriction. The GPLv3 license applies to the software itself and any derivative works of that software, not to the output or content produced by using the software, provided the output does not include or incorporate the GPLv3-licensed code.

Any output, for example, audio and video files, is not subject to GPLv3 restrictions, even if GPLv3 software was used to create it, so long as the output is a distinct work (as opposed to being a covered work) and does not incorporate the software.

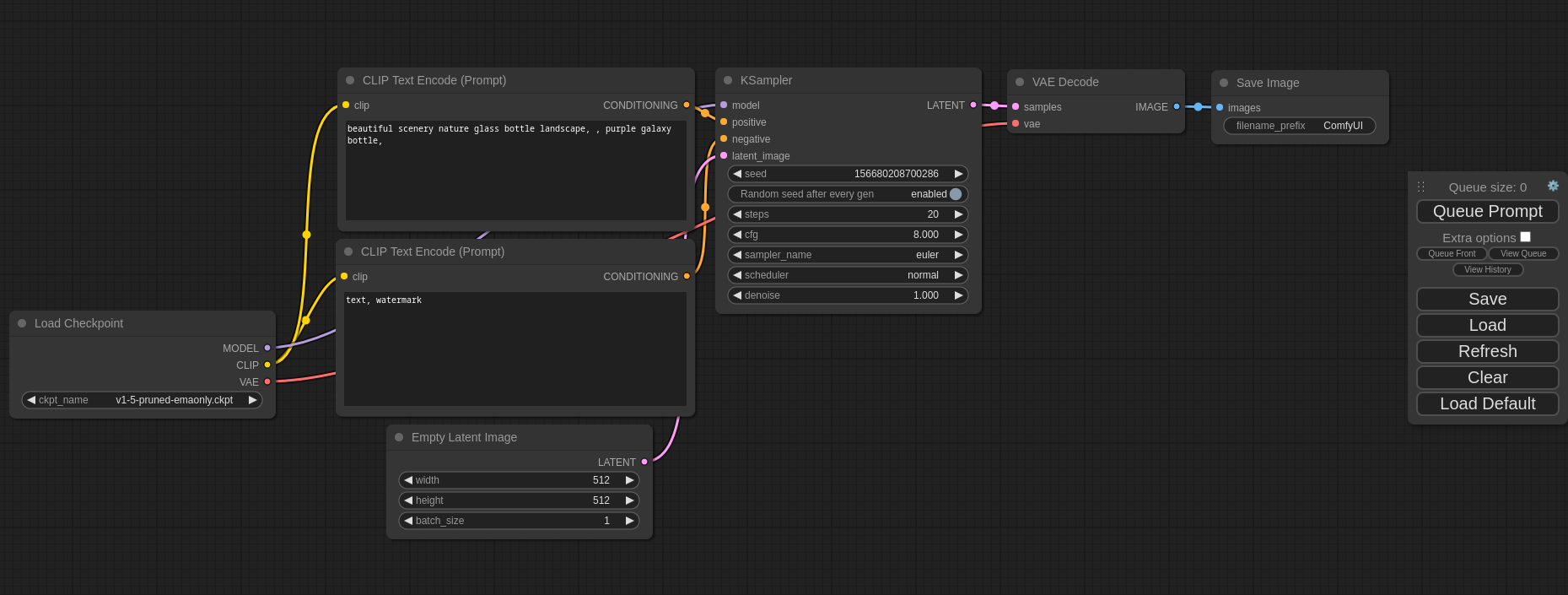

Graph/Node Interface

Instead of many tabs, buttons, and small text boxes, ComfyUI uses a graph/node interface to visually connect functional blocks, or nodes. Graph/node user interfaces enable detailed control of a model and make it easy to define AI media project pipelines.

The Fusion component of DaVinci Resolve also uses a graph/node user interface, as does TouchDesigner. In fact, ComfyUI is beginning to encroach on TouchDesigner’s territory.

Technical people might find this new interface natural; however, non-technical people might take longer to feel comfortable with this approach.

GPU Support

Although GPUs from NVIDIA, AMD, and Intel are supported, the only GPUs that reliably provide meaningful benefit are NVIDIA 3000 series and above.

Useful Infobits

ComfyUI Examples (GitHub) has many ComfyUI example projects.

The ComfyUI Community Docs contains this useful gem:

To load the associated flow of a generated image, simply load the image via the Load button in the menu, or drag and drop it into the ComfyUI window. This will automatically parse the details and load all the relevant nodes, including their settings.

Only parts of the graph that have an output with all the correct inputs will be executed.

Only parts of the graph that change from each execution to the next will be executed; if you submit the same graph twice, only the first will be executed. If you change the last part of the graph, only the part you changed and the part that depends on it will be executed.
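The caching behavior described above can be illustrated with a toy graph executor. This is only a minimal sketch and not ComfyUI's actual implementation: the `Node` class, the `signature` and `execute` functions, and the three stand-in node functions are hypothetical names invented for this illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Node:
    """One functional block: a function, its settings, and the names of its upstream nodes."""
    func: Callable
    params: tuple = ()
    inputs: tuple = ()


def signature(graph, name):
    """A node's signature covers its own settings plus everything upstream of it."""
    node = graph[name]
    upstream = tuple(signature(graph, dep) for dep in node.inputs)
    return (node.func.__name__, node.params, upstream)


def execute(graph, name, cache, ran):
    """Return the output of a node, re-running only nodes whose signature is not cached."""
    node = graph[name]
    sig = signature(graph, name)
    if sig not in cache:
        upstream = [execute(graph, dep, cache, ran) for dep in node.inputs]
        cache[sig] = node.func(*upstream, *node.params)
        ran.append(name)  # record which nodes actually did work
    return cache[sig]


# Hypothetical stand-ins for real nodes such as loaders, samplers, and decoders.
def load(seed):
    return seed * 10


def sample(latent, steps):
    return latent + steps


def decode(image):
    return f"image-{image}"


graph = {
    "load": Node(load, params=(4,)),
    "sample": Node(sample, params=(20,), inputs=("load",)),
    "decode": Node(decode, inputs=("sample",)),
}

cache = {}
first_run, second_run, third_run = [], [], []
execute(graph, "decode", cache, first_run)    # every node runs
execute(graph, "decode", cache, second_run)   # identical graph: nothing runs
graph["sample"] = Node(sample, params=(30,), inputs=("load",))
execute(graph, "decode", cache, third_run)    # only the changed node and its dependents run
print(first_run, second_run, third_run)
```

The design choice worth noticing is that a node's cache key includes the keys of its inputs, so changing one setting invalidates everything downstream of it while leaving upstream results untouched.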

Dragging a generated png onto the webpage or loading one will give you the full workflow, including seeds that were used to create it.
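To see why dragging a PNG can restore a workflow, it helps to look at how text can travel inside a PNG file. The sketch below uses only the Python standard library and assumes the workflow is stored as JSON in an uncompressed `tEXt` chunk under the keyword `workflow`; that keyword, the `read_png_text_chunks` and `make_chunk` helpers, and the demo workflow contents are assumptions made for illustration, so verify them against a file your own installation produced.

```python
import json
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data: bytes) -> dict:
    """Return {keyword: text} for every uncompressed tEXt chunk in a PNG byte string."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    chunks = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk starts with a 4-byte big-endian length and a 4-byte type.
        length, kind = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if kind == b"tEXt":
            keyword, _, text = body.partition(b"\x00")
            chunks[keyword.decode("latin-1")] = text.decode("latin-1")
        if kind == b"IEND":
            break
        pos += 8 + length + 4  # header + body + CRC
    return chunks


def make_chunk(kind: bytes, body: bytes) -> bytes:
    """Build one PNG chunk; used here only to create a tiny demo file in memory."""
    crc = zlib.crc32(kind + body) & 0xFFFFFFFF
    return struct.pack(">I", len(body)) + kind + body + struct.pack(">I", crc)


# Hypothetical workflow content; real workflows are much larger.
workflow = {"nodes": [{"id": 1, "type": "KSampler", "seed": 42}]}
demo_png = (
    PNG_SIGNATURE
    + make_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0))
    + make_chunk(b"tEXt", b"workflow\x00" + json.dumps(workflow).encode("latin-1"))
    + make_chunk(b"IDAT", zlib.compress(b"\x00\x00"))
    + make_chunk(b"IEND", b"")
)

recovered = json.loads(read_png_text_chunks(demo_png)["workflow"])
print(recovered["nodes"][0]["seed"])  # prints 42
```

For a real file, pass the bytes of the image instead of `demo_png`, for example by reading the file with `pathlib.Path(...).read_bytes()`.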

The option to run ComfyUI in a Jupyter notebook seems terrific.

ComfyUI is a highly efficient VRAM management tool. This repository provides instructions for setting up and using ComfyUI in a Python environment and Jupyter notebooks.

ComfyUI can significantly reduce the VRAM requirements for running models. For example, the original Stable Diffusion v1 at RunwayML's stable-diffusion repository requires at least 10GB VRAM. With ComfyUI, you can run it with as little as 1GB VRAM. Let's set up the environment and give it a try!

Installation

You will not be able to use ComfyUI to do anything useful unless a model is installed and ComfyUI is using the model. The instructions for installing and using models follow the installation instructions.

Assuming you are familiar with working with WSL/Ubuntu or native Ubuntu, this article should contain all the information you need to install, configure, and run ComfyUI on WSL/Ubuntu and native Ubuntu.

As usual, because I generally only run native Ubuntu and WSL2 Ubuntu, my notes on installation do not mention Macs or native Windows any more than necessary.

ComfyUI can be installed 3 ways, described below. Common to each installation method is that ComfyUI writes into the directory structure that it runs from. This is problematic from a security point of view, and increases the chances of problems with updating the program.

- Desktop Application
  - Simplest setup
  - Revisions might lag quite a bit
  - Native Windows and macOS only

- Windows Portable Version
  - Provides latest features
  - Native Windows only
  - Nvidia GPUs only

- Manual Install (Windows, Linux)
  - Provides latest features
  - Use this option if you want a nightly build, want to install into WSL, or have an AMD or Intel GPU

The official installation instructions are here. In some places they state that Python 3.12 is recommended and that there is partial support for Python 3.13, while in other places they reverse the recommendation. Ubuntu 25.04 (Plucky Puffin) defaults to Python 3.13. The final release of Python 3.14.0 is scheduled for Tuesday, October 7, 2025, and the final release of Python 3.15.0 is expected on Thursday, October 1, 2026. The current version of ComfyUI might not work on those versions, so be sure to update ComfyUI when new Python versions are released.
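Because the supported Python versions keep shifting, a small guard can flag an interpreter that falls outside the range you have personally verified. This is a minimal sketch: the `TESTED_RANGE` bounds are my assumption based on the two versions mentioned above, not an official compatibility statement, and `python_status` is a hypothetical helper name.

```python
import sys

# Assumption: inclusive (major, minor) bounds taken from the versions mentioned above.
TESTED_RANGE = ((3, 12), (3, 13))


def python_status(version=None, tested=TESTED_RANGE):
    """Classify a Python version as 'too old', 'ok', or 'untested' against the tested range."""
    if version is None:
        version = sys.version_info[:2]
    version = tuple(version[:2])  # ignore the patch level
    lowest, highest = tested
    if version < lowest:
        return "too old"
    if version > highest:
        return "untested"
    return "ok"


print(f"Python {sys.version_info.major}.{sys.version_info.minor}: {python_status()}")
```

Adjust `TESTED_RANGE` whenever you confirm that a newer interpreter works with your installation.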

Use --preview-method auto to enable previews. The default installation includes a fast latent preview method that's low-resolution. To enable higher-quality previews with TAESD (Tiny AutoEncoder for Stable Diffusion), download taesd_decoder.pth, taesdxl_decoder.pth, taesd3_decoder.pth and taef1_decoder.pth and place them in the models/vae_approx folder. Once they're installed, restart ComfyUI and launch it with --preview-method taesd.
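Before switching the preview method, it can be handy to confirm which decoder files are actually in place. The following sketch checks the folder named above; the helper names are hypothetical, and the rule that `taesd` is suggested only when all four files are present is a conservative assumption of mine rather than a ComfyUI requirement.

```python
import tempfile
from pathlib import Path

# Decoder file names listed above; the folder is relative to the ComfyUI directory.
TAESD_DECODERS = (
    "taesd_decoder.pth",
    "taesdxl_decoder.pth",
    "taesd3_decoder.pth",
    "taef1_decoder.pth",
)


def missing_taesd_decoders(comfyui_dir):
    """Return the decoder files not yet present in <comfyui_dir>/models/vae_approx."""
    folder = Path(comfyui_dir) / "models" / "vae_approx"
    return [name for name in TAESD_DECODERS if not (folder / name).is_file()]


def preview_method(comfyui_dir):
    """Suggest a --preview-method value: taesd only when every decoder is installed."""
    return "auto" if missing_taesd_decoders(comfyui_dir) else "taesd"


# Demonstration against a throwaway directory that holds only one decoder.
with tempfile.TemporaryDirectory() as demo_dir:
    folder = Path(demo_dir) / "models" / "vae_approx"
    folder.mkdir(parents=True)
    (folder / "taesd_decoder.pth").touch()
    print("Missing:", missing_taesd_decoders(demo_dir))
    print("Suggested --preview-method:", preview_method(demo_dir))
```

Point `missing_taesd_decoders` at your own ComfyUI directory to get the list of files still to download.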

Portable Version for Native Windows

The Windows Portable Version is stored in .7z format. I used 7-Zip to open the file. Because I wanted to store the Windows Portable Version in %ProgramFiles%, and writing to that directory requires administrator privilege, I had to launch 7-Zip as administrator.

- Press the Windows key, then type 7-zip.
- The option to Run as administrator will appear; click it.
- Provide the full path to your downloads directory in the URL input box.
- Right-click on the ComfyUI_windows_portable directory entry in 7-Zip.
- Press F5 or select Copy to..., then paste in C:\Program Files (Windows environment variables are not allowed here).
- A subdirectory called C:\Program Files\ComfyUI_windows_portable should now exist.
- I allowed all users to write into C:\Program Files\ComfyUI_windows_portable\ComfyUI by:
  - Right-clicking on the directory in File Explorer and selecting Properties
  - Selecting the Security tab
  - Clicking the Edit... button
  - Scrolling the Group or user names list until I could select Users
  - Setting Full control in the permissions list
  - Clicking OK twice.

The top-level files included three Windows scripts: run_cpu.bat, run_nvidia_gpu.bat, and run_nvidia_gpu_fast_fp16_accumulation.bat.

There was also a file called README_VERY_IMPORTANT.txt.

├── ComfyUI
│   ├── alembic_db
│   ├── api_server
│   ├── app
│   ├── comfy
│   ├── comfy_api
│   ├── comfy_api_nodes
│   ├── comfy_config
│   ├── comfy_execution
│   ├── comfy_extras
│   ├── custom_nodes
│   ├── input
│   ├── middleware
│   ├── models
│   ├── output
│   ├── script_examples
│   ├── tests
│   ├── tests-unit
│   └── utils
├── python_embeded
│   ├── Include
│   ├── Lib
│   ├── Scripts
│   └── share
└── update

HOW TO RUN:

if you have a NVIDIA gpu:

run_nvidia_gpu.bat

if you want to enable the fast fp16 accumulation (faster for fp16 models with slightly less quality):

run_nvidia_gpu_fast_fp16_accumulation.bat

To run it in slow CPU mode:

run_cpu.bat

IF YOU GET A RED ERROR IN THE UI MAKE SURE YOU HAVE A MODEL/CHECKPOINT IN: ComfyUI\models\checkpoints

You can download the stable diffusion 1.5 one from: https://huggingface.co/Comfy-Org/stable-diffusion-v1-5-archive/blob/main/v1-5-pruned-emaonly-fp16.safetensors

RECOMMENDED WAY TO UPDATE:

To update the ComfyUI code: update\update_comfyui.bat

To update ComfyUI with the python dependencies, note that you should ONLY run this if you have issues with python dependencies.

update\update_comfyui_and_python_dependencies.bat

TO SHARE MODELS BETWEEN COMFYUI AND ANOTHER UI:

In the ComfyUI directory you will find a file: extra_model_paths.yaml.example

Rename this file to: extra_model_paths.yaml and edit it with your favorite text editor.

I then double-clicked on C:\Program Files\ComfyUI_windows_portable\run_nvidia_gpu.bat and a CMD session opened in Windows Terminal. The web browser also opened the ComfyUI webapp.

C:\Program Files\ComfyUI_windows_portable>.\python_embeded\python.exe -s ComfyUI\main.py --windows-standalone-build
Checkpoint files will always be loaded safely.
Total VRAM 12288 MB, total RAM 65307 MB
pytorch version: 2.8.0+cu129
Set vram state to: NORMAL_VRAM
Device: cuda:0 NVIDIA GeForce RTX 3060 : cudaMallocAsync
Using pytorch attention
Python version: 3.13.6 (tags/v3.13.6:4e66535, Aug 6 2025, 14:36:00) [MSC v.1944 64 bit (AMD64)]
ComfyUI version: 0.3.59
****** User settings have been changed to be stored on the server instead of browser storage. ******
****** For multi-user setups add the --multi-user CLI argument to enable multiple user profiles. ******
ComfyUI frontend version: 1.25.11
[Prompt Server] web root: C:\Program Files\ComfyUI_windows_portable\python_embeded\Lib\site-packages\comfyui_frontend_package\static

Import times for custom nodes:
   0.0 seconds: C:\Program Files\ComfyUI_windows_portable\ComfyUI\custom_nodes\websocket_image_save.py

Context impl SQLiteImpl.
Will assume non-transactional DDL.
No target revision found.
Starting server

To see the GUI go to: http://127.0.0.1:8188
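If the browser does not open by itself, you can check whether the server is accepting connections at the address reported at the end of the log. This is a minimal sketch using only the standard library; `server_is_listening` is a hypothetical helper, and it tests nothing more than whether a TCP connection to the port succeeds.

```python
import socket


def server_is_listening(host="127.0.0.1", port=8188, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


print("ComfyUI reachable:", server_is_listening())
```

A `True` result only shows that something is listening on the port; it does not prove that the listener is ComfyUI or that a model has been loaded.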

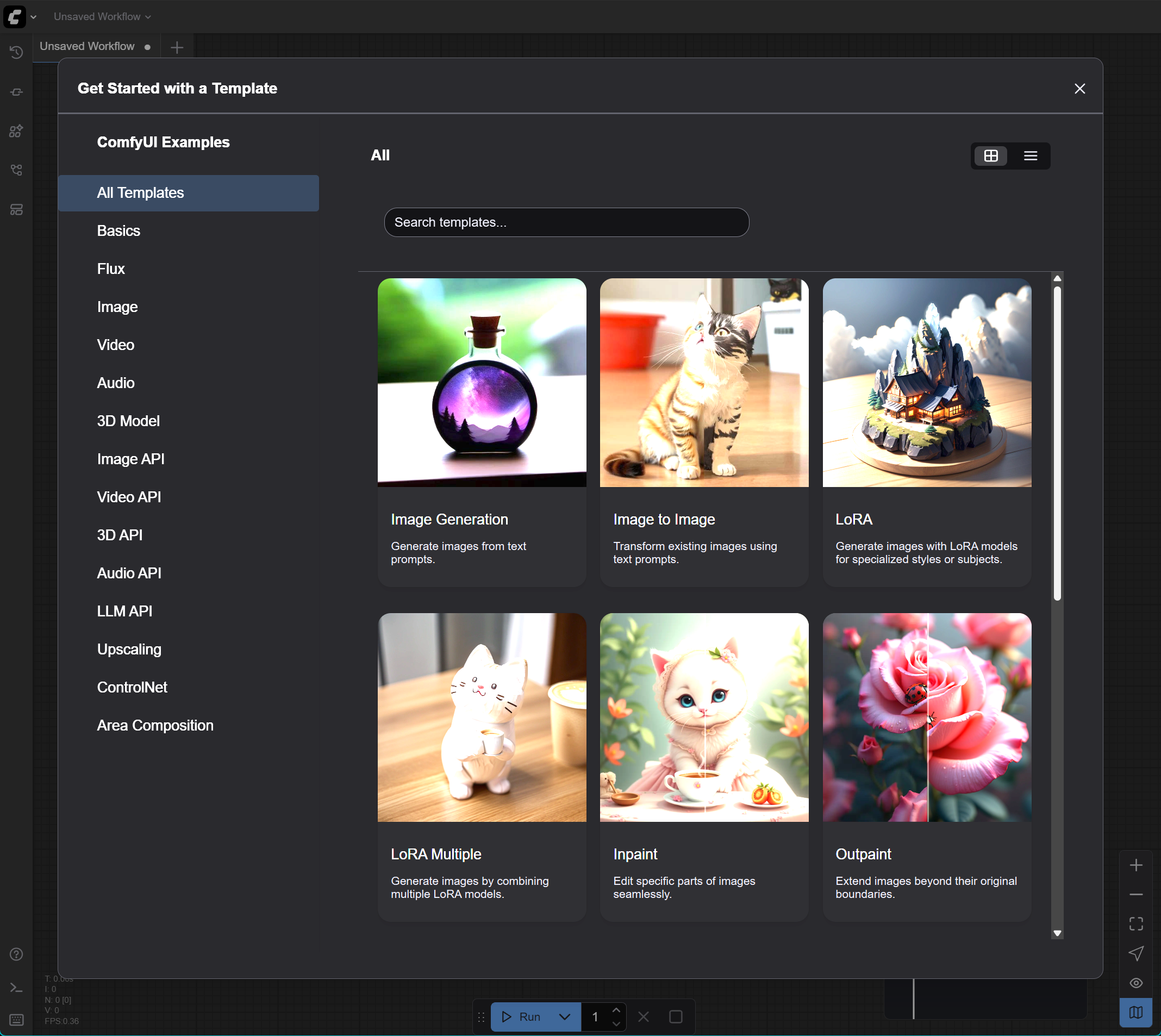

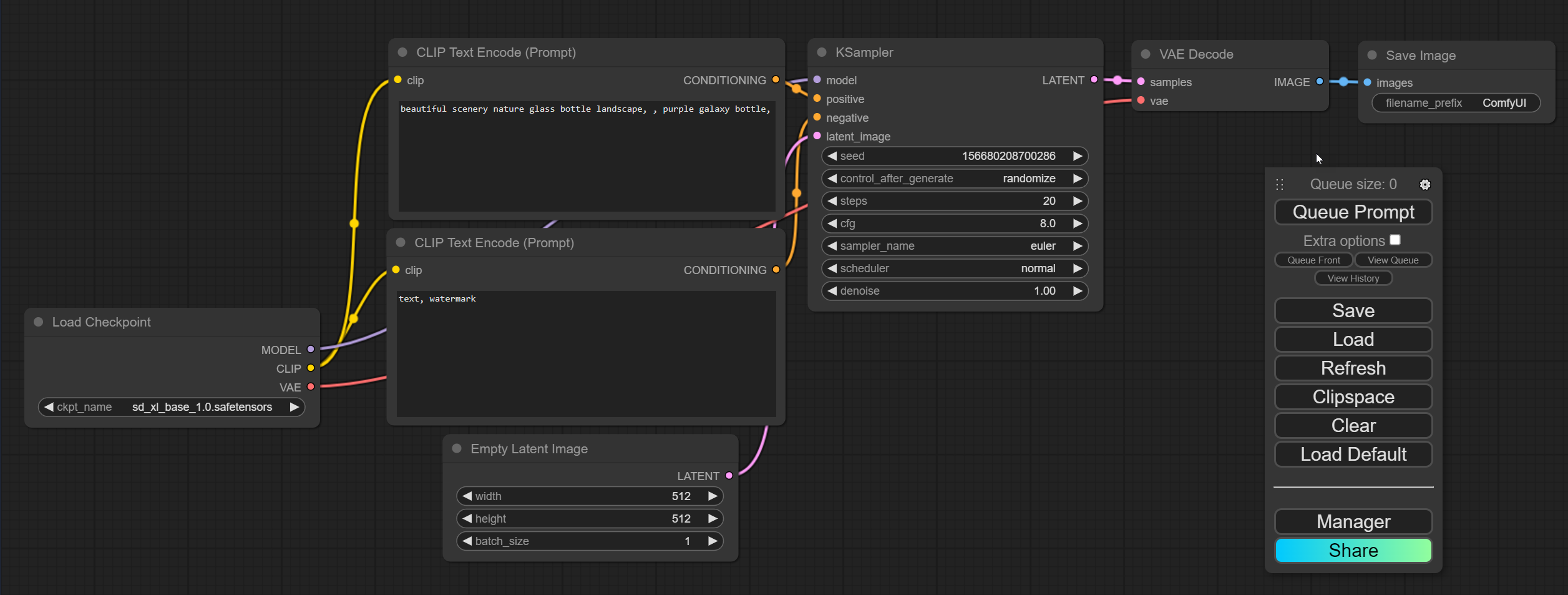

The GUI looks like this:

Just a reminder that you will not be able to use ComfyUI to do anything useful unless a model is installed and ComfyUI is using the model. The instructions for installing and using models follow the installation instructions.

Custom Installation

This section describes how I performed a custom installation on WSL.

Sibling Directories

I installed ComfyUI next to stable-diffusion-webui. The instructions in this article assume that you will do the same. Both of these user interfaces are changing rapidly, from week to week. You will likely need to be able to switch between them. Sharing data between them is not only possible but is encouraged.

I defined an environment variable called llm, which points to the directory root of all my LLM-related programs. You could do the same by adding a line like the following in ~/.bashrc:

export llm=/mnt/f/work/llm

Activate the variable definition in the current terminal session by typing:

$ source ~/.bashrc

After I installed ComfyUI, stable-diffusion-webui, and some other programs that I am experimenting with, the $llm directory had the following contents (your computer probably does not have all of these directories):

$ tree -dL 1 $llm
/mnt/f/work/llm
├── ComfyUI
├── Fooocus
├── ollama-webui
└── stable-diffusion-webui
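The same check can be made from Python, which is convenient if you later script your setup. This sketch reads the llm environment variable described above and lists the directories directly beneath it, roughly what `tree -dL 1` shows; the function names are hypothetical, and the demonstration uses a temporary directory rather than a real installation.

```python
import os
import tempfile
from pathlib import Path


def llm_subdirectories(root=None):
    """Return the sorted names of directories directly under root (default: $llm)."""
    root = root or os.environ.get("llm")
    if not root:
        raise RuntimeError("the llm environment variable is not set")
    return sorted(p.name for p in Path(root).iterdir() if p.is_dir())


def are_siblings(root=None, names=("ComfyUI", "stable-diffusion-webui")):
    """Return True when every named directory exists side by side under root."""
    present = set(llm_subdirectories(root))
    return all(name in present for name in names)


# Demonstration with a throwaway directory standing in for $llm.
with tempfile.TemporaryDirectory() as demo_root:
    for name in ("ComfyUI", "stable-diffusion-webui"):
        (Path(demo_root) / name).mkdir()
    print(llm_subdirectories(demo_root))
    print(are_siblings(demo_root))
```

Calling `are_siblings()` with no arguments uses the value of `$llm` from the current environment.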

At this point, you just need to make the directory that will hold ComfyUI the current directory. That is the directory containing stable-diffusion-webui, which is $llm on my computer.

After following these instructions, your computer should have a ComfyUI directory next to stable-diffusion-webui.

Git Clone ComfyUI

$ cd $llm # Change to the directory where my LLM projects live

$ git clone https://github.com/comfyanonymous/ComfyUI
Cloning into 'ComfyUI'...
remote: Enumerating objects: 9623, done.
remote: Counting objects: 100% (2935/2935), done.
remote: Compressing objects: 100% (231/231), done.
remote: Total 9623 (delta 2775), reused 2709 (delta 2704), pack-reused 6688
Receiving objects: 100% (9623/9623), 3.79 MiB | 1.50 MiB/s, done.
Resolving deltas: 100% (6510/6510), done.

$ cd ComfyUI

Let's take a look at the directories that got installed within the new ComfyUI directory:

$ tree -d -L 2
.
├── input
├── user
│   └── default
├── output
├── venv
│   ├── include
│   ├── lib
│   ├── lib64 -> lib
│   ├── share
│   └── bin
├── __pycache__
├── api_server
│   ├── routes
│   ├── utils
│   └── services
├── custom_nodes
│   ├── ComfyUI-Manager
│   └── __pycache__
├── alembic_db
├── utils
├── comfy_config
├── comfy_api
│   ├── torch_helpers
│   ├── input
│   ├── input_impl
│   ├── internal
│   ├── util
│   ├── v0_0_1
│   ├── v0_0_2
│   └── latest
├── app
│   ├── __pycache__
│   └── database
├── comfy_execution
├── middleware
├── models
│   ├── checkpoints
│   ├── clip
│   ├── clip_vision
│   ├── configs
│   ├── controlnet
│   ├── diffusers
│   ├── embeddings
│   ├── gligen
│   ├── hypernetworks
│   ├── loras
│   ├── style_models
│   ├── unet
│   ├── upscale_models
│   ├── vae
│   ├── vae_approx
│   ├── photomaker
│   ├── diffusion_models
│   ├── text_encoders
│   ├── model_patches
│   └── audio_encoders
├── script_examples
├── tests
│   ├── compare
│   ├── inference
│   └── execution
├── tests-unit
│   ├── execution_test
│   ├── comfy_test
│   ├── server
│   ├── utils
│   ├── comfy_api_nodes_test
│   ├── comfy_api_test
│   ├── comfy_extras_test
│   ├── folder_paths_test
│   ├── prompt_server_test
│   ├── app_test
│   └── server_test
├── comfy
│   ├── __pycache__
│   ├── taesd
│   ├── t2i_adapter
│   ├── cldm
│   ├── extra_samplers
│   ├── comfy_types
│   ├── sd1_tokenizer
│   ├── image_encoders
│   ├── ldm
│   ├── k_diffusion
│   ├── audio_encoders
│   ├── text_encoders
│   └── weight_adapter
├── comfy_api_nodes
│   ├── util
│   └── apis
└── comfy_extras
    ├── __pycache__
    └── chainner_models

94 directories

Python Virtual Environment

I created a Python virtual environment (venv) for ComfyUI, inside the ComfyUI directory that was created by git clone. You should too. The .gitignore file already has an entry for venv, so use that name, as shown below. For some reason the Mac manual installation instructions guide the reader through the process of doing this, but the Windows installation instructions make no mention of Python virtual environments. It is easy to make a venv, and you definitely should do so:

$ python3 -m venv venv

$ source venv/bin/activate
(venv) $

Notice how the shell prompt changed after the virtual environment was activated. This virtual environment should always be activated before running ComfyUI. In a moment we will ensure this happens through configuration.
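Besides watching the prompt, you can ask Python itself whether a virtual environment is active. The sketch below relies on the documented behavior that `sys.prefix` and `sys.base_prefix` differ inside a venv; the `in_virtualenv` helper and the wording of the warning are my own illustration.

```python
import sys


def in_virtualenv(prefix=None, base_prefix=None):
    """Return True when the interpreter is running inside a venv.

    Inside a venv, sys.prefix points at the venv directory while
    sys.base_prefix still points at the Python installation it was created from.
    """
    prefix = sys.prefix if prefix is None else prefix
    base_prefix = sys.base_prefix if base_prefix is None else base_prefix
    return prefix != base_prefix


if not in_virtualenv():
    print("Warning: no virtual environment is active; run 'source venv/bin/activate' first.")
```

The optional arguments exist only so the function can be exercised without switching interpreters.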

Install Dependent Python Libraries

Now that a venv was in place for ComfyUI, I followed the manual installation instructions for installing dependent Python libraries. These libraries will be stored within the virtual environment, which avoids version conflicts with the Python libraries that other projects depend on.

$ pip install -r requirements.txt Collecting comfyui-frontend-package==1.26.13 (from -r requirements.txt (line 1)) Downloading comfyui_frontend_package-1.26.13-py3-none-any.whl.metadata (118 bytes) Collecting comfyui-workflow-templates==0.1.81 (from -r requirements.txt (line 2)) Downloading comfyui_workflow_templates-0.1.81-py3-none-any.whl.metadata (55 kB) Collecting comfyui-embedded-docs==0.2.6 (from -r requirements.txt (line 3)) Downloading comfyui_embedded_docs-0.2.6-py3-none-any.whl.metadata (2.9 kB) Collecting torch (from -r requirements.txt (line 4)) Downloading torch-2.8.0-cp313-cp313-manylinux_2_28_x86_64.whl.metadata (30 kB) Collecting torchsde (from -r requirements.txt (line 5)) Downloading torchsde-0.2.6-py3-none-any.whl.metadata (5.3 kB) Collecting torchvision (from -r requirements.txt (line 6)) Downloading torchvision-0.23.0-cp313-cp313-manylinux_2_28_x86_64.whl.metadata (6.1 kB) Collecting torchaudio (from -r requirements.txt (line 7)) Downloading torchaudio-2.8.0-cp313-cp313-manylinux_2_28_x86_64.whl.metadata (7.2 kB) Collecting numpy>=1.25.0 (from -r requirements.txt (line 8)) Downloading numpy-2.3.3-cp313-cp313-manylinux_2_27_x86_64.manylinux_2_28_x86_64.whl.metadata (62 kB) Collecting einops (from -r requirements.txt (line 9)) Downloading einops-0.8.1-py3-none-any.whl.metadata (13 kB) Collecting transformers>=4.37.2 (from -r requirements.txt (line 10)) Downloading transformers-4.56.2-py3-none-any.whl.metadata (40 kB) Collecting tokenizers>=0.13.3 (from -r requirements.txt (line 11)) Downloading tokenizers-0.22.1-cp39-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (6.8 kB) Collecting sentencepiece (from -r requirements.txt (line 12)) Downloading sentencepiece-0.2.1-cp313-cp313-manylinux_2_27_x86_64.manylinux_2_28_x86_64.whl.metadata (10 kB) Collecting safetensors>=0.4.2 (from -r requirements.txt (line 13)) Downloading safetensors-0.6.2-cp38-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (4.1 kB) Collecting 
aiohttp>=3.11.8 (from -r requirements.txt (line 14)) Downloading aiohttp-3.12.15-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (7.7 kB) Collecting yarl>=1.18.0 (from -r requirements.txt (line 15)) Downloading yarl-1.20.1-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (73 kB) Requirement already satisfied: pyyaml in /home/mslinn/venv/default/lib/python3.13/site-packages (from -r requirements.txt (line 16)) (6.0.2) Requirement already satisfied: Pillow in /home/mslinn/venv/default/lib/python3.13/site-packages (from -r requirements.txt (line 17)) (11.3.0) Collecting scipy (from -r requirements.txt (line 18)) Downloading scipy-1.16.2-cp313-cp313-manylinux2014_x86_64.manylinux_2_17_x86_64.whl.metadata (62 kB) Collecting tqdm (from -r requirements.txt (line 19)) Using cached tqdm-4.67.1-py3-none-any.whl.metadata (57 kB) Collecting psutil (from -r requirements.txt (line 20)) Downloading psutil-7.1.0-cp36-abi3-manylinux_2_12_x86_64.manylinux2010_x86_64.manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (23 kB) Collecting alembic (from -r requirements.txt (line 21)) Downloading alembic-1.16.5-py3-none-any.whl.metadata (7.3 kB) Collecting SQLAlchemy (from -r requirements.txt (line 22)) Downloading sqlalchemy-2.0.43-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (9.6 kB) Collecting av>=14.2.0 (from -r requirements.txt (line 23)) Downloading av-15.1.0-cp313-cp313-manylinux_2_28_x86_64.whl.metadata (4.6 kB) Collecting kornia>=0.7.1 (from -r requirements.txt (line 26)) Downloading kornia-0.8.1-py2.py3-none-any.whl.metadata (17 kB) Collecting spandrel (from -r requirements.txt (line 27)) Downloading spandrel-0.4.1-py3-none-any.whl.metadata (15 kB) Collecting soundfile (from -r requirements.txt (line 28)) Downloading soundfile-0.13.1-py2.py3-none-manylinux_2_28_x86_64.whl.metadata (16 kB) Requirement already satisfied: pydantic~=2.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from -r 
requirements.txt (line 29)) (2.11.9) Requirement already satisfied: pydantic-settings~=2.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from -r requirements.txt (line 30)) (2.10.1) Requirement already satisfied: annotated-types>=0.6.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from pydantic~=2.0->-r requirements.txt (line 29)) (0.7.0) Requirement already satisfied: pydantic-core==2.33.2 in /home/mslinn/venv/default/lib/python3.13/site-packages (from pydantic~=2.0->-r requirements.txt (line 29)) (2.33.2) Requirement already satisfied: typing-extensions>=4.12.2 in /home/mslinn/venv/default/lib/python3.13/site-packages (from pydantic~=2.0->-r requirements.txt (line 29)) (4.15.0) Requirement already satisfied: typing-inspection>=0.4.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from pydantic~=2.0->-r requirements.txt (line 29)) (0.4.1) Requirement already satisfied: python-dotenv>=0.21.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from pydantic-settings~=2.0->-r requirements.txt (line 30)) (1.1.1) Collecting filelock (from torch->-r requirements.txt (line 4)) Downloading filelock-3.19.1-py3-none-any.whl.metadata (2.1 kB) Collecting setuptools (from torch->-r requirements.txt (line 4)) Using cached setuptools-80.9.0-py3-none-any.whl.metadata (6.6 kB) Collecting sympy>=1.13.3 (from torch->-r requirements.txt (line 4)) Using cached sympy-1.14.0-py3-none-any.whl.metadata (12 kB) Collecting networkx (from torch->-r requirements.txt (line 4)) Downloading networkx-3.5-py3-none-any.whl.metadata (6.3 kB) Collecting jinja2 (from torch->-r requirements.txt (line 4)) Using cached jinja2-3.1.6-py3-none-any.whl.metadata (2.9 kB) Collecting fsspec (from torch->-r requirements.txt (line 4)) Downloading fsspec-2025.9.0-py3-none-any.whl.metadata (10 kB) Collecting nvidia-cuda-nvrtc-cu12==12.8.93 (from torch->-r requirements.txt (line 4)) Using cached 
nvidia_cuda_nvrtc_cu12-12.8.93-py3-none-manylinux2010_x86_64.manylinux_2_12_x86_64.whl.metadata (1.7 kB) Collecting nvidia-cuda-runtime-cu12==12.8.90 (from torch->-r requirements.txt (line 4)) Using cached nvidia_cuda_runtime_cu12-12.8.90-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl.metadata (1.7 kB) Collecting nvidia-cuda-cupti-cu12==12.8.90 (from torch->-r requirements.txt (line 4)) Using cached nvidia_cuda_cupti_cu12-12.8.90-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl.metadata (1.7 kB) Collecting nvidia-cudnn-cu12==9.10.2.21 (from torch->-r requirements.txt (line 4)) Downloading nvidia_cudnn_cu12-9.10.2.21-py3-none-manylinux_2_27_x86_64.whl.metadata (1.8 kB) Collecting nvidia-cublas-cu12==12.8.4.1 (from torch->-r requirements.txt (line 4)) Using cached nvidia_cublas_cu12-12.8.4.1-py3-none-manylinux_2_27_x86_64.whl.metadata (1.7 kB) Collecting nvidia-cufft-cu12==11.3.3.83 (from torch->-r requirements.txt (line 4)) Using cached nvidia_cufft_cu12-11.3.3.83-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl.metadata (1.7 kB) Collecting nvidia-curand-cu12==10.3.9.90 (from torch->-r requirements.txt (line 4)) Using cached nvidia_curand_cu12-10.3.9.90-py3-none-manylinux_2_27_x86_64.whl.metadata (1.7 kB) Collecting nvidia-cusolver-cu12==11.7.3.90 (from torch->-r requirements.txt (line 4)) Using cached nvidia_cusolver_cu12-11.7.3.90-py3-none-manylinux_2_27_x86_64.whl.metadata (1.8 kB) Collecting nvidia-cusparse-cu12==12.5.8.93 (from torch->-r requirements.txt (line 4)) Using cached nvidia_cusparse_cu12-12.5.8.93-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl.metadata (1.8 kB) Collecting nvidia-cusparselt-cu12==0.7.1 (from torch->-r requirements.txt (line 4)) Using cached nvidia_cusparselt_cu12-0.7.1-py3-none-manylinux2014_x86_64.whl.metadata (7.0 kB) Collecting nvidia-nccl-cu12==2.27.3 (from torch->-r requirements.txt (line 4)) Downloading nvidia_nccl_cu12-2.27.3-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl.metadata (2.0 
kB) Collecting nvidia-nvtx-cu12==12.8.90 (from torch->-r requirements.txt (line 4)) Using cached nvidia_nvtx_cu12-12.8.90-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl.metadata (1.8 kB) Collecting nvidia-nvjitlink-cu12==12.8.93 (from torch->-r requirements.txt (line 4)) Using cached nvidia_nvjitlink_cu12-12.8.93-py3-none-manylinux2010_x86_64.manylinux_2_12_x86_64.whl.metadata (1.7 kB) Collecting nvidia-cufile-cu12==1.13.1.3 (from torch->-r requirements.txt (line 4)) Downloading nvidia_cufile_cu12-1.13.1.3-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl.metadata (1.7 kB) Collecting triton==3.4.0 (from torch->-r requirements.txt (line 4)) Downloading triton-3.4.0-cp313-cp313-manylinux_2_27_x86_64.manylinux_2_28_x86_64.whl.metadata (1.7 kB) Collecting trampoline>=0.1.2 (from torchsde->-r requirements.txt (line 5)) Downloading trampoline-0.1.2-py3-none-any.whl.metadata (10 kB) Collecting huggingface-hub<1.0,>=0.34.0 (from transformers>=4.37.2->-r requirements.txt (line 10)) Downloading huggingface_hub-0.35.0-py3-none-any.whl.metadata (14 kB) Requirement already satisfied: packaging>=20.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from transformers>=4.37.2->-r requirements.txt (line 10)) (25.0) Collecting regex!=2019.12.17 (from transformers>=4.37.2->-r requirements.txt (line 10)) Downloading regex-2025.9.18-cp313-cp313-manylinux2014_x86_64.manylinux_2_17_x86_64.manylinux_2_28_x86_64.whl.metadata (40 kB) Requirement already satisfied: requests in /home/mslinn/venv/default/lib/python3.13/site-packages (from transformers>=4.37.2->-r requirements.txt (line 10)) (2.32.5) Collecting hf-xet<2.0.0,>=1.1.3 (from huggingface-hub<1.0,>=0.34.0->transformers>=4.37.2->-r requirements.txt (line 10)) Downloading hf_xet-1.1.10-cp37-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (4.7 kB) Collecting aiohappyeyeballs>=2.5.0 (from aiohttp>=3.11.8->-r requirements.txt (line 14)) Downloading aiohappyeyeballs-2.6.1-py3-none-any.whl.metadata (5.9 
kB) Collecting aiosignal>=1.4.0 (from aiohttp>=3.11.8->-r requirements.txt (line 14)) Downloading aiosignal-1.4.0-py3-none-any.whl.metadata (3.7 kB) Requirement already satisfied: attrs>=17.3.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from aiohttp>=3.11.8->-r requirements.txt (line 14)) (25.3.0) Collecting frozenlist>=1.1.1 (from aiohttp>=3.11.8->-r requirements.txt (line 14)) Downloading frozenlist-1.7.0-cp313-cp313-manylinux_2_5_x86_64.manylinux1_x86_64.manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (18 kB) Collecting multidict<7.0,>=4.5 (from aiohttp>=3.11.8->-r requirements.txt (line 14)) Downloading multidict-6.6.4-cp313-cp313-manylinux2014_x86_64.manylinux_2_17_x86_64.manylinux_2_28_x86_64.whl.metadata (5.3 kB) Collecting propcache>=0.2.0 (from aiohttp>=3.11.8->-r requirements.txt (line 14)) Downloading propcache-0.3.2-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (12 kB) Requirement already satisfied: idna>=2.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from yarl>=1.18.0->-r requirements.txt (line 15)) (3.10) Collecting Mako (from alembic->-r requirements.txt (line 21)) Downloading mako-1.3.10-py3-none-any.whl.metadata (2.9 kB) Collecting greenlet>=1 (from SQLAlchemy->-r requirements.txt (line 22)) Downloading greenlet-3.2.4-cp313-cp313-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl.metadata (4.1 kB) Collecting kornia_rs>=0.1.9 (from kornia>=0.7.1->-r requirements.txt (line 26)) Downloading kornia_rs-0.1.9-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.metadata (11 kB) Requirement already satisfied: cffi>=1.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from soundfile->-r requirements.txt (line 28)) (2.0.0) Requirement already satisfied: pycparser in /home/mslinn/venv/default/lib/python3.13/site-packages (from cffi>=1.0->soundfile->-r requirements.txt (line 28)) (2.23) Collecting mpmath<1.4,>=1.1.0 (from sympy>=1.13.3->torch->-r requirements.txt (line 4)) Downloading 
mpmath-1.3.0-py3-none-any.whl.metadata (8.6 kB) Requirement already satisfied: MarkupSafe>=2.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from jinja2->torch->-r requirements.txt (line 4)) (3.0.2) Requirement already satisfied: charset_normalizer<4,>=2 in /home/mslinn/venv/default/lib/python3.13/site-packages (from requests->transformers>=4.37.2->-r requirements.txt (line 10)) (3.4.3) Requirement already satisfied: urllib3<3,>=1.21.1 in /home/mslinn/venv/default/lib/python3.13/site-packages (from requests->transformers>=4.37.2->-r requirements.txt (line 10)) (2.5.0) Requirement already satisfied: certifi>=2017.4.17 in /home/mslinn/venv/default/lib/python3.13/site-packages (from requests->transformers>=4.37.2->-r requirements.txt (line 10)) (2025.8.3) Downloading comfyui_frontend_package-1.26.13-py3-none-any.whl (9.8 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 9.8/9.8 MB 42.1 MB/s 0:00:00 Downloading comfyui_workflow_templates-0.1.81-py3-none-any.whl (77.1 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 77.1/77.1 MB 45.8 MB/s 0:00:01 Downloading comfyui_embedded_docs-0.2.6-py3-none-any.whl (3.5 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 3.5/3.5 MB 55.0 MB/s 0:00:00 Downloading torch-2.8.0-cp313-cp313-manylinux_2_28_x86_64.whl (887.9 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 887.9/887.9 MB 40.2 MB/s 0:00:18 Using cached nvidia_cublas_cu12-12.8.4.1-py3-none-manylinux_2_27_x86_64.whl (594.3 MB) Using cached nvidia_cuda_cupti_cu12-12.8.90-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl (10.2 MB) Using cached nvidia_cuda_nvrtc_cu12-12.8.93-py3-none-manylinux2010_x86_64.manylinux_2_12_x86_64.whl (88.0 MB) Using cached nvidia_cuda_runtime_cu12-12.8.90-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl (954 kB) Downloading nvidia_cudnn_cu12-9.10.2.21-py3-none-manylinux_2_27_x86_64.whl (706.8 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 706.8/706.8 MB 42.8 MB/s 0:00:14 Using cached 
nvidia_cufft_cu12-11.3.3.83-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl (193.1 MB) Downloading nvidia_cufile_cu12-1.13.1.3-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl (1.2 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.2/1.2 MB 36.4 MB/s 0:00:00 Using cached nvidia_curand_cu12-10.3.9.90-py3-none-manylinux_2_27_x86_64.whl (63.6 MB) Using cached nvidia_cusolver_cu12-11.7.3.90-py3-none-manylinux_2_27_x86_64.whl (267.5 MB) Using cached nvidia_cusparse_cu12-12.5.8.93-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl (288.2 MB) Using cached nvidia_cusparselt_cu12-0.7.1-py3-none-manylinux2014_x86_64.whl (287.2 MB) Downloading nvidia_nccl_cu12-2.27.3-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl (322.4 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 322.4/322.4 MB 46.8 MB/s 0:00:07 Using cached nvidia_nvjitlink_cu12-12.8.93-py3-none-manylinux2010_x86_64.manylinux_2_12_x86_64.whl (39.3 MB) Using cached nvidia_nvtx_cu12-12.8.90-py3-none-manylinux2014_x86_64.manylinux_2_17_x86_64.whl (89 kB) Downloading triton-3.4.0-cp313-cp313-manylinux_2_27_x86_64.manylinux_2_28_x86_64.whl (155.6 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 155.6/155.6 MB 48.5 MB/s 0:00:03 Downloading torchsde-0.2.6-py3-none-any.whl (61 kB) Downloading torchvision-0.23.0-cp313-cp313-manylinux_2_28_x86_64.whl (8.6 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 8.6/8.6 MB 45.5 MB/s 0:00:00 Downloading torchaudio-2.8.0-cp313-cp313-manylinux_2_28_x86_64.whl (4.0 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 4.0/4.0 MB 46.5 MB/s 0:00:00 Downloading numpy-2.3.3-cp313-cp313-manylinux_2_27_x86_64.manylinux_2_28_x86_64.whl (16.6 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 16.6/16.6 MB 48.8 MB/s 0:00:00 Downloading einops-0.8.1-py3-none-any.whl (64 kB) Downloading transformers-4.56.2-py3-none-any.whl (11.6 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 11.6/11.6 MB 49.9 MB/s 0:00:00 Downloading tokenizers-0.22.1-cp39-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (3.3 MB) 
━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 3.3/3.3 MB 42.1 MB/s 0:00:00 Downloading huggingface_hub-0.35.0-py3-none-any.whl (563 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 563.4/563.4 kB 22.6 MB/s 0:00:00 Downloading hf_xet-1.1.10-cp37-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (3.2 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 3.2/3.2 MB 44.2 MB/s 0:00:00 Downloading sentencepiece-0.2.1-cp313-cp313-manylinux_2_27_x86_64.manylinux_2_28_x86_64.whl (1.4 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.4/1.4 MB 40.2 MB/s 0:00:00 Downloading safetensors-0.6.2-cp38-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (485 kB) Downloading aiohttp-3.12.15-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (1.7 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.7/1.7 MB 45.8 MB/s 0:00:00 Downloading yarl-1.20.1-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (352 kB) Downloading multidict-6.6.4-cp313-cp313-manylinux2014_x86_64.manylinux_2_17_x86_64.manylinux_2_28_x86_64.whl (254 kB) Downloading scipy-1.16.2-cp313-cp313-manylinux2014_x86_64.manylinux_2_17_x86_64.whl (35.7 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 35.7/35.7 MB 45.5 MB/s 0:00:00 Using cached tqdm-4.67.1-py3-none-any.whl (78 kB) Downloading psutil-7.1.0-cp36-abi3-manylinux_2_12_x86_64.manylinux2010_x86_64.manylinux_2_17_x86_64.manylinux2014_x86_64.whl (291 kB) Downloading alembic-1.16.5-py3-none-any.whl (247 kB) Downloading sqlalchemy-2.0.43-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (3.3 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 3.3/3.3 MB 41.0 MB/s 0:00:00 Downloading av-15.1.0-cp313-cp313-manylinux_2_28_x86_64.whl (39.6 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 39.6/39.6 MB 45.4 MB/s 0:00:00 Downloading kornia-0.8.1-py2.py3-none-any.whl (1.1 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.1/1.1 MB 30.5 MB/s 0:00:00 Downloading spandrel-0.4.1-py3-none-any.whl (305 kB) Downloading soundfile-0.13.1-py2.py3-none-manylinux_2_28_x86_64.whl (1.3 MB) 
━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.3/1.3 MB 34.0 MB/s 0:00:00 Downloading aiohappyeyeballs-2.6.1-py3-none-any.whl (15 kB) Downloading aiosignal-1.4.0-py3-none-any.whl (7.5 kB) Downloading frozenlist-1.7.0-cp313-cp313-manylinux_2_5_x86_64.manylinux1_x86_64.manylinux_2_17_x86_64.manylinux2014_x86_64.whl (232 kB) Downloading fsspec-2025.9.0-py3-none-any.whl (199 kB) Downloading greenlet-3.2.4-cp313-cp313-manylinux_2_24_x86_64.manylinux_2_28_x86_64.whl (610 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 610.5/610.5 kB 25.6 MB/s 0:00:00 Downloading kornia_rs-0.1.9-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (2.8 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 2.8/2.8 MB 49.1 MB/s 0:00:00 Downloading propcache-0.3.2-cp313-cp313-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (206 kB) Downloading regex-2025.9.18-cp313-cp313-manylinux2014_x86_64.manylinux_2_17_x86_64.manylinux_2_28_x86_64.whl (802 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 802.1/802.1 kB 33.4 MB/s 0:00:00 Using cached setuptools-80.9.0-py3-none-any.whl (1.2 MB) Using cached sympy-1.14.0-py3-none-any.whl (6.3 MB) Downloading mpmath-1.3.0-py3-none-any.whl (536 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 536.2/536.2 kB 27.8 MB/s 0:00:00 Downloading trampoline-0.1.2-py3-none-any.whl (5.2 kB) Downloading filelock-3.19.1-py3-none-any.whl (15 kB) Using cached jinja2-3.1.6-py3-none-any.whl (134 kB) Downloading mako-1.3.10-py3-none-any.whl (78 kB) Downloading networkx-3.5-py3-none-any.whl (2.0 MB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 2.0/2.0 MB 45.2 MB/s 0:00:00 Installing collected packages: trampoline, nvidia-cusparselt-cu12, mpmath, tqdm, sympy, setuptools, sentencepiece, safetensors, regex, psutil, propcache, nvidia-nvtx-cu12, nvidia-nvjitlink-cu12, nvidia-nccl-cu12, nvidia-curand-cu12, nvidia-cufile-cu12, nvidia-cuda-runtime-cu12, nvidia-cuda-nvrtc-cu12, nvidia-cuda-cupti-cu12, nvidia-cublas-cu12, numpy, networkx, multidict, Mako, kornia_rs, jinja2, hf-xet, greenlet, fsspec, frozenlist, 
filelock, einops, comfyui-workflow-templates, comfyui-frontend-package, comfyui-embedded-docs, av, aiohappyeyeballs, yarl, triton, SQLAlchemy, soundfile, scipy, nvidia-cusparse-cu12, nvidia-cufft-cu12, nvidia-cudnn-cu12, huggingface-hub, aiosignal, tokenizers, nvidia-cusolver-cu12, alembic, aiohttp, transformers, torch, torchvision, torchsde, torchaudio, kornia, spandrel Successfully installed Mako-1.3.10 SQLAlchemy-2.0.43 aiohappyeyeballs-2.6.1 aiohttp-3.12.15 aiosignal-1.4.0 alembic-1.16.5 av-15.1.0 comfyui-embedded-docs-0.2.6 comfyui-frontend-package-1.26.13 comfyui-workflow-templates-0.1.81 einops-0.8.1 filelock-3.19.1 frozenlist-1.7.0 fsspec-2025.9.0 greenlet-3.2.4 hf-xet-1.1.10 huggingface-hub-0.35.0 jinja2-3.1.6 kornia-0.8.1 kornia_rs-0.1.9 mpmath-1.3.0 multidict-6.6.4 networkx-3.5 numpy-2.3.3 nvidia-cublas-cu12-12.8.4.1 nvidia-cuda-cupti-cu12-12.8.90 nvidia-cuda-nvrtc-cu12-12.8.93 nvidia-cuda-runtime-cu12-12.8.90 nvidia-cudnn-cu12-9.10.2.21 nvidia-cufft-cu12-11.3.3.83 nvidia-cufile-cu12-1.13.1.3 nvidia-curand-cu12-10.3.9.90 nvidia-cusolver-cu12-11.7.3.90 nvidia-cusparse-cu12-12.5.8.93 nvidia-cusparselt-cu12-0.7.1 nvidia-nccl-cu12-2.27.3 nvidia-nvjitlink-cu12-12.8.93 nvidia-nvtx-cu12-12.8.90 propcache-0.3.2 psutil-7.1.0 regex-2025.9.18 safetensors-0.6.2 scipy-1.16.2 sentencepiece-0.2.1 setuptools-80.9.0 soundfile-0.13.1 spandrel-0.4.1 sympy-1.14.0 tokenizers-0.22.1 torch-2.8.0 torchaudio-2.8.0 torchsde-0.2.6 torchvision-0.23.0 tqdm-4.67.1 trampoline-0.1.2 transformers-4.56.2 triton-3.4.0 yarl-1.20.

The application can be started now:

$ python main.py
Adding extra search path checkpoints /mnt/f/work/llm/stable-diffusion/stable-diffusion-webui/models/Stable-diffusion
Adding extra search path configs /mnt/f/work/llm/stable-diffusion/stable-diffusion-webui/models/Stable-diffusion
Adding extra search path vae /mnt/f/work/llm/stable-diffusion/stable-diffusion-webui/models/VAE
Adding extra search path loras /mnt/f/work/llm/stable-diffusion/stable-diffusion-webui/models/Lora
Adding extra search path loras /mnt/f/work/llm/stable-diffusion/stable-diffusion-webui/models/LyCORIS
Adding extra search path upscale_models /mnt/f/work/llm/stable-diffusion/stable-diffusion-webui/models/ESRGAN
Adding extra search path upscale_models /mnt/f/work/llm/stable-diffusion/stable-diffusion-webui/models/RealESRGAN
Adding extra search path upscale_models /mnt/f/work/llm/stable-diffusion/stable-diffusion-webui/models/SwinIR
Adding extra search path embeddings /mnt/f/work/llm/stable-diffusion/stable-diffusion-webui/embeddings
Adding extra search path hypernetworks /mnt/f/work/llm/stable-diffusion/stable-diffusion-webui/models/hypernetworks
Adding extra search path controlnet /mnt/f/work/llm/stable-diffusion/stable-diffusion-webui/models/ControlNet
** ComfyUI startup time: 2025-09-22 17:13:05.111631
** Platform: Linux
** Python version: 3.13.3 (main, Aug 14 2025, 11:53:40) [GCC 14.2.0]
** Python executable: /home/mslinn/venv/default/bin/python
** Log path: /mnt/f/work/llm/ComfyUI/comfyui.log
Prestartup times for custom nodes:
   0.1 seconds: /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager
Checkpoint files will always be loaded safely.
Total VRAM 12288 MB, total RAM 15990 MB
pytorch version: 2.8.0+cu128
Set vram state to: NORMAL_VRAM
Device: cuda:0 NVIDIA GeForce RTX 3060 : cudaMallocAsync
Using pytorch attention
Python version: 3.13.3 (main, Aug 14 2025, 11:53:40) [GCC 14.2.0]
ComfyUI version: 0.3.59
ComfyUI frontend version: 1.26.13
[Prompt Server] web root: /home/mslinn/venv/default/lib/python3.13/site-packages/comfyui_frontend_package/static
### Loading: ComfyUI-Manager (V2.2.5)
## ComfyUI-Manager: installing dependencies
Collecting GitPython (from -r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 1))
  Downloading gitpython-3.1.45-py3-none-any.whl.metadata (13 kB)
Collecting matrix-client==0.4.0 (from -r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 2))
  Downloading matrix_client-0.4.0-py2.py3-none-any.whl.metadata (5.0 kB)
Requirement already satisfied: transformers in /home/mslinn/venv/default/lib/python3.13/site-packages (from -r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (4.56.2)
Requirement already satisfied: huggingface-hub>0.20 in /home/mslinn/venv/default/lib/python3.13/site-packages (from -r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 4)) (0.35.0)
Requirement already satisfied: requests~=2.22 in /home/mslinn/venv/default/lib/python3.13/site-packages (from matrix-client==0.4.0->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 2)) (2.32.5)
Collecting urllib3~=1.21 (from matrix-client==0.4.0->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 2))
  Downloading urllib3-1.26.20-py2.py3-none-any.whl.metadata (50 kB)
Requirement already satisfied: charset_normalizer<4,>=2 in /home/mslinn/venv/default/lib/python3.13/site-packages (from requests~=2.22->matrix-client==0.4.0->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 2)) (3.4.3)
Requirement already satisfied: idna<4,>=2.5 in /home/mslinn/venv/default/lib/python3.13/site-packages (from requests~=2.22->matrix-client==0.4.0->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 2)) (3.10)
Requirement already satisfied: certifi>=2017.4.17 in /home/mslinn/venv/default/lib/python3.13/site-packages (from requests~=2.22->matrix-client==0.4.0->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 2)) (2025.8.3)
Collecting gitdb<5,>=4.0.1 (from GitPython->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 1))
  Downloading gitdb-4.0.12-py3-none-any.whl.metadata (1.2 kB)
Collecting smmap<6,>=3.0.1 (from gitdb<5,>=4.0.1->GitPython->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 1))
  Downloading smmap-5.0.2-py3-none-any.whl.metadata (4.3 kB)
Requirement already satisfied: filelock in /home/mslinn/venv/default/lib/python3.13/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (3.19.1)
Requirement already satisfied: numpy>=1.17 in /home/mslinn/venv/default/lib/python3.13/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (2.3.3)
Requirement already satisfied: packaging>=20.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (25.0)
Requirement already satisfied: pyyaml>=5.1 in /home/mslinn/venv/default/lib/python3.13/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (6.0.2)
Requirement already satisfied: regex!=2019.12.17 in /home/mslinn/venv/default/lib/python3.13/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (2025.9.18)
Requirement already satisfied: tokenizers<=0.23.0,>=0.22.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (0.22.1)
Requirement already satisfied: safetensors>=0.4.3 in /home/mslinn/venv/default/lib/python3.13/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (0.6.2)
Requirement already satisfied: tqdm>=4.27 in /home/mslinn/venv/default/lib/python3.13/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (4.67.1)
Requirement already satisfied: fsspec>=2023.5.0 in /home/mslinn/venv/default/lib/python3.13/site-packages (from huggingface-hub>0.20->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 4)) (2025.9.0)
Requirement already satisfied: typing-extensions>=3.7.4.3 in /home/mslinn/venv/default/lib/python3.13/site-packages (from huggingface-hub>0.20->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 4)) (4.15.0)
Requirement already satisfied: hf-xet<2.0.0,>=1.1.3 in /home/mslinn/venv/default/lib/python3.13/site-packages (from huggingface-hub>0.20->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 4)) (1.1.10)
Downloading matrix_client-0.4.0-py2.py3-none-any.whl (43 kB)
Downloading urllib3-1.26.20-py2.py3-none-any.whl (144 kB)
Downloading gitpython-3.1.45-py3-none-any.whl (208 kB)
Downloading gitdb-4.0.12-py3-none-any.whl (62 kB)
Downloading smmap-5.0.2-py3-none-any.whl (24 kB)
Installing collected packages: urllib3, smmap, gitdb, matrix-client, GitPython
  Attempting uninstall: urllib3
    Found existing installation: urllib3 2.5.0
    Uninstalling urllib3-2.5.0:
      Successfully uninstalled urllib3-2.5.0
Successfully installed GitPython-3.1.45 gitdb-4.0.12 matrix-client-0.4.0 smmap-5.0.2 urllib3-1.26.20
## ComfyUI-Manager: installing dependencies done.
### ComfyUI Revision: 3945 [1fee8827] | Released on '2025-09-22'
FETCH DATA from: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/custom-node-list.json
FETCH DATA from: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/extension-node-map.json
FETCH DATA from: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/model-list.json
FETCH DATA from: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/alter-list.json
Import times for custom nodes:
   0.0 seconds: /mnt/f/work/llm/ComfyUI/custom_nodes/websocket_image_save.py
   3.3 seconds: /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager
[ComfyUI-Manager] default cache updated: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/custom-node-list.json
[ComfyUI-Manager] default cache updated: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/extension-node-map.json
[ComfyUI-Manager] default cache updated: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/model-list.json
Context impl SQLiteImpl.
Will assume non-transactional DDL.
No target revision found.
[ComfyUI-Manager] default cache updated: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/alter-list.json
Starting server
To see the GUI go to: http://127.0.0.1:8188

Unlike the experience with the Portable Version, the custom version did not open the web browser at http://127.0.0.1:8188. When I opened the page, I saw this:

Updating

The documentation for How to Update ComfyUI is extremely detailed. While I like detailed documentation, manually updating a complex product like ComfyUI, which has many moving parts and intermingles program and data, is risky.

Configuration

I was happy to see a section in the installation instructions that discussed sharing models between AUTOMATIC1111 and ComfyUI. The documentation says:

AUTOMATIC1111 and ComfyUI.

You only need to do two simple things to make this happen.

First, rename extra_model_paths.yaml.example in the ComfyUI directory to extra_model_paths.yaml by typing:

$ mv extra_model_paths.yaml{.example,}

The file extra_model_paths.yaml will look like this:

#Rename this to extra_model_paths.yaml and ComfyUI will load it

#config for a1111 ui
#all you have to do is change the base_path to where yours is installed
a111:
    base_path: path/to/stable-diffusion-webui/

    checkpoints: models/Stable-diffusion
    configs: models/Stable-diffusion
    vae: models/VAE
    loras: |
        models/Lora
        models/LyCORIS
    upscale_models: |
        models/ESRGAN
        models/RealESRGAN
        models/SwinIR
    embeddings: embeddings
    hypernetworks: models/hypernetworks
    controlnet: models/ControlNet

#config for comfyui
#your base path should be either an existing comfy install or a central folder where you store all of your models, loras, etc.

#comfyui:
#     base_path: path/to/comfyui/
#     checkpoints: models/checkpoints/
#     clip: models/clip/
#     clip_vision: models/clip_vision/
#     configs: models/configs/
#     controlnet: models/controlnet/
#     embeddings: models/embeddings/
#     loras: models/loras/
#     upscale_models: models/upscale_models/
#     vae: models/vae/

#other_ui:
#     base_path: path/to/ui
#     checkpoints: models/checkpoints
#     gligen: models/gligen
#     custom_nodes: path/custom_nodes

The example file as provided contains relative paths, but the documentation shows absolute paths. After some experimentation, I found that this software works with both relative and absolute paths.

Second, you should change path/to/ in this file to ../ so that base_path points at the stable-diffusion-webui directory next to the ComfyUI directory.

Here is an incantation that you can use to make the change:

$ OLD=path/to/stable-diffusion-webui/

$ NEW=../stable-diffusion-webui/

$ sed -i "s^$OLD^$NEW^" extra_model_paths.yaml

Configuration complete!
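Before starting ComfyUI, it may be worth confirming that the new base_path really resolves to the AUTOMATIC1111 directories. The sketch below is only an illustration: the function name check_a1111_dirs is hypothetical, and the list of subdirectories is copied from the a111 section of the file shown above. It assumes you run it from the ComfyUI directory, so that a relative path such as ../stable-diffusion-webui/ is resolved from there.

```shell
# Hypothetical helper: report which AUTOMATIC1111 model directories exist
# under a given base path. The subdirectory names are taken from the a111
# section of extra_model_paths.yaml.
check_a1111_dirs() {
  base="${1%/}"   # strip one trailing slash, if any
  missing=0
  for sub in models/Stable-diffusion models/VAE models/Lora models/LyCORIS \
             models/ESRGAN models/RealESRGAN models/SwinIR embeddings \
             models/hypernetworks models/ControlNet; do
    if [ -d "$base/$sub" ]; then
      echo "found   $base/$sub"
    else
      echo "missing $base/$sub"
      missing=$((missing + 1))
    fi
  done
  # Succeed only when every directory is present.
  [ "$missing" -eq 0 ]
}

# Example (run from the ComfyUI directory):
#   check_a1111_dirs ../stable-diffusion-webui/
```

A missing directory is not necessarily a problem, because not every AUTOMATIC1111 installation has every one of these subdirectories. If every directory is reported missing, however, base_path probably points at the wrong place.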

Aside: At first, it seemed unclear to me whether the comments in extra_model_paths.yaml meant that I had to make more changes to it to share files between the UIs. Then I noticed that the ComfyUI model subdirectories have lowercase names (for example, models/vae and models/loras), but the AUTOMATIC1111 subdirectories use mixed case (models/VAE and models/Lora). The a111 section of the configuration file, extra_model_paths.yaml, uses the AUTOMATIC1111 names, so that seems to indicate that setting base_path causes all the relative paths that follow to refer to the AUTOMATIC1111 directories. This makes sense.

When ComfyUI starts up, it generates a detailed message that indicates exactly where it is reading files from.

I found that the message clearly showed that the stable-diffusion-webui files were being picked up by ComfyUI.

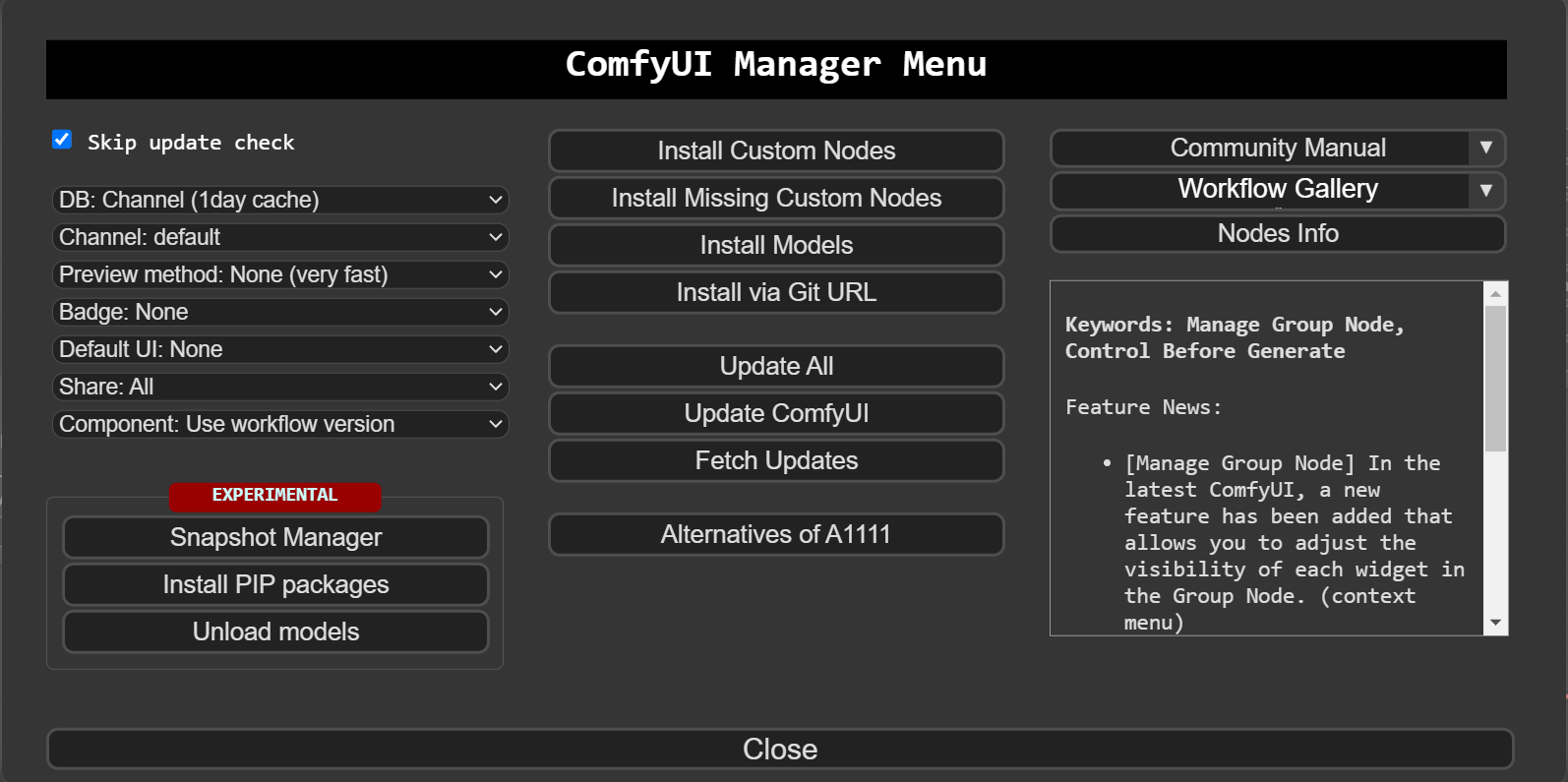
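To check the search paths without reading the whole startup message, the relevant lines can be filtered out. This is a minimal sketch, assuming the messages have the "Adding extra search path" form shown in the startup output in this article. The function name extra_search_paths and the file name startup.txt are hypothetical.

```shell
# Hypothetical filter: keep only the "Adding extra search path" lines from
# ComfyUI's startup output and print them as "<category> <directory>".
extra_search_paths() {
  sed -n 's/^Adding extra search path \([^ ]*\) \(.*\)$/\1 \2/p'
}

# Example: capture the startup output once, then filter the saved copy.
#   python main.py 2>&1 | tee startup.txt
#   extra_search_paths < startup.txt
```

Saving the output with tee first keeps the rest of the startup message visible, including the URL of the GUI.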

Extensions and Updating

The ComfyUI Manager extension allows you to update ComfyUI and easily add more extensions. This section discusses how to install the ComfyUI Manager extension.

You are probably not running ComfyUI on your computer yet. If this is the case, change to your ComfyUI installation directory and skip to the git clone step.

Otherwise, if ComfyUI is already running in a terminal session, stop it by typing CTRL-C into the terminal session a few times:

$ CTRL-C Stopped server

$ CTRL-C $

Now clone the ComfyUI-Manager git repository into the custom_nodes/ subdirectory of ComfyUI by typing:

$ git clone https://github.com/ltdrdata/ComfyUI-Manager.git \
  custom_nodes/ComfyUI-Manager
Cloning into 'custom_nodes/ComfyUI-Manager'...
remote: Enumerating objects: 5820, done.
remote: Counting objects: 100% (585/585), done.
remote: Compressing objects: 100% (197/197), done.
remote: Total 5820 (delta 410), reused 489 (delta 384), pack-reused 5235
Receiving objects: 100% (5820/5820), 4.36 MiB | 1.83 MiB/s, done.
Resolving deltas: 100% (4126/4126), done.
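If you are not sure whether ComfyUI-Manager was already cloned, a small check avoids the error that git clone reports when the target directory already exists and is not empty. The sketch below is an assumption-laden illustration, not part of ComfyUI or ComfyUI-Manager. The function name manager_action is hypothetical, and the default path assumes you are in the ComfyUI directory.

```shell
# Hypothetical helper: decide whether ComfyUI-Manager needs a fresh clone
# or only an update, based on whether the target is already a git checkout.
manager_action() {
  target="${1:-custom_nodes/ComfyUI-Manager}"
  if [ -d "$target/.git" ]; then
    echo pull
  else
    echo clone
  fi
}

# Example (run from the ComfyUI directory):
#   case "$(manager_action)" in
#     clone) git clone https://github.com/ltdrdata/ComfyUI-Manager.git \
#              custom_nodes/ComfyUI-Manager ;;
#     pull)  git -C custom_nodes/ComfyUI-Manager pull ;;
#   esac
```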

Model

If you want to manage your model files outside of ComfyUI/models, you may have the following reasons:

- You have multiple ComfyUI instances and want them to share model files to save disk space

- You have different types of GUI programs (such as WebUI) and want them to use the same model files

- Model files cannot be recognized or found.

We provide a way to add extra model search paths via the extra_model_paths.yaml configuration file

$ find . -iname 'extra_model_paths*'
./extra_model_paths.yaml
./extra_model_paths.yaml.example
./extra_model_paths.yamle
./tests/execution/extra_model_paths.yaml

#Rename this to extra_model_paths.yaml and ComfyUI will load it

#config for a1111 ui
#all you have to do is change the base_path to where yours is installed
a111:
    base_path: path/to/stable-diffusion-webui/

    checkpoints: models/Stable-diffusion
    configs: models/Stable-diffusion
    vae: models/VAE
    loras: |
        models/Lora
        models/LyCORIS
    upscale_models: |
        models/ESRGAN
        models/RealESRGAN
        models/SwinIR
    embeddings: embeddings
    hypernetworks: models/hypernetworks
    controlnet: models/ControlNet

#config for comfyui
#your base path should be either an existing comfy install or a central folder where you store all of your models, loras, etc.

#comfyui:
#     base_path: path/to/comfyui/
#     # You can use is_default to mark that these folders should be listed first, and used as the default dirs for eg downloads
#     #is_default: true
#     checkpoints: models/checkpoints/
#     clip: models/clip/
#     clip_vision: models/clip_vision/
#     configs: models/configs/
#     controlnet: models/controlnet/
#     diffusion_models: |
#                  models/diffusion_models
#                  models/unet
#     embeddings: models/embeddings/
#     loras: models/loras/
#     upscale_models: models/upscale_models/
#     vae: models/vae/

#other_ui:
#     base_path: path/to/ui
#     checkpoints: models/checkpoints
#     gligen: models/gligen
#     custom_nodes: path/custom_nodes

The Mac installation instructions tell readers to download the Stable Diffusion v1.5 model. That is a good idea; however, because I wanted to share that model with the stable-diffusion-webui user interface, I typed the following:

$ DEST=../stable-diffusion-webui/models/Stable-diffusion/

$ HUG=https://huggingface.co

$ SDCK=$HUG/runwayml/stable-diffusion-v1-5/resolve/main

$ URL=$SDCK/v1-5-pruned-emaonly.ckpt

$ wget -P "$DEST" $URL

Running ComfyUI

The ComfyUI help message is:

$ python main.py -h
usage: main.py [-h] [--listen [IP]] [--port PORT]
               [--tls-keyfile TLS_KEYFILE] [--tls-certfile TLS_CERTFILE]
               [--enable-cors-header [ORIGIN]]
               [--max-upload-size MAX_UPLOAD_SIZE]
               [--base-directory BASE_DIRECTORY]
               [--extra-model-paths-config PATH [PATH ...]]
               [--output-directory OUTPUT_DIRECTORY]
               [--temp-directory TEMP_DIRECTORY]
               [--input-directory INPUT_DIRECTORY] [--auto-launch]
               [--disable-auto-launch] [--cuda-device DEVICE_ID]
               [--default-device DEFAULT_DEVICE_ID]
               [--cuda-malloc | --disable-cuda-malloc]
               [--force-fp32 | --force-fp16]
               [--fp32-unet | --fp64-unet | --bf16-unet | --fp16-unet | --fp8_e4m3fn-unet | --fp8_e5m2-unet | --fp8_e8m0fnu-unet]
               [--fp16-vae | --fp32-vae | --bf16-vae] [--cpu-vae]
               [--fp8_e4m3fn-text-enc | --fp8_e5m2-text-enc | --fp16-text-enc | --fp32-text-enc | --bf16-text-enc]
               [--force-channels-last] [--directml [DIRECTML_DEVICE]]
               [--oneapi-device-selector SELECTOR_STRING]
               [--disable-ipex-optimize] [--supports-fp8-compute]
               [--preview-method [none,auto,latent2rgb,taesd]]
               [--preview-size PREVIEW_SIZE]
               [--cache-classic | --cache-lru CACHE_LRU | --cache-none]
               [--use-split-cross-attention | --use-quad-cross-attention | --use-pytorch-cross-attention | --use-sage-attention | --use-flash-attention]
               [--disable-xformers]
               [--force-upcast-attention | --dont-upcast-attention]
               [--gpu-only | --highvram | --normalvram | --lowvram | --novram | --cpu]
               [--reserve-vram RESERVE_VRAM] [--async-offload]
               [--force-non-blocking]
               [--default-hashing-function {md5,sha1,sha256,sha512}]
               [--disable-smart-memory] [--deterministic]
               [--fast [FAST ...]] [--mmap-torch-files] [--disable-mmap]
               [--dont-print-server] [--quick-test-for-ci]
               [--windows-standalone-build] [--disable-metadata]
               [--disable-all-custom-nodes]
               [--whitelist-custom-nodes WHITELIST_CUSTOM_NODES [WHITELIST_CUSTOM_NODES ...]]
               [--disable-api-nodes] [--multi-user]
               [--verbose [{DEBUG,INFO,WARNING,ERROR,CRITICAL}]]
               [--log-stdout] [--front-end-version FRONT_END_VERSION]
               [--front-end-root FRONT_END_ROOT]
               [--user-directory USER_DIRECTORY]
               [--enable-compress-response-body]
               [--comfy-api-base COMFY_API_BASE]
               [--database-url DATABASE_URL]

options:
  -h, --help
        show this help message and exit
  --listen [IP]
        Specify the IP address to listen on (default: 127.0.0.1). You can give a list of ip addresses by separating them with a comma like: 127.2.2.2,127.3.3.3 If --listen is provided without an argument, it defaults to 0.0.0.0,:: (listens on all ipv4 and ipv6)
  --port PORT
        Set the listen port.
  --tls-keyfile TLS_KEYFILE
        Path to TLS (SSL) key file. Enables TLS, makes app accessible at https://... requires --tls-certfile to function
  --tls-certfile TLS_CERTFILE
        Path to TLS (SSL) certificate file. Enables TLS, makes app accessible at https://... requires --tls-keyfile to function
  --enable-cors-header [ORIGIN]
        Enable CORS (Cross-Origin Resource Sharing) with optional origin or allow all with default '*'.
  --max-upload-size MAX_UPLOAD_SIZE
        Set the maximum upload size in MB.
  --base-directory BASE_DIRECTORY
        Set the ComfyUI base directory for models, custom_nodes, input, output, temp, and user directories.
  --extra-model-paths-config PATH [PATH ...]
        Load one or more extra_model_paths.yaml files.
  --output-directory OUTPUT_DIRECTORY
        Set the ComfyUI output directory. Overrides --base-directory.
  --temp-directory TEMP_DIRECTORY
        Set the ComfyUI temp directory (default is in the ComfyUI directory). Overrides --base-directory.
  --input-directory INPUT_DIRECTORY
        Set the ComfyUI input directory. Overrides --base-directory.
  --auto-launch
        Automatically launch ComfyUI in the default browser.
  --disable-auto-launch
        Disable auto launching the browser.
  --cuda-device DEVICE_ID
        Set the id of the cuda device this instance will use. All other devices will not be visible.
  --default-device DEFAULT_DEVICE_ID
        Set the id of the default device, all other devices will stay visible.
  --cuda-malloc
        Enable cudaMallocAsync (enabled by default for torch 2.0 and up).
  --disable-cuda-malloc
        Disable cudaMallocAsync.
  --force-fp32
        Force fp32 (If this makes your GPU work better please report it).
  --force-fp16
        Force fp16.
  --fp32-unet
        Run the diffusion model in fp32.
  --fp64-unet
        Run the diffusion model in fp64.
  --bf16-unet
        Run the diffusion model in bf16.
  --fp16-unet
        Run the diffusion model in fp16
  --fp8_e4m3fn-unet
        Store unet weights in fp8_e4m3fn.
  --fp8_e5m2-unet
        Store unet weights in fp8_e5m2.
  --fp8_e8m0fnu-unet
        Store unet weights in fp8_e8m0fnu.
  --fp16-vae
        Run the VAE in fp16, might cause black images.
  --fp32-vae
        Run the VAE in full precision fp32.
  --bf16-vae
        Run the VAE in bf16.
  --cpu-vae
        Run the VAE on the CPU.
  --fp8_e4m3fn-text-enc
        Store text encoder weights in fp8 (e4m3fn variant).
  --fp8_e5m2-text-enc
        Store text encoder weights in fp8 (e5m2 variant).
  --fp16-text-enc
        Store text encoder weights in fp16.
  --fp32-text-enc
        Store text encoder weights in fp32.
  --bf16-text-enc
        Store text encoder weights in bf16.
  --force-channels-last
        Force channels last format when inferencing the models.
  --directml [DIRECTML_DEVICE]
        Use torch-directml.
  --oneapi-device-selector SELECTOR_STRING
        Sets the oneAPI device(s) this instance will use.
  --disable-ipex-optimize
        Disables ipex.optimize default when loading models with Intel's Extension for Pytorch.
  --supports-fp8-compute
        ComfyUI will act like if the device supports fp8 compute.
  --preview-method [none,auto,latent2rgb,taesd]
        Default preview method for sampler nodes.
  --preview-size PREVIEW_SIZE
        Sets the maximum preview size for sampler nodes.
  --cache-classic
        Use the old style (aggressive) caching.
  --cache-lru CACHE_LRU
        Use LRU caching with a maximum of N node results cached. May use more RAM/VRAM.
  --cache-none
        Reduced RAM/VRAM usage at the expense of executing every node for each run.
  --use-split-cross-attention
        Use the split cross attention optimization. Ignored when xformers is used.
  --use-quad-cross-attention
        Use the sub-quadratic cross attention optimization . Ignored when xformers is used.
  --use-pytorch-cross-attention
        Use the new pytorch 2.0 cross attention function.
  --use-sage-attention
        Use sage attention.
  --use-flash-attention
        Use FlashAttention.
  --disable-xformers
        Disable xformers.
  --force-upcast-attention
        Force enable attention upcasting, please report if it fixes black images.
  --dont-upcast-attention
        Disable all upcasting of attention. Should be unnecessary except for debugging.
  --gpu-only
        Store and run everything (text encoders/CLIP models, etc... on the GPU).
  --highvram
        By default models will be unloaded to CPU memory after being used. This option keeps them in GPU memory.
  --normalvram
        Used to force normal vram use if lowvram gets automatically enabled.
  --lowvram
        Split the unet in parts to use less vram.
  --novram
        When lowvram isn't enough.
  --cpu
        To use the CPU for everything (slow).
  --reserve-vram RESERVE_VRAM
        Set the amount of vram in GB you want to reserve for use by your OS/other software. By default some amount is reserved depending on your OS.
  --async-offload
        Use async weight offloading.
  --force-non-blocking
        Force ComfyUI to use non-blocking operations for all applicable tensors. This may improve performance on some non-Nvidia systems but can cause issues with some workflows.
  --default-hashing-function {md5,sha1,sha256,sha512}
        Allows you to choose the hash function to use for duplicate filename / contents comparison. Default is sha256.
  --disable-smart-memory
        Force ComfyUI to agressively offload to regular ram instead of keeping models in vram when it can.
  --deterministic
        Make pytorch use slower deterministic algorithms when it can. Note that this might not make images deterministic in all cases.
  --fast [FAST ...]
        Enable some untested and potentially quality deteriorating optimizations. --fast with no arguments enables everything. You can pass a list specific optimizations if you only want to enable specific ones. Current valid optimizations: fp16_accumulation fp8_matrix_mult cublas_ops autotune
  --mmap-torch-files
        Use mmap when loading ckpt/pt files.
  --disable-mmap
        Don't use mmap when loading safetensors.
  --dont-print-server
        Don't print server output.
  --quick-test-for-ci
        Quick test for CI.
  --windows-standalone-build
        Windows standalone build: Enable convenient things that most people using the standalone windows build will probably enjoy (like auto opening the page on startup).
  --disable-metadata
        Disable saving prompt metadata in files.
  --disable-all-custom-nodes
        Disable loading all custom nodes.
  --whitelist-custom-nodes WHITELIST_CUSTOM_NODES [WHITELIST_CUSTOM_NODES ...]
        Specify custom node folders to load even when --disable-all-custom-nodes is enabled.
  --disable-api-nodes
        Disable loading all api nodes.
  --multi-user
        Enables per-user storage.
  --verbose [{DEBUG,INFO,WARNING,ERROR,CRITICAL}]
        Set the logging level
  --log-stdout
        Send normal process output to stdout instead of stderr (default).
  --front-end-version FRONT_END_VERSION
        Specifies the version of the frontend to be used. This command needs internet connectivity to query and download available frontend implementations from GitHub releases. The version string should be in the format of: [repoOwner]/[repoName]@[version] where version is one of: "latest" or a valid version number (e.g. "1.0.0")
  --front-end-root FRONT_END_ROOT
        The local filesystem path to the directory where the frontend is located. Overrides --front-end-version.
  --user-directory USER_DIRECTORY
        Set the ComfyUI user directory with an absolute path. Overrides --base-directory.
  --enable-compress-response-body
        Enable compressing response body.
  --comfy-api-base COMFY_API_BASE
        Set the base URL for the ComfyUI API. (default: https://api.comfy.org)
  --database-url DATABASE_URL
        Specify the database URL, e.g. for an in-memory database you can use 'sqlite:///:memory:'.

Useful Options

--auto-launch - This option opens the default web browser with the default ComfyUI project on startup.

--highvram - By default, models are unloaded to CPU memory after being used. This option keeps models in GPU memory, which speeds up multiple uses.

--output-directory - The default output directory is ~/Downloads. Change it with this option.

--preview-method - Use --preview-method auto to enable previews. The default installation includes a fast but low-resolution latent preview method. To enable higher-quality previews with TAESD, download the taesd_decoder.pth (for SD1.x and SD2.x) and taesdxl_decoder.pth (for SDXL) models and place them in the models/vae_approx folder. Once they are installed, restart ComfyUI to enable high-quality previews.

--temp-directory - The default directory for temporary files is the installation directory, which is unfortunate. Change it with this option. For example:

--temp-directory /tmp
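For the --preview-method option, it can be handy to check whether the TAESD decoder files are already in place before restarting ComfyUI. The following is a minimal sketch under stated assumptions: the function name missing_taesd_decoders is hypothetical, and the argument is assumed to be the ComfyUI installation directory. The two file names and the models/vae_approx folder are the ones named in the option description.

```shell
# Hypothetical helper: list the TAESD decoder files that are not yet
# present in models/vae_approx under the given ComfyUI directory.
missing_taesd_decoders() {
  dir="${1%/}/models/vae_approx"
  for f in taesd_decoder.pth taesdxl_decoder.pth; do
    [ -f "$dir/$f" ] || echo "$f"
  done
}

# Example, using the comfyui environment variable described in this article:
#   missing_taesd_decoders "$comfyui"
```

No output means both decoders were found.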

Comfyui Script

Below is a script called comfyui, which runs ComfyUI. The script:

- Sets the output directory to the current directory.

- Uses the GPU if present, and keeps the models loaded into GPU memory between iterations.

- Creates a temporary directory and automatically removes it after ComfyUI exits.

- Requires an environment variable called comfyui to be set, which points at the directory that ComfyUI was installed into. You could set the variable in ~/.bashrc, like this:

export comfyui=/mnt/c/work/llm/ComfyUI

I broke the definition into two environment variables in ~/.bashrc:

export llm="/mnt/c/work/llm"
export comfyui="$llm/ComfyUI"

And now, the comfyui script, in all its glory:

#!/bin/bash

RED='\033[0;31m'
RESET='\033[0m' # No Color

# The comfyui environment variable must point at the ComfyUI installation.
if [ -z "$comfyui" ]; then
  printf "${RED}Error: The comfyui environment variable was not set.\n"
  printf "Please see https://mslinn.com/llm/7400-comfyui.html#installation${RESET}\n"
  exit 2
fi

if [ ! -d "$comfyui" ]; then
  printf "${RED}Error: The directory that the comfyui environment variable points to, '$comfyui', does not exist.${RESET}\n"
  exit 3
fi

if [ ! -f "$comfyui/main.py" ]; then
  printf "${RED}The directory that the comfyui environment variable points to, '$comfyui', does not contain main.py.\n"
  printf "Is this actually the ComfyUI directory?${RESET}\n"
  exit 3
fi

# Generated images are written to the directory the script was started from.
OUT_DIR="$(pwd)"

WORK_DIR="$(mktemp -d)"
if [[ ! "$WORK_DIR" || ! -d "$WORK_DIR" ]]; then
  printf "${RED}Error: Could not create temporary directory.${RESET}\n"
  exit 1
fi

function cleanup {
  rm -rf "$WORK_DIR"
}

# register the cleanup function to be called on the EXIT signal
trap cleanup EXIT

cd "$comfyui" || exit

python main.py \
  --auto-launch \
  --highvram \
  --output-directory "$OUT_DIR" \
  --preview-method auto \
  --temp-directory "$WORK_DIR"
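The temporary-directory handling in the script can be tried in isolation. The sketch below uses the same mktemp and trap technique, wrapped in a subshell so the cleanup runs as soon as the wrapped command finishes. The function name with_temp_dir is hypothetical and is not part of the comfyui script.

```shell
#!/bin/bash

# Hypothetical helper: run a command with a private temporary directory
# appended as its last argument, and remove that directory afterwards.
with_temp_dir() {
  (
    work_dir="$(mktemp -d)" || exit 1
    # The EXIT trap fires when this subshell ends, normally or on error.
    trap 'rm -rf "$work_dir"' EXIT
    "$@" "$work_dir"
  )
}

# Usage: list the (empty) temporary directory while it still exists.
with_temp_dir ls -la
```

Because the trap is registered inside the subshell, the directory is removed even if the wrapped command fails, which is the same guarantee the comfyui script gets from trap cleanup EXIT.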

Launching

This is the output from launching ComfyUI with the comfyui script:

$ comfyui
** ComfyUI startup time: 2024-01-22 18:08:35.283187
** Platform: Linux
** Python version: 3.11.6 (main, Oct 8 2023, 05:06:43) [GCC 13.2.0]
** Python executable: /mnt/f/work/llm/ComfyUI/.comfyui_env/bin/python
** Log path: /mnt/f/work/llm/ComfyUI/comfyui.log
Prestartup times for custom nodes:
  0.1 seconds: /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager
Total VRAM 12288 MB, total RAM 7942 MB
Set vram state to: NORMAL_VRAM
Device: cuda:0 NVIDIA GeForce RTX 3060 : cudaMallocAsync
VAE dtype: torch.bfloat16
Using pytorch cross attention
Adding extra search path checkpoints ../stable-diffusion/stable-diffusion-webui/models/Stable-diffusion
Adding extra search path configs ../stable-diffusion/stable-diffusion-webui/models/Stable-diffusion
Adding extra search path vae ../stable-diffusion/stable-diffusion-webui/models/VAE
Adding extra search path loras ../stable-diffusion/stable-diffusion-webui/models/Lora
Adding extra search path loras ../stable-diffusion/stable-diffusion-webui/models/LyCORIS
Adding extra search path upscale_models ../stable-diffusion/stable-diffusion-webui/models/ESRGAN
Adding extra search path upscale_models ../stable-diffusion/stable-diffusion-webui/models/RealESRGAN
Adding extra search path upscale_models ../stable-diffusion/stable-diffusion-webui/models/SwinIR
Adding extra search path embeddings ../stable-diffusion/stable-diffusion-webui/embeddings
Adding extra search path hypernetworks ../stable-diffusion/stable-diffusion-webui/models/hypernetworks
Adding extra search path controlnet ../stable-diffusion/stable-diffusion-webui/models/ControlNet
### Loading: ComfyUI-Manager (V2.2.5)
## ComfyUI-Manager: installing dependencies
Collecting GitPython (from -r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 1))
  Obtaining dependency information for GitPython from https://files.pythonhosted.org/packages/45/c6/a637a7a11d4619957cb95ca195168759a4502991b1b91c13d3203ffc3748/GitPython-3.1.41-py3-none-any.whl.metadata
  Downloading GitPython-3.1.41-py3-none-any.whl.metadata (14 kB)
Collecting matrix-client==0.4.0 (from -r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 2))
  Downloading matrix_client-0.4.0-py2.py3-none-any.whl (43 kB)
  ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 43.5/43.5 kB 8.2 MB/s eta 0:00:00
Requirement already satisfied: transformers in ./.comfyui_env/lib/python3.11/site-packages (from -r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (4.36.2)
Requirement already satisfied: huggingface-hub>0.20 in ./.comfyui_env/lib/python3.11/site-packages (from -r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 4)) (0.20.2)
Requirement already satisfied: requests~=2.22 in ./.comfyui_env/lib/python3.11/site-packages (from matrix-client==0.4.0->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 2)) (2.31.0)
Collecting urllib3~=1.21 (from matrix-client==0.4.0->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 2))
  Obtaining dependency information for urllib3~=1.21 from https://files.pythonhosted.org/packages/b0/53/aa91e163dcfd1e5b82d8a890ecf13314e3e149c05270cc644581f77f17fd/urllib3-1.26.18-py2.py3-none-any.whl.metadata
  Downloading urllib3-1.26.18-py2.py3-none-any.whl.metadata (48 kB)
  ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 48.9/48.9 kB 16.0 MB/s eta 0:00:00
Collecting gitdb<5,>=4.0.1 (from GitPython->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 1))
  Obtaining dependency information for gitdb<5,>=4.0.1 from https://files.pythonhosted.org/packages/fd/5b/8f0c4a5bb9fd491c277c21eff7ccae71b47d43c4446c9d0c6cff2fe8c2c4/gitdb-4.0.11-py3-none-any.whl.metadata
  Using cached gitdb-4.0.11-py3-none-any.whl.metadata (1.2 kB)
Requirement already satisfied: filelock in ./.comfyui_env/lib/python3.11/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (3.13.1)
Requirement already satisfied: numpy>=1.17 in ./.comfyui_env/lib/python3.11/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (1.26.3)
Requirement already satisfied: packaging>=20.0 in ./.comfyui_env/lib/python3.11/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (23.2)
Requirement already satisfied: pyyaml>=5.1 in ./.comfyui_env/lib/python3.11/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (6.0.1)
Requirement already satisfied: regex!=2019.12.17 in ./.comfyui_env/lib/python3.11/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (2023.12.25)
Requirement already satisfied: tokenizers<0.19,>=0.14 in ./.comfyui_env/lib/python3.11/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (0.15.0)
Requirement already satisfied: safetensors>=0.3.1 in ./.comfyui_env/lib/python3.11/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (0.4.1)
Requirement already satisfied: tqdm>=4.27 in ./.comfyui_env/lib/python3.11/site-packages (from transformers->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 3)) (4.66.1)
Requirement already satisfied: fsspec>=2023.5.0 in ./.comfyui_env/lib/python3.11/site-packages (from huggingface-hub>0.20->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 4)) (2023.12.2)
Requirement already satisfied: typing-extensions>=3.7.4.3 in ./.comfyui_env/lib/python3.11/site-packages (from huggingface-hub>0.20->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 4)) (4.9.0)
Collecting smmap<6,>=3.0.1 (from gitdb<5,>=4.0.1->GitPython->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 1))
  Obtaining dependency information for smmap<6,>=3.0.1 from https://files.pythonhosted.org/packages/a7/a5/10f97f73544edcdef54409f1d839f6049a0d79df68adbc1ceb24d1aaca42/smmap-5.0.1-py3-none-any.whl.metadata
  Using cached smmap-5.0.1-py3-none-any.whl.metadata (4.3 kB)
Requirement already satisfied: charset-normalizer<4,>=2 in ./.comfyui_env/lib/python3.11/site-packages (from requests~=2.22->matrix-client==0.4.0->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 2)) (3.3.2)
Requirement already satisfied: idna<4,>=2.5 in ./.comfyui_env/lib/python3.11/site-packages (from requests~=2.22->matrix-client==0.4.0->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 2)) (3.6)
Requirement already satisfied: certifi>=2017.4.17 in ./.comfyui_env/lib/python3.11/site-packages (from requests~=2.22->matrix-client==0.4.0->-r /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager/requirements.txt (line 2)) (2023.11.17)
Downloading GitPython-3.1.41-py3-none-any.whl (196 kB)
  ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 196.4/196.4 kB 51.6 MB/s eta 0:00:00
Using cached gitdb-4.0.11-py3-none-any.whl (62 kB)
Downloading urllib3-1.26.18-py2.py3-none-any.whl (143 kB)
  ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 143.8/143.8 kB 53.2 MB/s eta 0:00:00
Using cached smmap-5.0.1-py3-none-any.whl (24 kB)
Installing collected packages: urllib3, smmap, gitdb, matrix-client, GitPython
  Attempting uninstall: urllib3
    Found existing installation: urllib3 2.1.0
    Uninstalling urllib3-2.1.0:
      Successfully uninstalled urllib3-2.1.0
Successfully installed GitPython-3.1.41 gitdb-4.0.11 matrix-client-0.4.0 smmap-5.0.1 urllib3-1.26.18
## ComfyUI-Manager: installing dependencies done.
### ComfyUI Revision: 1923 [ef5a28b5] | Released on '2024-01-20'
FETCH DATA from: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/custom-node-list.json
Import times for custom nodes:
  6.1 seconds: /mnt/f/work/llm/ComfyUI/custom_nodes/ComfyUI-Manager
Starting server
To see the GUI go to: http://127.0.0.1:8188
FETCH DATA from: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/extension-node-map.json
FETCH DATA from: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/model-list.json
FETCH DATA from: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/alter-list.json
[ComfyUI-Manager] default cache updated: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/custom-node-list.json
[ComfyUI-Manager] default cache updated: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/model-list.json
[ComfyUI-Manager] default cache updated: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/alter-list.json
[ComfyUI-Manager] default cache updated: https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/extension-node-map.json

Point your web browser to localhost:8188 if it does not open automatically, and you will see the default project.

The ComfyUI Queue panel will have a button for the Manager extension that you just installed.

Clicking on the Manager button will open the ComfyUI Manager Menu panel:

ComfyUI writes its log to comfyui.log in its directory, and backs up any previous file of that name to comfyui.prev.log and comfyui.prev2.log.

The Linux Filesystem Hierarchy Standard states that log files should be placed in /var/log. ComfyUI does not make any provision for that. This deficiency means that production installations are more likely to have permission-related security problems.
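The backup scheme for comfyui.log described above can be sketched in a few lines of shell. This is an illustration of the file shuffling only, under the assumption that the oldest backup is overwritten; it is not the code that actually performs the rotation. The function name rotate_logs is hypothetical, and the demonstration runs in a scratch directory so no real log files are touched.

```shell
#!/bin/bash

# Hypothetical helper: shift existing logs one generation back.
#   comfyui.prev.log -> comfyui.prev2.log  (oldest backup is overwritten)
#   comfyui.log      -> comfyui.prev.log
rotate_logs() {
  local dir="$1"
  if [ -f "$dir/comfyui.prev.log" ]; then
    mv -f "$dir/comfyui.prev.log" "$dir/comfyui.prev2.log"
  fi
  if [ -f "$dir/comfyui.log" ]; then
    mv -f "$dir/comfyui.log" "$dir/comfyui.prev.log"
  fi
}

# Demonstration: simulate two launches in a scratch directory.
demo_dir="$(mktemp -d)"
echo "first run" > "$demo_dir/comfyui.log"
rotate_logs "$demo_dir"
echo "second run" > "$demo_dir/comfyui.log"
rotate_logs "$demo_dir"
ls "$demo_dir"
rm -rf "$demo_dir"
```

After the two simulated launches, the scratch directory holds the two backup files, with the most recent log contents in comfyui.prev.log.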

stable-diffusion-art.com

wrote up how to generate an image using ComfyUI.

This is a good video on first steps with ComfyUI:

Hotkeys

| Key Binding | Description |
|---|---|
| CTRL - Enter | Queue up current graph for generation |
| CTRL - SHIFT - Enter | Queue up current graph as first for generation |
| CTRL - ALT - Enter | Cancel current generation |
| CTRL - Z/CTRL - Y | Undo/Redo |
| CTRL - S | Save workflow |
| CTRL - O | Load workflow |
| CTRL - A | Select all nodes |
| ALT - C | Collapse/uncollapse selected nodes |
| CTRL - M | Mute/unmute selected nodes |
| CTRL - B | Bypass selected nodes (acts like the node was removed from the graph and the wires reconnected through) |
| Delete/Backspace | Delete selected nodes |
| CTRL - Backspace | Delete the current graph |
| Space | Moves the canvas around when the space key is held down and the cursor is moved |
| CTRL/SHIFT - Click | Add clicked node to selection |
| CTRL - C/CTRL - V | Copy and paste selected nodes (without maintaining connections to outputs of unselected nodes) |
| CTRL - C/CTRL - SHIFT - V | Copy and paste selected nodes (maintaining connections from outputs of unselected nodes to inputs of pasted nodes) |
| SHIFT - Drag | Move multiple selected nodes at the same time |
| CTRL - D | Load default graph |
| ALT - + | Canvas Zoom in |
| ALT - - | Canvas Zoom out |
| CTRL - SHIFT - left mouse button - vertical drag | Canvas Zoom in/out |
| P | Pin/Unpin selected nodes |
| CTRL - G | Group selected nodes |
| Q | Toggle visibility of the queue |
| H | Toggle visibility of history |
| R | Refresh graph |
| F | Show/Hide menu |
| . | Fit view to selection, or fit to the entire graph when nothing is selected |
| Double-Click left mouse button | Open node quick search palette |
| SHIFT - drag | Move multiple wires at once |
| CTRL - ALT - left mouse button | Disconnect all wires from the clicked slot |

AudioReactive

RyanOnTheInside’s ComfyUI AudioReactive Node Pack.

More Videos

The following videos are by Scott Detweiler, a quality assurance staff member at stability.ai. Scott's job is to ensure Stable Diffusion and related software work properly. He is obviously an experienced user, although he can be hard to understand because he speaks rather quickly and often does not articulate words clearly.

Check out his portfolio.

Usage Notes

The ComfyUI README has a section entitled Notes. These are actually usage notes, with suggestions on how to make prompts, including wildcard and dynamic prompts.

References

- ComfyUI on Matrix, an open-source secure messenger and collaboration app, similar to Discord but with end-to-end encryption.

- Discord channel