Published 2025-01-06.

Last modified 2025-08-12.

Time to read: 7 minutes.

git collection.

I have published 8 articles about the Git large file system (LFS). They are meant to be read in order.

- Git Large File System Overview

- Extra Git and Git LFS Commands

- Git LFS Client Installation

- Git LFS Server URLs

- Git-ls-files, Wildmatch Patterns and Permutation Scripts

- Git LFS Tracking, Migration and Un-Migration

- Git LFS Client Configuration & Commands

- Git LFS SSH Authentication

- Working With Git LFS

- Evaluation Procedure For Git LFS Servers

- Git LFS server tests:

6 articles are still in process.

Instructions for typing along are given for Ubuntu and WSL/Ubuntu. If you have a Mac, the compiled Go programs provided on GitHub should install easily, and most of the textual information should be helpful.

I evaluated several Git LFS servers. Scripts and procedures common to each evaluation are described in this article. This article concludes with a brief outline of the procedure followed for each of the Git LFS servers that I chose to evaluate.

The results of evaluating each server are documented in follow-on articles.

If you evaluate another Git LFS implementation using the scripts provided in this article, please send me detailed results. My coordinates are on the front page of this website.

Many websites show examples of how to work with Git LFS by tracking small files. This is quicker and easier than testing with files over 100 MB, which can be slow and consume a lot of storage space. However, testing with small files avoids most of the practical details that an evaluation is intended to surface.

Furthermore, you should also see what happens when your LFS storage exceeds 1 GB, and what happens when you attempt to commit more than 2 GB of content in a single commit.

If you follow along with the instructions I give in the evaluations, large files will actually be used, non-trivial amounts of LFS storage will be used, and practical issues may arise. This is the motivation for performing evaluations, after all.

Git LFS Evaluation Procedure

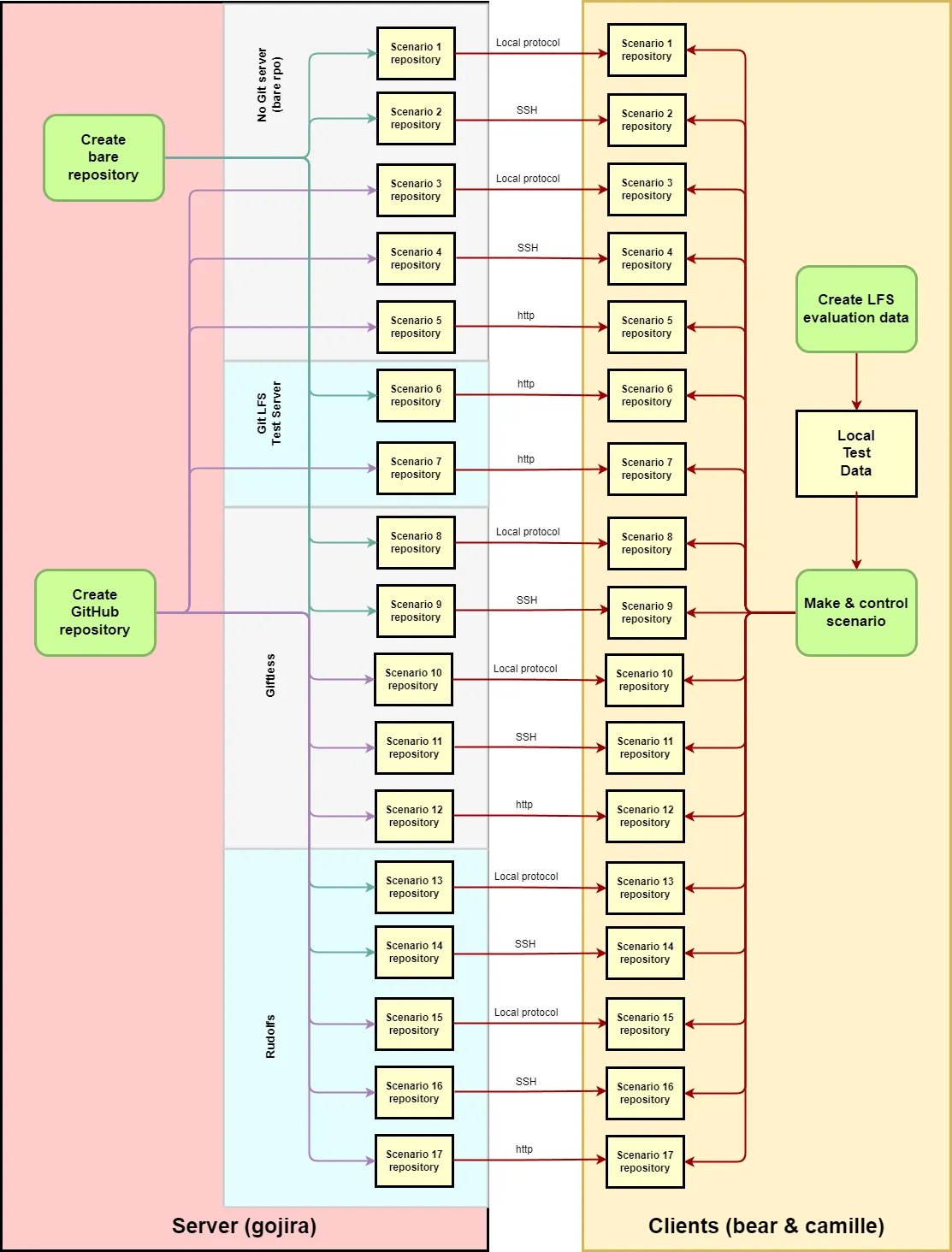

Each of the next several articles is dedicated to the evaluation of a specific Git LFS server. Each Git LFS server is evaluated in several scenarios. The high-level process for each evaluation scenario is as follows:

- The Git LFS server is installed.

- Potential Git LFS server configuration is considered, and any relevant activities are performed.

- Each evaluation is performed in a series of steps. The step script sequences the stages of each Git LFS evaluation scenario. It uses test data provided by the git_lfs_test_data script and stored in $work/git/git_lfs_test_data.

- For the null server tests, the new_bare_repo script is used.

- For most of the Git LFS servers tested, the create_lfs_eval_repo script is used to create the evaluation repository as a subdirectory of ~/git_lfs_repos on gojira.


Step Script

The step script accepts the scenario number and the step number.

Each of the steps is shown below, along with a description of the actions performed for that step.

1. The Git LFS server URL is saved into an appropriately crafted .lfsconfig file for the evaluation scenario. The track script specifies the wildmatch patterns that determine the files to be stored in Git LFS. An initial set of files is copied into the repository, and CRCs are computed for each file and stored.

2. The initial set of files is added to the Git and Git LFS repositories, and the time that took is saved. The files are committed, and the time that took is saved. The files are pushed, and the time that took is saved. The client-side and server-side Git repositories are examined. Where are the files stored? How big are they? Etc.

3. Big and small files are updated, deleted and renamed. CRCs are computed for all files and saved. The CRCs of files that did not change are examined to make sure that the CRCs did not change. The client-side and server-side Git repositories are examined. Where are the files stored? How big are they? Etc.

4. The repository is cloned to another machine with a properly configured git client. CRCs are computed for all files and compared to the CRCs computed on the other client machine.

5. File changes for step 3 are made on the second git client machine, committed, and pushed. CRCs are computed for all files.

6. On the first client machine, changes are pulled. The CRCs of all files are compared between the two clients.

7. The untrack and unmigrate commands are used to move all files from Git LFS to Git. Any issues are noted, and the Git LFS repository is examined. CRCs are computed and compared to the previous value.
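Two pieces of bookkeeping recur in the steps above: saving how long a Git operation took, and computing CRCs so they can be compared later. The following is a minimal sketch of how that might be done, not the step script itself. The function names timed, list_crcs, record_crcs and verify_crcs are hypothetical, the use of the cksum utility is an assumption, and so is the format of the .checksums file. It also assumes GNU find, sort and xargs, as found on Ubuntu.

```shell
# Hypothetical helpers; these are NOT the author's step script.

# Run a command and append "<label> <seconds>" to a log file.
# Usage: timed LABEL LOGFILE COMMAND [ARGS...]
timed() {
  timed_label="$1"
  timed_log="$2"
  shift 2
  timed_start=$(date +%s)
  "$@"
  timed_status=$?
  printf '%s %s\n' "$timed_label" "$(( $(date +%s) - timed_start ))" >> "$timed_log"
  return "$timed_status"
}

# Print "CRC SIZE PATH" for every regular file under a directory,
# skipping Git's own data and the checksum file itself.
list_crcs() {
  ( cd "$1" &&
    find . -type f ! -path './.git/*' ! -name .checksums -print0 |
      sort -z |
      xargs -0 -r cksum )
}

# Save the current CRCs to DIR/.checksums.
record_crcs() {
  list_crcs "$1" > "$1/.checksums"
}

# Compare the current CRCs with DIR/.checksums.
# Returns a non-zero status if any file was added, removed or changed.
verify_crcs() {
  list_crcs "$1" | diff -u "$1/.checksums" -
}
```

With these helpers, something like `timed push ~/timings.log git push` would record the duration of a push, `record_crcs .` would save checksums after files are copied in, and `verify_crcs .` on a fresh clone would report any file whose content differs.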

Organization

I defined 3 environment variables to make moving between directories easier and less confusing for readers.

These environment variables take advantage of the organizational similarity of the Git LFS evaluation setup between server and clients. The values shown were created by the setup_ and setup_ scripts.

    LFS_EVAL=/home/mslinn/lfs_eval
    LFS_DATA=$LFS_EVAL/eval_data
    LFS_SCENARIOS=$LFS_EVAL/scenarios

The clients stored evaluation data files and client-side copies of the repositories in $work/git/lfs_eval/.

I defined 3 environment variables to match the server-side environment variables.

    LFS_EVAL=/mnt/f/work/git/lfs_eval
    LFS_DATA=$LFS_EVAL/eval_data
    LFS_SCENARIOS=$LFS_EVAL/scenarios

    $LFS_DATA/
      step1/
        .checksums
        README.md
        pdf1.pdf
        video1.m4v
        video2.mov
        video3.avi
        video4.ogg
        zip1.zip
        zip2.zip
      step2/
        .checksums
        README.md
        pdf1.pdf
        video2.mov
        video3.avi
        zip1.zip
      step3/
        .checksums
        README.md
    $LFS_SCENARIOS/
      1/
        .git/
        .checksums
        README.md
        ...
      2/
        .git/
        .checksums
        README.md
        ...
      17/
        .git/
        .checksums
        README.md
        ...

To make all the Git repositories on server gojira:

    mslinn@gojira ~ $ setup_git_lfs_eval_server
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_1.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_2.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_3.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_4.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_5.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_6.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_7.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_8.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_9.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_10.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_11.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_12.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_13.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_14.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_15.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_16.git/
    Initialized empty shared Git repository in /home/mslinn/lfs_eval/scenarios/scenario_17.git/
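The messages above are what git init prints when it creates bare, shared repositories. The setup_git_lfs_eval_server script is not reproduced here, so the following is only a sketch of a loop that would produce similar output. The function name make_scenario_repos is hypothetical, and the use of the --bare and --shared options is inferred from the output rather than taken from the author's script.

```shell
# Hypothetical sketch; NOT the author's setup_git_lfs_eval_server script.
# Create bare, group-shared repositories scenario_1.git .. scenario_N.git
# under the given root directory.
# Usage: make_scenario_repos ROOT COUNT
make_scenario_repos() {
  repos_root="$1"
  repos_count="$2"
  mkdir -p "$repos_root" || return 1
  repos_i=1
  while [ "$repos_i" -le "$repos_count" ]; do
    git init --bare --shared "$repos_root/scenario_$repos_i.git" || return 1
    repos_i=$((repos_i + 1))
  done
}

# Example (assumes LFS_SCENARIOS is set as described above):
# make_scenario_repos "$LFS_SCENARIOS" 17
```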

Test Data

We need test data to ensure that Git LFS is functioning correctly. A minimum of 2 GB of binary files, each larger than 100 MB, is required to properly test Git LFS.

The test files are organized so Git and Git LFS operations can be tested. Large files need to be added, removed and updated. Old versions need to be retrievable.
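One way to confirm that a directory of test data is big enough is sketched below. The function name check_test_data and its parameters are hypothetical. Sizes are given in bytes so that the same check can be tried on small files, and it assumes GNU find, as found on Ubuntu. Small files such as README.md are ignored rather than treated as errors.

```shell
# Hypothetical helper: add up the sizes of all files under DIR that are
# larger than MIN_FILE_BYTES, print a summary, and succeed only if the
# sum is at least MIN_TOTAL_BYTES.
# Usage: check_test_data DIR MIN_FILE_BYTES MIN_TOTAL_BYTES
check_test_data() {
  find "$1" -type f -printf '%s\n' |
    awk -v min_file="$2" -v min_total="$3" '
      $1 > min_file { big++; total += $1 }
      END {
        printf "big_files=%d big_bytes=%.0f\n", big, total
        exit !(total >= min_total)
      }'
}

# Example, taking 100 MB as 100000000 bytes and 2 GB as 2000000000 bytes:
# check_test_data "$work/git/git_lfs_test_data" 100000000 2000000000
```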

For convenience, I wrote a script called git_lfs_test_data that downloads large files from several sources. The files are organized into subdirectories; each subdirectory contains the files that are added to the commit for each step of the test procedure.

Installation instructions are provided in Extra Git and Git LFS Commands.

About The Test Data

The script downloads 11 files, ranging in size from 102 MB to 398 MB. The downloaded files have filetypes that include avi, m4v, mov, ogg, pdf, and zip. These files are suitably licensed for testing purposes.

Big Buck Bunny is a set of CC BY 3.0 licensed videos that are free to share and adapt. These videos are renderings of a single original video, provided in a variety of formats and resolutions. The original video is a comedy about a fat rabbit taking revenge on three irritating rodents. The script downloads some Big Buck Bunny videos for use as large file content for evaluating Git LFS servers.

The script also downloads one large zip file from each of the following:

Large PDFs are downloaded from testfile.org.

The downloaded large files are placed in subdirectories called step1/ and step2/. The files in these subdirectories are used to update previously committed large files. Doing so will reveal any problems that might exist when revising a large file.

Using git_lfs_test_data

You should create an environment variable called work that points to where you want Git LFS testing to be done.

Also create a subdirectory called $work/git/.

This is where the Git LFS testing will be performed.
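As a minimal sketch, the variable and subdirectory could be set up as follows. The function name setup_work is hypothetical, and the default location of ~/work is only an example; use whatever location suits you.

```shell
# Hypothetical helper: define the work variable and create $work/git.
# Usage: setup_work [DIRECTORY]   (defaults to ~/work, an arbitrary example)
setup_work() {
  work="${1:-$HOME/work}"
  export work
  mkdir -p "$work/git"
}

# Example:
# setup_work "$HOME/work"
```

To make the variable available in new shells, a line such as `export work="$HOME/work"` could be added to ~/.bashrc.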

It takes several minutes for the git_lfs_test_data script to download the test files.

They should be saved in $work/git/git_lfs_test_data/.

The test scripts copy these files to your Git LFS test directory as required.

Here is an example of how to use the git_lfs_test_data script to download the test data. I stored the files in a new directory called $work/git/git_lfs_test_data on my local server gojira. The follow-on articles reference this directory, accessed via ssh.

Scroll to the end of the output to see the test data that we now have available to work with.

    mslinn@gojira ~ $ mkdir "$work/git/git_lfs_test_data"
    mslinn@gojira ~ $ cd "$work/git/git_lfs_test_data"
    mslinn@gojira ~ $ git_lfs_test_data
    Downloading BigBuckBunny_640x360.m4v as step1/video1.m4v
    ##################################################### 100.0%
    Downloading big_buck_bunny_480p_h264.mov as step1/video2.mov
    ##################################################### 100.0%
    Downloading big_buck_bunny_480p_stereo.avi as step1/video3.avi
    ##################################################### 100.0%
    Downloading big_buck_bunny_720p_stereo.ogg as step1/video4.ogg
    ##################################################### 100.0%
    Downloading enwik9.zip as step1/zip1.zip
    ##################################################### 100.0%
    Downloading rdf-files.tar.zip as step1/zip2.zip
    ##################################################### 100.0%
    Downloading 100MB-TESTFILE.ORG.pdf as step1/pdf1.pdf
    ##################################################### 100.0%
    Downloading 200MB.zip as step2/zip1.zip
    ##################################################### 100.0%
    Downloading big_buck_bunny_720p_h264.mov as step2/video2.mov
    ##################################################### 100.0%
    Downloading big_buck_bunny_720p_stereo.avi as step2/video3.avi
    ##################################################### 100.0%
    Downloading 200MB-TESTFILE.ORG.pdf as step2/pdf1.pdf
    ##################################################### 100.0%
    Download complete.
    Here are lists of downloaded files and sizes for each step, ordered by name.
    The last line in each listing shows the total size of the files.
    Step 1:
    103M pdf1.pdf
    116M video1.m4v
    238M video2.mov
    150M video3.avi
    188M video4.ogg
    308M zip1.zip
    154M zip2.zip
    1.3G .
    Step 2:
    205M pdf1.pdf
    398M video2.mov
    272M video3.avi
    200M zip1.zip
    1.1G .

The filetypes of these large files are: avi, m4v, mov, ogg, pdf, and zip.

Evaluation Repository Creation Script

I wrote the following script to prepare a consistent testbed for non-null Git LFS servers. The script creates a private git repository in your user account on GitHub. The commands in the script were discussed in Git LFS Tracking, Migration and Un-Migration.

The script relies on the GitHub CLI. If you want to use this script, please install the GitHub CLI first. Be sure to initialize the GitHub CLI before you run the script. GitHub CLI initialization allows you to run authenticated remote git commands from a console.
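A pre-flight check along the following lines can confirm that the GitHub CLI is installed and initialized before running anything that depends on it. This is a sketch, not part of the author's script; the function names require_cmd and check_gh_ready are hypothetical. The gh auth status and gh auth login subcommands are standard GitHub CLI commands.

```shell
# Hypothetical helper: fail with a message if a command is not on the PATH.
require_cmd() {
  command -v "$1" >/dev/null 2>&1 || {
    echo "Error: '$1' is not installed or not on the PATH" >&2
    return 1
  }
}

# Hypothetical pre-flight check for scripts that depend on the GitHub CLI.
check_gh_ready() {
  require_cmd git || return 1
  require_cmd gh || return 1
  # 'gh auth status' exits with a non-zero status when no account is logged in.
  gh auth status >/dev/null 2>&1 || {
    echo "Error: the GitHub CLI is not initialized; run 'gh auth login' first" >&2
    return 1
  }
}
```

A script could call `check_gh_ready || exit 1` near the top so that it stops early with a clear message instead of failing partway through.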

Installation instructions are provided in Extra Git and Git LFS Commands.

You will see this script in action when the Git LFS servers are evaluated in the follow-on articles.

Scenarios Considered

The following scenarios were considered for this evaluation.

Reviewers: I am most interested in your feedback about the value, relevance and feasibility of the scenarios. Please let me know of additional scenarios that might be of interest, along with suggested use cases.

No Git LFS Server

| Git Server | Scenario | Git LFS Protocol | Use Cases |
|---|---|---|---|
| None (bare repo) | 1 | local | Not functional. |
| None (bare repo) | 2 | SSH | |
| GitHub | 3 | local | |
| GitHub | 4 | SSH | |
| GitHub | 5 | http | |

Git LFS Test Server

Provides its own web server, which supports http and https.

No other protocols are supported.

| Git Server | Scenario | Git LFS Protocol | Use Cases |
|---|---|---|---|
| None (bare repo) | 6 | http | |
| GitHub | 7 | http | |

Giftless

| Git Server | Scenario | Git LFS Protocol | Use Cases |
|---|---|---|---|
| None (bare repo) | 8 | local | |
| None (bare repo) | 9 | SSH | |
| GitHub | 10 | local | |
| GitHub | 11 | SSH | |
| GitHub | 12 | http | |

Rudolfs

| Git Server | Scenario | Git LFS Protocol | Use Cases |
|---|---|---|---|
| None (bare repo) | 13 | local | |
| None (bare repo) | 14 | SSH | |
| GitHub | 15 | local | |
| GitHub | 16 | SSH | |
| GitHub | 17 | http | |
