Published 2024-01-27.



This is a quick refresher of the terms and symbols used in probability theory. You should at least glance through this material before attempting to read a technical description of stochastic models such as those used by Stable Diffusion.

Additional terms are defined in Large Language Model Notes. The glossary there relies on terms defined in this article, so read this one first.

I used Writing Math For Web Pages to format the mathematical expressions in this article using MathLive’s LaTeX Notes variant.

Definitions

- Random variable

- A random variable is a numerical quantity that is generated by a random experiment.

- Discrete random variable

- A random variable is called discrete if it has either a finite or a countable number of possible values.

- Continuous random variable

- A random variable is called continuous if its possible values contain a whole interval of numbers.

Diffusion probabilistic models, for example Stable Diffusion, use continuous random variables. In fact, these variables are absolutely continuous: their distributions can be described by probability density functions, which assign probabilities to intervals rather than to individual values.
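To make the idea of a density assigning probabilities to intervals concrete, here is a minimal sketch. It assumes a normal distribution and uses the standard library's `math.erf`; the function names `normal_cdf` and `interval_probability` are made up for this illustration.

```python
import math

def normal_cdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def interval_probability(a: float, b: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """P(a <= X <= b): the area under the density curve between a and b."""
    return normal_cdf(b, mu, sigma) - normal_cdf(a, mu, sigma)

# About 68% of the probability lies within one standard deviation of the mean.
p_one_sigma = interval_probability(-1.0, 1.0)

# A single point is an interval of zero width, so its probability is zero.
p_point = interval_probability(0.5, 0.5)
```

Note that the probability of any single exact value is zero; only intervals of nonzero width carry probability.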

- Likelihood

- A measure of goodness of fit for a model: how probable the observed data are under the model with given parameter values.

- Log-likelihood

- A term used in statistics, meaning the natural logarithm of the likelihood. Because the logarithm is an increasing function, the log-likelihood reaches its maximum at the same point as the likelihood itself. Log-likelihoods of independent observations can be summed, whereas likelihoods must be multiplied, so log-likelihood is easier to work with than likelihood.

- Markov chain

- A stochastic (random) model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event.

- Loss

- A measure of how far a model's prediction is from the expected value. A loss function computes this measure. A high value for the loss means the model performed poorly. Conversely, a low value for the loss means the model performed well.

- Tensor

- In the field of mathematics, tensors are used to describe physical properties, similar to how scalars and vectors are used. Tensors are actually a generalisation of scalars and vectors; a scalar is a zero-rank tensor, and a vector is a first-rank tensor.
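The relationship between likelihood and log-likelihood defined above can be checked with a small sketch. The coin-flip data, the Bernoulli model, and the function names are assumptions chosen for illustration only.

```python
import math

# Hypothetical data: 1 = heads, 0 = tails, from ten independent coin flips.
flips = [1, 0, 1, 1, 0, 1, 1, 1, 0, 1]

def likelihood(p, data):
    """Product of the per-flip probabilities, given P(heads) = p."""
    result = 1.0
    for x in data:
        result *= p if x == 1 else 1.0 - p
    return result

def log_likelihood(p, data):
    """Sum of the natural logs of the per-flip probabilities."""
    return sum(math.log(p if x == 1 else 1.0 - p) for x in data)

# Try candidate values of p from 0.01 to 0.99 and keep the best one.
candidates = [i / 100 for i in range(1, 100)]
best_by_likelihood = max(candidates, key=lambda p: likelihood(p, flips))
best_by_log_likelihood = max(candidates, key=lambda p: log_likelihood(p, flips))
```

Both searches select the same value of `p`, which is the fraction of heads in the data. With thousands of observations the product in `likelihood` would underflow towards zero, which is one practical reason to prefer the sum of logs.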

See also Large Language Model Notes.
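The Markov chain definition above can be illustrated with a short simulation. The two weather states and their transition probabilities are invented for this sketch; the only property that matters is that the next state is drawn using nothing but the current state.

```python
import random

# Hypothetical transition probabilities: TRANSITIONS[current][next].
TRANSITIONS = {
    "sunny": {"sunny": 0.9, "rainy": 0.1},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}

def next_state(current, rng):
    """Draw the next state using only the current state."""
    states = list(TRANSITIONS[current])
    weights = [TRANSITIONS[current][s] for s in states]
    return rng.choices(states, weights=weights, k=1)[0]

def simulate(start, steps, rng):
    """Return the start state followed by `steps` further states."""
    chain = [start]
    for _ in range(steps):
        chain.append(next_state(chain[-1], rng))
    return chain

rng = random.Random(0)  # seeded so that repeated runs give the same chain
chain = simulate("sunny", 10, rng)
```

`simulate` never looks further back than `chain[-1]`, which is exactly the "depends only on the previous state" condition in the definition.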

Probability and Statistics Symbols

| Symbol | Discussion |

|---|---|

| \displaystyle\sum_{} | The Greek letter capital sigma (\sum_{}) denotes summation: the expression that follows is evaluated for each value in turn, and the results are added together. |

| P(x) | The probability \displaystyle P(x) that a discrete random variable X will have the value x in one trial of an experiment. The value is always a real number between 0 and 1 inclusive. |

| \displaystyle \mathcal{N} |

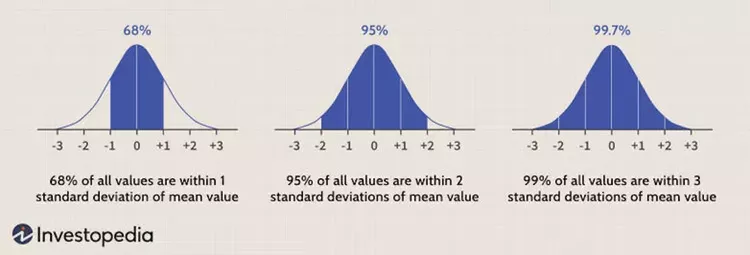

The calligraphic N symbol (\mathcal{N}) is used to represent a normal distribution,

also known as a Gaussian distribution.

In graphical form, the normal distribution appears as a bell curve. All normal distributions can be described by just two parameters: the mean (\mu) and the standard deviation (\sigma). Normal distributions are a probability distribution that are symmetric about the mean, showing that data near the mean are more frequent in occurrence than data far from the mean. For a normal distribution, the mean is 0 and the standard deviation is 1. |

| \mu | The lower-case Greek letter mu (\mu) represents the mean, also called the expectation value or expected value. The mean of a random variable may be interpreted as the average of the values assumed by the random variable in repeated trials of the experiment. For a discrete random variable, the generic formula is: \displaystyle\mu=\sum_{}xP(x) |

| {\sigma^2} | A lower-case sigma symbol, squared, (\sigma^2) represents the variance. The generic formula is: \sigma^2 = \displaystyle\left\lbrack\sum_{} x^2P(x)\right\rbrack-\mu^2 |

| \displaystyle\sigma | A lower-case sigma symbol (\displaystyle\sigma) represents the standard deviation. The generic formula is: \sigma = \sqrt{\displaystyle\left\lbrack\sum_{} x^2P(x)\right\rbrack-\mu^2} |

| \displaystyle\in | The element-of symbol (\in), which is derived from the lunate epsilon, represents set membership. Mathematicians read the symbol \in as "element of"; for example, they read 1 \in \mathbb{N} as "1 is an element of the natural numbers". |

| \displaystyle\Pi | The Greek letter capital pi (\displaystyle\Pi) denotes a product: the expression that follows is evaluated for each value in turn, and the results are multiplied together. Learn more about sum and product notation. |

| \displaystyle\varepsilon and \displaystyle\Epsilon | Both symbols are used to represent an error term. |

| \displaystyle\sim | A tilde (\displaystyle\sim) specifies the probability distribution of a random variable. \displaystyle e \sim \mathcal{N}(\mu, \sigma^2) means that e follows a normal (Gaussian) distribution with mean \displaystyle\mu and variance \displaystyle\sigma^2. |

| \displaystyle\vert | A vertical bar (\vert) is used to denote conditional probability. For example, P(A \mid B) means “the probability of A given that B occurred.” |

| \displaystyle\Vert | A pair of double vertical bars (\displaystyle \left\Vert\;\right\Vert) is used to denote the Euclidean norm of the contents. For example, \displaystyle \left\Vert{x}\right\Vert means “the Euclidean norm of x.” This can also be written as \displaystyle \left\Vert{x}\right\Vert_2. The Euclidean norm is the square root of the sum of squares; this is the formula for distance from the origin in a Cartesian coordinate system. The NumPy numpy.linalg.norm() function, called on a vector with default parameters, performs this computation. |
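The formulas in the table for \mu, \sigma^2, \sigma and the Euclidean norm can be worked through on a small example. This is a sketch using only the Python standard library; the fair six-sided die and the helper name `euclidean_norm` are assumptions made for illustration.

```python
import math

# Probability mass function of a fair six-sided die: P(x) = 1/6 for x = 1..6.
pmf = {x: 1 / 6 for x in range(1, 7)}

# Mean: sum of x * P(x) over all possible values.
mean = sum(x * p for x, p in pmf.items())

# Variance: (sum of x^2 * P(x)) minus the squared mean.
variance = sum(x**2 * p for x, p in pmf.items()) - mean**2

# Standard deviation: square root of the variance.
std_dev = math.sqrt(variance)

def euclidean_norm(vector):
    """Square root of the sum of the squared components."""
    return math.sqrt(sum(component**2 for component in vector))

# The vector (3, 4) is the classic 3-4-5 right triangle.
length = euclidean_norm([3.0, 4.0])
```

For the die, the mean is 3.5 and the variance is 35/12, or about 2.92. The norm of (3, 4) is 5.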