Published 2022-07-01.

Last modified 2022-12-23.

Time to read: 8 minutes.

This is another article in my ongoing saga of moving off AWS, which does not integrate security with real-time billing. I recently learned this the hard way: when my AWS account was hijacked, a huge bill was incurred in less than 15 minutes.

The world of pain that I experienced after the breach was inflicted by broken and wasteful AWS remedial processes, and an ineffective AWS management structure. This type of issue is only possible because of deficiencies in the AWS architecture. Those architectural deficiencies feel like the result of an exploitive mindset.

Demand Limits to Financial Liability From PaaS Vendors

PaaS vendors currently provide accounts with all services ready to go, without limit. That is good for the vendor's bottom line, but highly dangerous for their customers.

All PaaS customers should demand the ability to set firm limits on budgeted expenses, along with the ability to deny all services not explicitly authorized.

You can buy insurance against losses resulting from various calamities, but you cannot limit your financial liability with PaaS vendors.

Yet.

Website Hosting Market

Recently, I have spent a lot of time looking at options for hosting websites. I found the following types of products:

| | WordPress | General Web Server | VM | S3 Compatible |
|---|---|---|---|---|
| CDN | No | No | No | Yes |
| Speed | Slow to Medium | Slow to Medium | Slow to Fast | Fast |
| Reliability | Fair | Fair | Depends on you | Good |
| Financial Liability | Fixed monthly cost | Fixed monthly cost | Depends | Unlimited |
| Storage | Small | Small | Depends | Unlimited |
| CLI & API | No | No | Depends | Yes |

This website requires about 220 GB of storage. It has numerous images, in 2 versions: webp and png. So for this website, ‘small’ means less than 250 GB storage.

I decided to give the S3-compatible product Linode Storage a try.

Why Linode?

First, the positive: Linode is a pioneer in virtual computing. It has been on my short list of places for hosting my pet projects for many years. Prices have always been quite competitive, products solid, with good support ... and smart people have answered the phone when I have called. Being able to easily speak with a capable human provides huge value.

On the other hand, as I shall demonstrate in this article, Linode’s documentation is a significant barrier for customers considering adopting their services. I had to work a lot harder than I should have to get my evaluation website up and running. Hopefully, this document is complete enough that others can follow along and host their static websites on Linode Storage.

Acquired by Akamai

Linode was acquired by Akamai 3 months ago. Akamai is the original CDN, and their network is gigantic.

Linode does not yet offer a CDN product, but the person I spoke to at Linode when I started writing this article suggested that a CDN product from Linode based on Akamai’s network might be available soon. He also said that they were planning to work on a mechanism for limiting their customers’ financial liability in 2022.

This was music to my financially risk-averse ears!

The remainder of this article will take you through all the steps necessary to:

- Install and configure software tools on a WSL / WSL2 / Ubuntu computer

- Make an S3-compatible bucket and set it up to hold a website

- Upload the website, and easily handle mimetype issues

- Generate and install a free 4096-bit SSL wildcard certificate

- Get a security rating from Qualys / SSL Labs

Trialing Linode Storage

Installing s3cmd

I installed the recommended S3-compatible command-line program s3cmd on WSL2 / Ubuntu like this:

    $ yes | sudo apt install s3cmd
    Reading package lists... Done
    Building dependency tree... Done
    Reading state information... Done
    The following additional packages will be installed:
      python3-magic
    The following NEW packages will be installed:
      python3-magic s3cmd
    0 upgraded, 2 newly installed, 0 to remove and 0 not upgraded.
    Need to get 133 kB of archives.
    After this operation, 584 kB of additional disk space will be used.
    Get:1 http://archive.ubuntu.com/ubuntu jammy/main amd64 python3-magic all 2:0.4.24-2 [12.6 kB]
    Get:2 http://archive.ubuntu.com/ubuntu jammy/universe amd64 s3cmd all 2.2.0-1 [120 kB]
    Fetched 133 kB in 0s (278 kB/s)
    Selecting previously unselected package python3-magic.
    (Reading database ... 169821 files and directories currently installed.)
    Preparing to unpack .../python3-magic_2%3a0.4.24-2_all.deb ...
    Unpacking python3-magic (2:0.4.24-2) ...
    Selecting previously unselected package s3cmd.
    Preparing to unpack .../archives/s3cmd_2.2.0-1_all.deb ...
    Unpacking s3cmd (2.2.0-1) ...
    Setting up python3-magic (2:0.4.24-2) ...
    Setting up s3cmd (2.2.0-1) ...
    Processing triggers for man-db (2.10.2-1) ...
    Scanning processes...
    Scanning processor microcode...
    Scanning linux images...
    Failed to retrieve available kernel versions.
    Failed to check for processor microcode upgrades.
    No services need to be restarted.
    No containers need to be restarted.
    No user sessions are running outdated binaries.
    No VM guests are running outdated hypervisor (qemu) binaries on this host.

Here is the s3cmd help message:

$ s3cmd Usage: s3cmd [options] COMMAND [parameters]

S3cmd is a tool for managing objects in Amazon S3 storage. It allows for making and removing "buckets" and uploading, downloading and removing "objects" from these buckets.

Options: -h, --help show this help message and exit --configure Invoke interactive (re)configuration tool. Optionally use as '--configure s3://some-bucket' to test access to a specific bucket instead of attempting to list them all. -c FILE, --config=FILE Config file name. Defaults to $HOME/.s3cfg --dump-config Dump current configuration after parsing config files and command line options and exit. --access_key=ACCESS_KEY AWS Access Key --secret_key=SECRET_KEY AWS Secret Key --access_token=ACCESS_TOKEN AWS Access Token -n, --dry-run Only show what should be uploaded or downloaded but don't actually do it. May still perform S3 requests to get bucket listings and other information though (only for file transfer commands) -s, --ssl Use HTTPS connection when communicating with S3. (default) --no-ssl Don't use HTTPS. -e, --encrypt Encrypt files before uploading to S3. --no-encrypt Don't encrypt files. -f, --force Force overwrite and other dangerous operations. --continue Continue getting a partially downloaded file (only for [get] command). --continue-put Continue uploading partially uploaded files or multipart upload parts. Restarts parts/files that don't have matching size and md5. Skips files/parts that do. Note: md5sum checks are not always sufficient to check (part) file equality. Enable this at your own risk. --upload-id=UPLOAD_ID UploadId for Multipart Upload, in case you want continue an existing upload (equivalent to --continue- put) and there are multiple partial uploads. Use s3cmd multipart [URI] to see what UploadIds are associated with the given URI. --skip-existing Skip over files that exist at the destination (only for [get] and [sync] commands). -r, --recursive Recursive upload, download or removal. --check-md5 Check MD5 sums when comparing files for [sync]. (default) --no-check-md5 Do not check MD5 sums when comparing files for [sync]. Only size will be compared. May significantly speed up transfer but may also miss some changed files. 
-P, --acl-public Store objects with ACL allowing read for anyone. --acl-private Store objects with default ACL allowing access for you only. --acl-grant=PERMISSION:EMAIL or USER_CANONICAL_ID Grant stated permission to a given amazon user. Permission is one of: read, write, read_acp, write_acp, full_control, all --acl-revoke=PERMISSION:USER_CANONICAL_ID Revoke stated permission for a given amazon user. Permission is one of: read, write, read_acp, write_acp, full_control, all -D NUM, --restore-days=NUM Number of days to keep restored file available (only for 'restore' command). Default is 1 day. --restore-priority=RESTORE_PRIORITY Priority for restoring files from S3 Glacier (only for 'restore' command). Choices available: bulk, standard, expedited --delete-removed Delete destination objects with no corresponding source file [sync] --no-delete-removed Don't delete destination objects [sync] --delete-after Perform deletes AFTER new uploads when delete-removed is enabled [sync] --delay-updates *OBSOLETE* Put all updated files into place at end [sync] --max-delete=NUM Do not delete more than NUM files. [del] and [sync] --limit=NUM Limit number of objects returned in the response body (only for [ls] and [la] commands) --add-destination=ADDITIONAL_DESTINATIONS Additional destination for parallel uploads, in addition to last arg. May be repeated. --delete-after-fetch Delete remote objects after fetching to local file (only for [get] and [sync] commands). -p, --preserve Preserve filesystem attributes (mode, ownership, timestamps). Default for [sync] command. 
--no-preserve Don't store FS attributes --exclude=GLOB Filenames and paths matching GLOB will be excluded from sync --exclude-from=FILE Read --exclude GLOBs from FILE --rexclude=REGEXP Filenames and paths matching REGEXP (regular expression) will be excluded from sync --rexclude-from=FILE Read --rexclude REGEXPs from FILE --include=GLOB Filenames and paths matching GLOB will be included even if previously excluded by one of --(r)exclude(-from) patterns --include-from=FILE Read --include GLOBs from FILE --rinclude=REGEXP Same as --include but uses REGEXP (regular expression) instead of GLOB --rinclude-from=FILE Read --rinclude REGEXPs from FILE --files-from=FILE Read list of source-file names from FILE. Use - to read from stdin. --region=REGION, --bucket-location=REGION Region to create bucket in. As of now the regions are: us-east-1, us-west-1, us-west-2, eu-west-1, eu- central-1, ap-northeast-1, ap-southeast-1, ap- southeast-2, sa-east-1 --host=HOSTNAME HOSTNAME:PORT for S3 endpoint (default: s3.amazonaws.com, alternatives such as s3-eu- west-1.amazonaws.com). You should also set --host- bucket. --host-bucket=HOST_BUCKET DNS-style bucket+hostname:port template for accessing a bucket (default: %(bucket)s.s3.amazonaws.com) --reduced-redundancy, --rr Store object with 'Reduced redundancy'. Lower per-GB price. [put, cp, mv] --no-reduced-redundancy, --no-rr Store object without 'Reduced redundancy'. Higher per- GB price. [put, cp, mv] --storage-class=CLASS Store object with specified CLASS (STANDARD, STANDARD_IA, ONEZONE_IA, INTELLIGENT_TIERING, GLACIER or DEEP_ARCHIVE). [put, cp, mv] --access-logging-target-prefix=LOG_TARGET_PREFIX Target prefix for access logs (S3 URI) (for [cfmodify] and [accesslog] commands) --no-access-logging Disable access logging (for [cfmodify] and [accesslog] commands) --default-mime-type=DEFAULT_MIME_TYPE Default MIME-type for stored objects. Application default is binary/octet-stream. 
-M, --guess-mime-type Guess MIME-type of files by their extension or mime magic. Fall back to default MIME-Type as specified by --default-mime-type option --no-guess-mime-type Don't guess MIME-type and use the default type instead. --no-mime-magic Don't use mime magic when guessing MIME-type. -m MIME/TYPE, --mime-type=MIME/TYPE Force MIME-type. Override both --default-mime-type and --guess-mime-type. --add-header=NAME:VALUE Add a given HTTP header to the upload request. Can be used multiple times. For instance set 'Expires' or 'Cache-Control' headers (or both) using this option. --remove-header=NAME Remove a given HTTP header. Can be used multiple times. For instance, remove 'Expires' or 'Cache- Control' headers (or both) using this option. [modify] --server-side-encryption Specifies that server-side encryption will be used when putting objects. [put, sync, cp, modify] --server-side-encryption-kms-id=KMS_KEY Specifies the key id used for server-side encryption with AWS KMS-Managed Keys (SSE-KMS) when putting objects. [put, sync, cp, modify] --encoding=ENCODING Override autodetected terminal and filesystem encoding (character set). Autodetected: UTF-8 --add-encoding-exts=EXTENSIONs Add encoding to these comma delimited extensions i.e. (css,js,html) when uploading to S3 ) --verbatim Use the S3 name as given on the command line. No pre- processing, encoding, etc. Use with caution! --disable-multipart Disable multipart upload on files bigger than --multipart-chunk-size-mb --multipart-chunk-size-mb=SIZE Size of each chunk of a multipart upload. Files bigger than SIZE are automatically uploaded as multithreaded- multipart, smaller files are uploaded using the traditional method. SIZE is in Mega-Bytes, default chunk size is 15MB, minimum allowed chunk size is 5MB, maximum is 5GB. --list-md5 Include MD5 sums in bucket listings (only for 'ls' command). -H, --human-readable-sizes Print sizes in human readable form (eg 1kB instead of 1234). 
--ws-index=WEBSITE_INDEX Name of index-document (only for [ws-create] command) --ws-error=WEBSITE_ERROR Name of error-document (only for [ws-create] command) --expiry-date=EXPIRY_DATE Indicates when the expiration rule takes effect. (only for [expire] command) --expiry-days=EXPIRY_DAYS Indicates the number of days after object creation the expiration rule takes effect. (only for [expire] command) --expiry-prefix=EXPIRY_PREFIX Identifying one or more objects with the prefix to which the expiration rule applies. (only for [expire] command) --progress Display progress meter (default on TTY). --no-progress Don't display progress meter (default on non-TTY). --stats Give some file-transfer stats. --enable Enable given CloudFront distribution (only for [cfmodify] command) --disable Disable given CloudFront distribution (only for [cfmodify] command) --cf-invalidate Invalidate the uploaded filed in CloudFront. Also see [cfinval] command. --cf-invalidate-default-index When using Custom Origin and S3 static website, invalidate the default index file. --cf-no-invalidate-default-index-root When using Custom Origin and S3 static website, don't invalidate the path to the default index file. --cf-add-cname=CNAME Add given CNAME to a CloudFront distribution (only for [cfcreate] and [cfmodify] commands) --cf-remove-cname=CNAME Remove given CNAME from a CloudFront distribution (only for [cfmodify] command) --cf-comment=COMMENT Set COMMENT for a given CloudFront distribution (only for [cfcreate] and [cfmodify] commands) --cf-default-root-object=DEFAULT_ROOT_OBJECT Set the default root object to return when no object is specified in the URL. Use a relative path, i.e. default/index.html instead of /default/index.html or s3://bucket/default/index.html (only for [cfcreate] and [cfmodify] commands) -v, --verbose Enable verbose output. -d, --debug Enable debug output. --version Show s3cmd version (2.2.0) and exit. 
-F, --follow-symlinks Follow symbolic links as if they are regular files --cache-file=FILE Cache FILE containing local source MD5 values -q, --quiet Silence output on stdout --ca-certs=CA_CERTS_FILE Path to SSL CA certificate FILE (instead of system default) --ssl-cert=SSL_CLIENT_CERT_FILE Path to client own SSL certificate CRT_FILE --ssl-key=SSL_CLIENT_KEY_FILE Path to client own SSL certificate private key KEY_FILE --check-certificate Check SSL certificate validity --no-check-certificate Do not check SSL certificate validity --check-hostname Check SSL certificate hostname validity --no-check-hostname Do not check SSL certificate hostname validity --signature-v2 Use AWS Signature version 2 instead of newer signature methods. Helpful for S3-like systems that don't have AWS Signature v4 yet. --limit-rate=LIMITRATE Limit the upload or download speed to amount bytes per second. Amount may be expressed in bytes, kilobytes with the k suffix, or megabytes with the m suffix --no-connection-pooling Disable connection re-use --requester-pays Set the REQUESTER PAYS flag for operations -l, --long-listing Produce long listing [ls] --stop-on-error stop if error in transfer --content-disposition=CONTENT_DISPOSITION Provide a Content-Disposition for signed URLs, e.g., "inline; filename=myvideo.mp4" --content-type=CONTENT_TYPE Provide a Content-Type for signed URLs, e.g., "video/mp4"

Commands: Make bucket s3cmd mb s3://BUCKET Remove bucket s3cmd rb s3://BUCKET List objects or buckets s3cmd ls [s3://BUCKET[/PREFIX]] List all object in all buckets s3cmd la Put file into bucket s3cmd put FILE [FILE...] s3://BUCKET[/PREFIX] Get file from bucket s3cmd get s3://BUCKET/OBJECT LOCAL_FILE Delete file from bucket s3cmd del s3://BUCKET/OBJECT Delete file from bucket (alias for del) s3cmd rm s3://BUCKET/OBJECT Restore file from Glacier storage s3cmd restore s3://BUCKET/OBJECT Synchronize a directory tree to S3 (checks files freshness using size and md5 checksum, unless overridden by options, see below) s3cmd sync LOCAL_DIR s3://BUCKET[/PREFIX] or s3://BUCKET[/PREFIX] LOCAL_DIR or s3://BUCKET[/PREFIX] s3://BUCKET[/PREFIX] Disk usage by buckets s3cmd du [s3://BUCKET[/PREFIX]] Get various information about Buckets or Files s3cmd info s3://BUCKET[/OBJECT] Copy object s3cmd cp s3://BUCKET1/OBJECT1 s3://BUCKET2[/OBJECT2] Modify object metadata s3cmd modify s3://BUCKET1/OBJECT Move object s3cmd mv s3://BUCKET1/OBJECT1 s3://BUCKET2[/OBJECT2] Modify Access control list for Bucket or Files s3cmd setacl s3://BUCKET[/OBJECT] Modify Bucket Policy s3cmd setpolicy FILE s3://BUCKET Delete Bucket Policy s3cmd delpolicy s3://BUCKET Modify Bucket CORS s3cmd setcors FILE s3://BUCKET Delete Bucket CORS s3cmd delcors s3://BUCKET Modify Bucket Requester Pays policy s3cmd payer s3://BUCKET Show multipart uploads s3cmd multipart s3://BUCKET [Id] Abort a multipart upload s3cmd abortmp s3://BUCKET/OBJECT Id List parts of a multipart upload s3cmd listmp s3://BUCKET/OBJECT Id Enable/disable bucket access logging s3cmd accesslog s3://BUCKET Sign arbitrary string using the secret key s3cmd sign STRING-TO-SIGN Sign an S3 URL to provide limited public access with expiry s3cmd signurl s3://BUCKET/OBJECT <expiry_epoch|+expiry_offset> Fix invalid file names in a bucket s3cmd fixbucket s3://BUCKET[/PREFIX] Create Website from bucket s3cmd ws-create s3://BUCKET Delete Website s3cmd ws-delete 
s3://BUCKET Info about Website s3cmd ws-info s3://BUCKET Set or delete expiration rule for the bucket s3cmd expire s3://BUCKET Upload a lifecycle policy for the bucket s3cmd setlifecycle FILE s3://BUCKET Get a lifecycle policy for the bucket s3cmd getlifecycle s3://BUCKET Remove a lifecycle policy for the bucket s3cmd dellifecycle s3://BUCKET List CloudFront distribution points s3cmd cflist Display CloudFront distribution point parameters s3cmd cfinfo [cf://DIST_ID] Create CloudFront distribution point s3cmd cfcreate s3://BUCKET Delete CloudFront distribution point s3cmd cfdelete cf://DIST_ID Change CloudFront distribution point parameters s3cmd cfmodify cf://DIST_ID Display CloudFront invalidation request(s) status s3cmd cfinvalinfo cf://DIST_ID[/INVAL_ID]

For more information, updates and news, visit the s3cmd website: http://s3tools.org

Signup

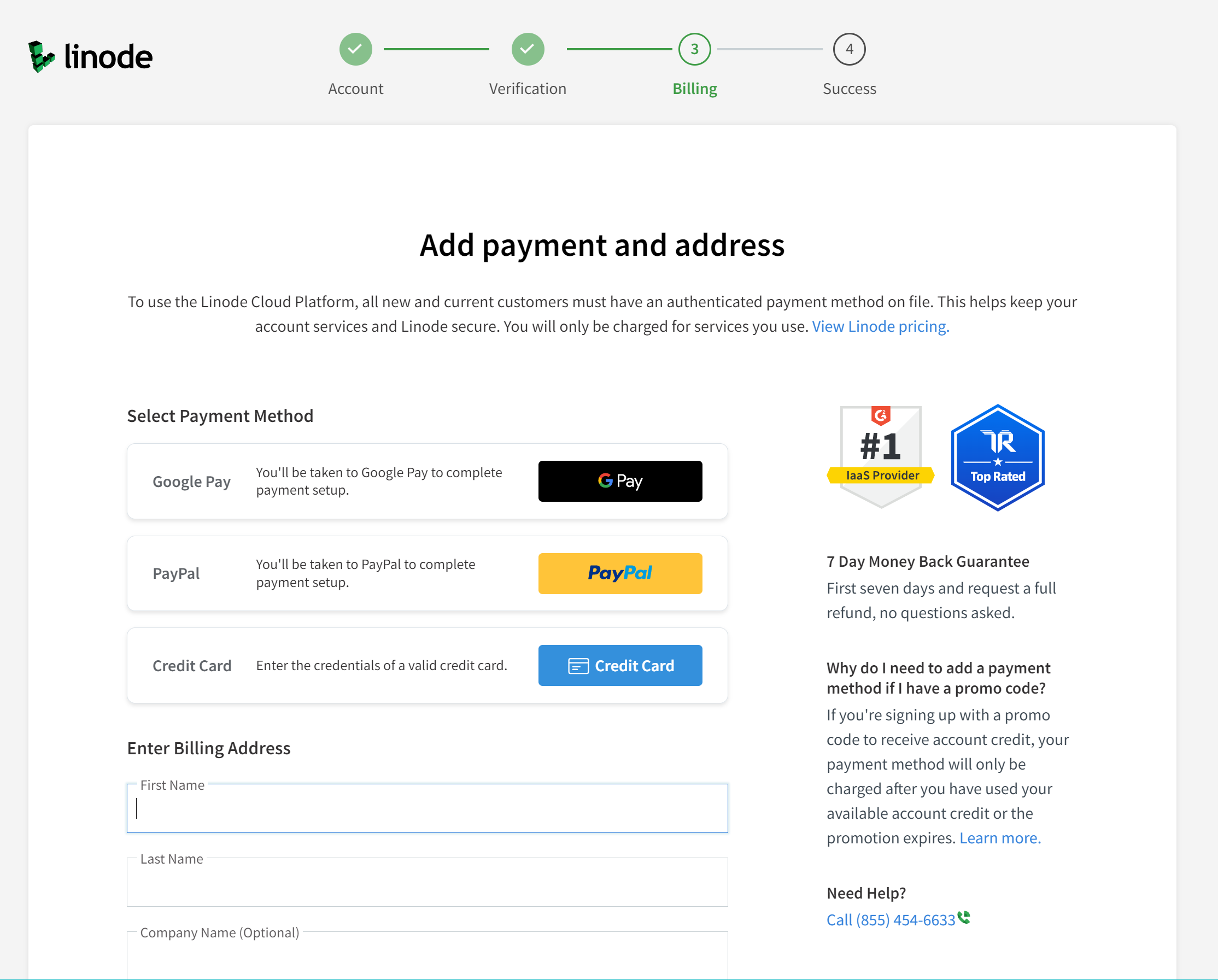

I went to the Linode signup page and signed up with my email. Using third parties for authentication lets them track you more easily, and introduces an unnecessary dependency.

The email signup procedure requires a mobile phone for SMS-based MFA. I would rather use a TOTP authenticator app than SMS for MFA.

I want to ensure that I limit my financial liability. One way of protecting myself is to limit the maximum amount that can be charged to the payment mechanism. Because PayPal has no maximum transaction limit, it is not a suitable choice for a service with unlimited financial liability. However, credit cards can have transaction limits set, and Google Pay has the following limits:

Maximum single transaction amount: $2,000 USD.

Daily maximum total transaction amount: $2,500 USD.

Up to 15 transactions per day.

Additional limits on the dollar amount or frequency of transactions may be imposed in accordance with the Google Pay Terms of Service. – From Google Pay Help

Here are additional limits for Google Pay. Because of prior good experiences with how credit card processors handled fraud, I decided to use a credit card with a low limit instead of Google Pay.

Generating Linode Access Keys

I followed the directions:

- Logged into the Cloud Manager.

- Selected the Object Storage menu item in the sidebar and clicked on the Access Keys tab.

- Clicked on the Create Access Key button, which displays the Create Access Key panel.

- Typed in the name mslinn as the label for the new access key.

- Clicked the Submit button to create the access key.

- The new access key and its secret key are displayed.

This is the only time that the secret key is visible.

I stored the access key and the secret key in my password manager, lastpass.com.

Configuring s3cmd

s3cmd is a Python program that works with all S3-compatible APIs, such as those provided by AWS, Linode Storage, Google Cloud Storage and DreamHost DreamObjects.

I intend to use its sync subcommand to synchronize the www.mslinn.com bucket contents in Linode Storage with the most recent version of this website generated by Jekyll.
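A minimal sketch of what that sync step might look like, assuming Jekyll writes the site to its default `_site` directory. Every option used appears in the s3cmd help message above, but the particular combination is an assumption, in particular `--no-mime-magic`, which is included on the guess that extension-based MIME types are preferable for a static site. The function name `sync_site` and the `S3CMD` override variable are hypothetical and exist only for this illustration.

```shell
# Program to run. S3CMD is a hypothetical override so the function can be
# exercised with a stub (for example S3CMD=echo) before touching a real bucket.
S3CMD=${S3CMD:-s3cmd}

# sync_site SITE_DIR BUCKET [extra s3cmd options...]
# Mirrors the contents of SITE_DIR to s3://BUCKET/ and removes remote
# objects that no longer exist locally.
sync_site() {
  site_dir=${1%/}   # drop one trailing slash so exactly one is added back below
  bucket=$2
  shift 2
  "$S3CMD" sync "$@" \
    --acl-public \
    --delete-removed \
    --guess-mime-type \
    --no-mime-magic \
    "${site_dir}/" "s3://${bucket}/"
}
```

Calling `sync_site _site www.mslinn.com --dry-run` first should list what would be uploaded or deleted without changing the bucket; dropping `--dry-run` performs the transfer.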

s3cmd needs to be configured for Linode before it can be used. Some configuration settings for Linode are non-obvious. The following should work for most users, with the caution that the access key and secret key are of course unique for every user.

    $ s3cmd --configure

    Enter new values or accept defaults in brackets with Enter.
    Refer to user manual for detailed description of all options.

    Access key and Secret key are your identifiers for Amazon S3. Leave them empty for using the env variables.
    Access Key [asdfasdf]: asdfasdfasdf
    Secret Key [asdfasdfasdf]: asdfasdfasdf
    Default Region [US]:

    Use "s3.amazonaws.com" for S3 Endpoint and not modify it to the target Amazon S3.
    S3 Endpoint [s3.amazonaws.com]: us-east-1.linodeobjects.com

    Use "%(bucket)s.s3.amazonaws.com" to the target Amazon S3. "%(bucket)s" and "%(location)s" vars can be used if the target S3 system supports dns based buckets.
    DNS-style bucket+hostname:port template for accessing a bucket [%(bucket)s.s3.amazonaws.com]: %(bucket)s.us-east-1.linodeobjects.com

    Encryption password is used to protect your files from reading by unauthorized persons while in transfer to S3
    Encryption password:
    Path to GPG program [/usr/bin/gpg]:

    When using secure HTTPS protocol all communication with Amazon S3 servers is protected from 3rd party eavesdropping. This method is slower than plain HTTP, and can only be proxied with Python 2.7 or newer
    Use HTTPS protocol [Yes]:

    On some networks all internet access must go through a HTTP proxy. Try setting it here if you can't connect to S3 directly
    HTTP Proxy server name:

    New settings:
      Access Key: asdfasdfasdf
      Secret Key: asdfasdfasdf
      Default Region: US
      S3 Endpoint: https://us-east-1.linodeobjects.com
      DNS-style bucket+hostname:port template for accessing a bucket: %(bucket)s.us-east-1.linodeobjects.com
      Encryption password:
      Path to GPG program: /usr/bin/gpg
      Use HTTPS protocol: True
      HTTP Proxy server name:
      HTTP Proxy server port: 0

    Test access with supplied credentials? [Y/n] n
    Save settings? [y/N] y
    Configuration saved to '/home/mslinn/.s3cfg'

I was unable to successfully test access. Two different errors appeared at different times:

    Please wait, attempting to list all buckets...
    ERROR: Test failed: 403 (SignatureDoesNotMatch)
    ERROR: Test failed: [Errno -2] Name or service not known

Eventually I saved the configuration without testing as shown above.

This created a file called $HOME/.s3cfg:

[default] access_key = asdfasdfasdf access_token = add_encoding_exts = add_headers = bucket_location = US ca_certs_file = cache_file = check_ssl_certificate = True check_ssl_hostname = True cloudfront_host = cloudfront.amazonaws.com connection_max_age = 5 connection_pooling = True content_disposition = content_type = default_mime_type = binary/octet-stream delay_updates = False delete_after = False delete_after_fetch = False delete_removed = False dry_run = False enable_multipart = True encoding = UTF-8 encrypt = False expiry_date = expiry_days = expiry_prefix = follow_symlinks = False force = False get_continue = False gpg_command = /usr/bin/gpg gpg_decrypt = %(gpg_command)s -d --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s gpg_encrypt = %(gpg_command)s -c --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s gpg_passphrase = guess_mime_type = True host_base = us-east-1.linodeobjects.com host_bucket = %(bucket)s.us-east-1.linodeobjects.com human_readablesizes = False invalidate_default_index_on_cf = False invalidate_default_index_root_on_cf = True invalidate_on_cf = False kms_key = limit = -1 limitrate = 0 list_md5 = False log_target_prefix = long_listing = False max_delete = -1 mime_type = multipart_chunksize_mb = 15 multipart_copy_chunksize_mb = 1024 multipart_max_chunks = 10000 preserve_attrs = True progress_meter = True proxy_host = proxy_port = 0 public_url_use_https = False put_continue = False recursive = False recv_chunk = 65536 reduced_redundancy = False requester_pays = False restore_days = 1 restore_priority = Standard secret_key = asdfasdfasdf send_chunk = 65536 server_side_encryption = False signature_v2 = False signurl_use_https = False simpledb_host = sdb.amazonaws.com skip_existing = False socket_timeout = 300 ssl_client_cert_file = ssl_client_key_file = stats = False stop_on_error = False storage_class = throttle_max = 100 upload_id = 
urlencoding_mode = normal use_http_expect = False use_https = True use_mime_magic = True verbosity = INFO website_endpoint = http://%(bucket)s.s3-website-%(location)s.amazonaws.com/ website_error = website_index = index.html
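The two Linode-specific values in this file, host_base and host_bucket, correspond to the `--host` and `--host-bucket` options shown in the s3cmd help message, so they can also be supplied per invocation instead of being stored. The following is a minimal sketch under the assumption that the `<cluster-id>.linodeobjects.com` hostname pattern holds for other clusters; only `us-east-1` appears in this article. The names `linode_s3cmd`, `LINODE_CLUSTER` and `S3CMD` are hypothetical.

```shell
# Program to run; S3CMD is a hypothetical override that allows stubbing.
S3CMD=${S3CMD:-s3cmd}

# Cluster ID used to build the endpoint hostnames (assumed naming pattern).
LINODE_CLUSTER=${LINODE_CLUSTER:-us-east-1}

# linode_s3cmd ARGS...
# Runs s3cmd against Linode Object Storage by passing the endpoint and the
# DNS-style bucket template on the command line.
linode_s3cmd() {
  "$S3CMD" \
    --host="${LINODE_CLUSTER}.linodeobjects.com" \
    --host-bucket="%(bucket)s.${LINODE_CLUSTER}.linodeobjects.com" \
    "$@"
}
```

For example, `linode_s3cmd ls` would list buckets. The access key and secret key still have to come from somewhere, such as the configuration file or the `--access_key` and `--secret_key` options.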

According to the docs (Access Buckets and Files through URLs), the configuration file needs website_endpoint: http://%(bucket)s.website-[cluster-url]/.

However, this is an error; instead of cluster-url, which might be https://us-east-1.linodeobjects.com, cluster-id should be used, which for me was simply us-east-1. Thus the endpoint for me, and everyone with a bucket at us-east-1 in Newark, New Jersey should be:

http://%(bucket)s.website-us-east-1.linodeobjects.com/
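The edit can also be scripted. This is a minimal sketch, assuming the configuration file contains a single line starting with `website_endpoint`, as the generated file does; the function name `set_website_endpoint` is hypothetical, and the `website-<cluster-id>.linodeobjects.com` pattern is only confirmed here for `us-east-1`.

```shell
# set_website_endpoint CLUSTER_ID [CONFIG_FILE]
# Rewrites the website_endpoint setting in an s3cmd configuration file so it
# uses the cluster ID (for example us-east-1), not the cluster URL.
set_website_endpoint() {
  cluster_id=$1
  cfg=${2:-$HOME/.s3cfg}
  endpoint="http://%(bucket)s.website-${cluster_id}.linodeobjects.com/"
  tmp=$(mktemp) || return 1
  # Only the website_endpoint line is changed; all other lines pass through.
  sed "s|^website_endpoint *=.*|website_endpoint = ${endpoint}|" "$cfg" > "$tmp" &&
    cat "$tmp" > "$cfg"   # writing through cat keeps the file's permissions
  status=$?
  rm -f "$tmp"
  return "$status"
}
```

Running `set_website_endpoint us-east-1` would update `$HOME/.s3cfg` in place; keeping a backup copy of the file first is prudent, since it also holds the access keys.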

After editing the file to manually change the value of website_endpoint, I tried to list the buckets, and that worked:

    $ s3cmd ls
    2022-07-01 17:32  s3://mslinn

linode-cli

Next I tried linode-cli.

$ pip install linode-cli Collecting linode-cli Downloading linode_cli-5.21.0-py2.py3-none-any.whl (204 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 204.2/204.2 kB 4.8 MB/s eta 0:00:00 Collecting terminaltables Downloading terminaltables-3.1.10-py2.py3-none-any.whl (15 kB) Collecting PyYAML Downloading PyYAML-6.0-cp310-cp310-manylinux_2_5_x86_64.manylinux1_x86_64.manylinux_2_12_x86_64.manylinux2010_x86_64.whl (682 kB) ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 682.2/682.2 kB 16.8 MB/s eta 0:00:00 Requirement already satisfied: requests in /home/mslinn/venv/default/lib/python3.10/site-packages (from linode-cli) (2.28.0) Requirement already satisfied: charset-normalizer~=2.0.0 in /home/mslinn/venv/default/lib/python3.10/site-packages (from requests->linode-cli) (2.0.12) Requirement already satisfied: idna<4,>=2.5 in /home/mslinn/venv/default/lib/python3.10/site-packages (from requests->linode-cli) (3.3) Requirement already satisfied: urllib3<1.27,>=1.21.1 in /home/mslinn/venv/default/lib/python3.10/site-packages (from requests->linode-cli) (1.26.9) Requirement already satisfied: certifi>=2017.4.17 in /home/mslinn/venv/default/lib/python3.10/site-packages (from requests->linode-cli) (2022.6.15) Installing collected packages: terminaltables, PyYAML, linode-cli Successfully installed PyYAML-6.0 linode-cli-5.21.0 terminaltables-3.1.10

The documentation fails to mention that the first time linode-cli is executed, and every time it is invoked with the configure subcommand, it attempts to open a web browser. WSL and WSL2 will not respond appropriately unless an X server is already running, for example GWSL.
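One way to avoid the surprise is to decide up front whether browser-based login has any chance of working. The sketch below is a heuristic only: it assumes that an empty `DISPLAY` (and `WAYLAND_DISPLAY`) means no graphical display is reachable from the shell, which may not hold on every WSL setup. The function name `auth_mode` is hypothetical; the `--token` option is the one mentioned in the CLI's own welcome text.

```shell
# auth_mode
# Prints "browser" when a graphical display appears to be available,
# otherwise "token". This is a heuristic, not a guarantee that a browser opens.
auth_mode() {
  if [ -n "${DISPLAY:-}" ] || [ -n "${WAYLAND_DISPLAY:-}" ]; then
    echo browser
  else
    echo token
  fi
}

# Possible use (left commented out so that sourcing this file runs nothing):
#   case $(auth_mode) in
#     browser) linode-cli configure ;;
#     token)   linode-cli configure --token ;;
#   esac
```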

$ linode-cli configure

Welcome to the Linode CLI. This will walk you through some initial setup.

The CLI will use its web-based authentication to log you in. If you prefer to supply a Personal Access Token, use linode-cli configure --token

Press enter to continue. This will open a browser and proceed with authentication. A browser should open directing you to this URL to authenticate:

https://login.linode.com/oauth/authorize?client_id=asdfasdfasdf&response_type=token&scopes=*&redirect_uri=http://localhost:45789

If you are not automatically directed there, please copy/paste the link into your browser to continue..

Configuring mslinn

Default Region for operations. Choices are: 1 - ap-west 2 - ca-central 3 - ap-southeast 4 - us-central 5 - us-west 6 - us-southeast 7 - us-east 8 - eu-west 9 - ap-south 10 - eu-central 11 - ap-northeast

Default Region (Optional): 7

Default Type of Linode to deploy. Choices are: 1 - g6-nanode-1 2 - g6-standard-1 3 - g6-standard-2 4 - g6-standard-4 5 - g6-standard-6 6 - g6-standard-8 7 - g6-standard-16 8 - g6-standard-20 9 - g6-standard-24 10 - g6-standard-32 11 - g7-highmem-1 12 - g7-highmem-2 13 - g7-highmem-4 14 - g7-highmem-8 15 - g7-highmem-16 16 - g6-dedicated-2 17 - g6-dedicated-4 18 - g6-dedicated-8 19 - g6-dedicated-16 20 - g6-dedicated-32 21 - g6-dedicated-48 22 - g6-dedicated-50 23 - g6-dedicated-56 24 - g6-dedicated-64 25 - g1-gpu-rtx6000-1 26 - g1-gpu-rtx6000-2 27 - g1-gpu-rtx6000-3 28 - g1-gpu-rtx6000-4

Default Type of Linode (Optional):

Default Image to deploy to new Linodes. Choices are: 1 - linode/almalinux8 2 - linode/almalinux9 3 - linode/alpine3.12 4 - linode/alpine3.13 5 - linode/alpine3.14 6 - linode/alpine3.15 7 - linode/alpine3.16 8 - linode/arch 9 - linode/centos7 10 - linode/centos-stream8 11 - linode/centos-stream9 12 - linode/debian10 13 - linode/debian11 14 - linode/debian9 15 - linode/fedora34 16 - linode/fedora35 17 - linode/fedora36 18 - linode/gentoo 19 - linode/kali 20 - linode/debian11-kube-v1.20.15 21 - linode/debian9-kube-v1.20.7 22 - linode/debian9-kube-v1.21.1 23 - linode/debian11-kube-v1.21.12 24 - linode/debian9-kube-v1.22.2 25 - linode/debian11-kube-v1.22.9 26 - linode/debian11-kube-v1.23.6 27 - linode/opensuse15.3 28 - linode/opensuse15.4 29 - linode/rocky8 30 - linode/slackware14.2 31 - linode/slackware15.0 32 - linode/ubuntu16.04lts 33 - linode/ubuntu18.04 34 - linode/ubuntu20.04 35 - linode/ubuntu21.10 36 - linode/ubuntu22.04 37 - linode/centos8 38 - linode/slackware14.1 39 - linode/ubuntu21.04

Default Image (Optional): Active user is now mslinn

Config written to /home/mslinn/.config/linode-cli

linode-cli configuration created this file:

    [DEFAULT]
    default-user = mslinn

    [mslinn]
    token = asdfasdfasdfasdfasdfasdf
    region = us-east

Right after I did the above, I was surprised to get the following email from Linode:

This is a notification to inform you that a device has been trusted to skip authentication on your Linode Account (mslinn). The request came from the following IP address: 70.53.179.203.

The device will not be prompted for a username or password for 30 days.

If this action did not originate from you, we recommend logging in and changing your password immediately. Also, as an extra layer of security, we highly recommend enabling two-factor authentication on your account. Please see the link below for more information on how to enable two-factor authentication:

Two-Factor Authentication

If you have any questions or concerns, please do not hesitate to contact us 24/7 by opening a support ticket from within the Linode Manager, giving us a call, or emailing support@linode.com.

Contact

Thank you,

The Linode.com Team

Contact Us

At this point, I began to suspect that Linode’s documentation had major deficiencies. Instead of merely configuring a command-line client, my IP was whitelisted unexpectedly. I have nothing polite to say about this.

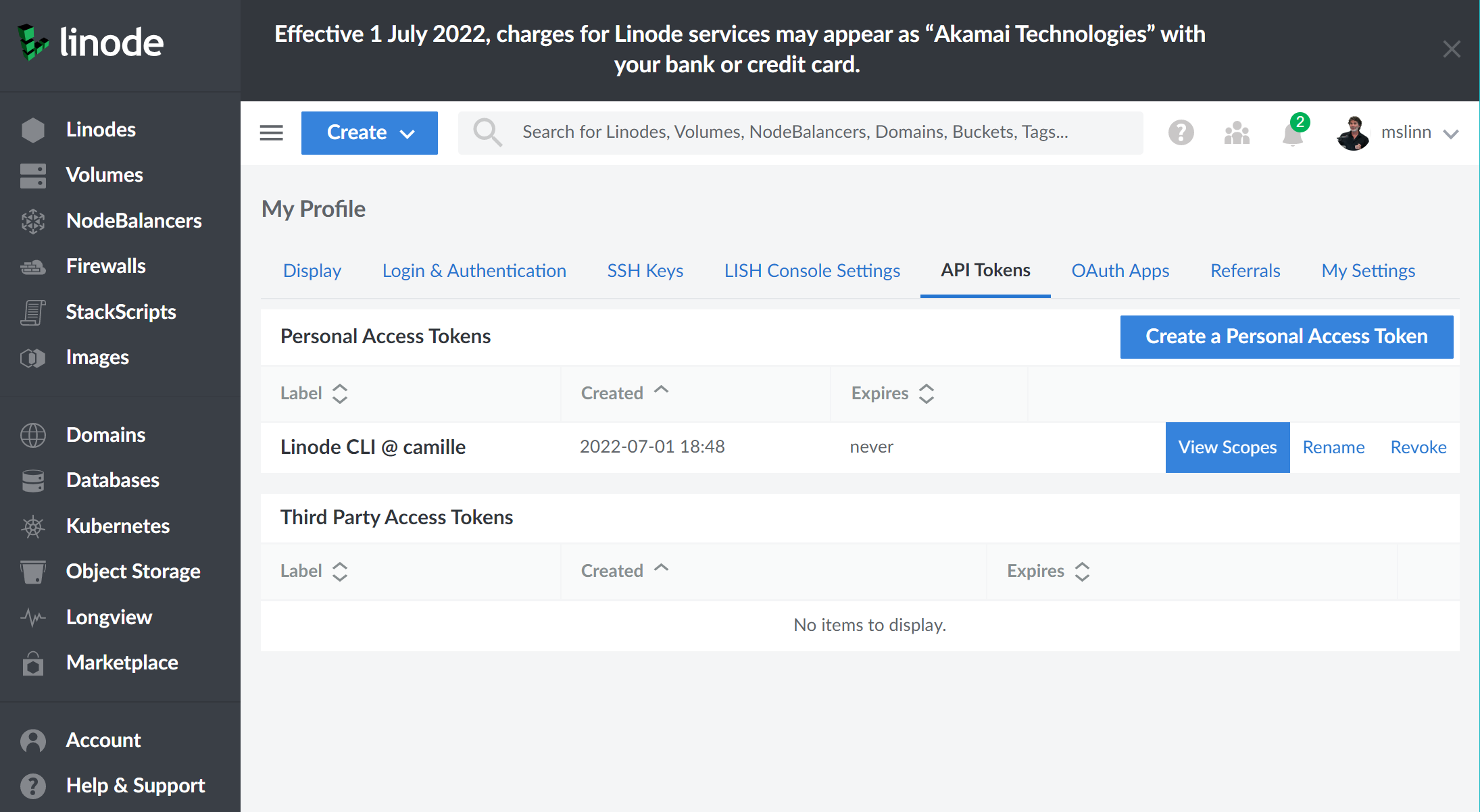

After poking around a bit, I discovered my API Tokens page on Linode.

It seems that the linode-cli created a personal access token with a label containing the name of the computer used: Linode CLI @ camille.

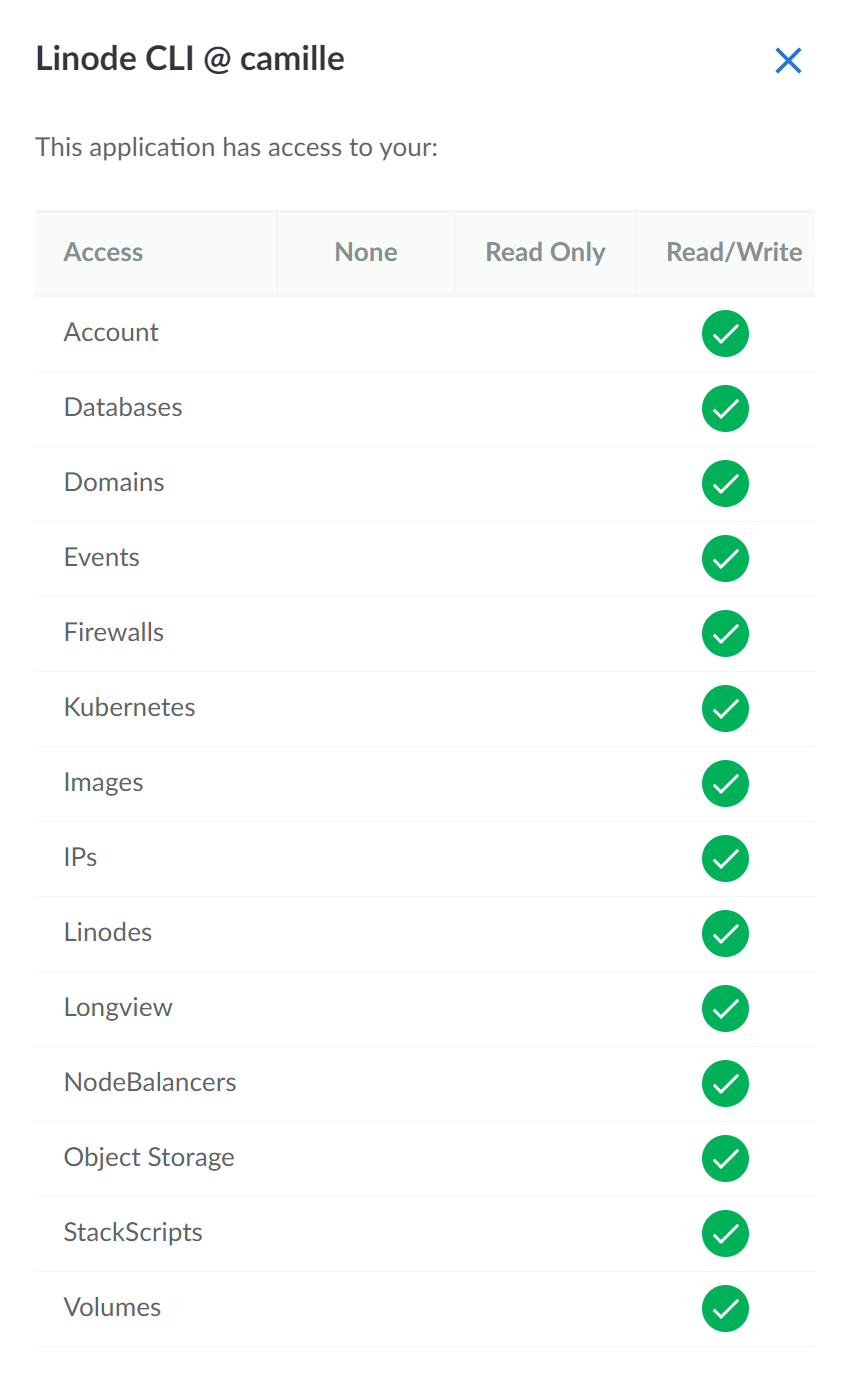

Clicking on the View Scopes button above displays the permissions granted to the token:

This personal access token is all-powerful.

The linode-cli quietly created a personal access token with all permissions enabled, did not warn the user, and did not say how to reduce or limit the permissions.

Security is enhanced when only the minimum permissions needed to accomplish a task are granted.

Instead, this token silently maximizes the financial risk to the person or entity who pays for the account.
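One way to reduce that risk would be to replace the all-powerful token with one limited to Object Storage. The following is only a sketch under stated assumptions: it assumes the `linode-cli profile token-create` action with `--label`, `--scopes` and `--expiry` options, and the `object_storage:read_write` scope name; check all of these against the current Linode API documentation before relying on them. The function name, label and expiry date are hypothetical. The function only prints the command so it can be reviewed first.

```shell
#!/usr/bin/env bash
# Sketch: print a command that would create a narrowly scoped personal access token.
# ASSUMPTIONS (not from the article): the token-create action, its option names,
# and the scope string. The label and expiry below are made-up examples.

scoped_token_cmd() {
  local label="$1" scopes="$2" expiry="$3"
  # Single quotes keep spaces and colons intact when the line is pasted into a shell.
  printf "linode-cli profile token-create --label '%s' --scopes '%s' --expiry '%s'\n" \
    "$label" "$scopes" "$expiry"
}

# Preview only; nothing is sent to Linode.
scoped_token_cmd 'website sync' 'object_storage:read_write' '2023-06-30T00:00:00'
```

After creating a limited token, the all-powerful `Linode CLI @ camille` token could be revoked from the API Tokens page.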

Creating the Website Bucket

The name of the bucket must exactly match the name of the subdomain that the website is served from. If you try to serve content from a misnamed bucket, you will not be able to apply an SSL certificate; instead, the certificate will be issued by linodeobjects.com.

I wanted to test using the subdomain linode.mslinn.com, and then if all was well, switch www.mslinn.com over to Linode Storage. Because buckets cannot be renamed, I had to make 2 buckets with identical contents, one called linode.mslinn.com and the other called www.mslinn.com.

If I decide to stay with Linode, I will delete the bucket I am using for testing, called linode.mslinn.com, and run this website from the www.mslinn.com bucket.

I created a website-enabled bucket called www.mslinn.com as follows:

$ s3cmd mb --acl-public s3://www.mslinn.com
Bucket 's3://www.mslinn.com/' created

$ s3cmd ls
2022-07-01 17:32  s3://www.mslinn.com

$ s3cmd ws-create \
    --ws-index=index.html \
    --ws-error=404.html \
    s3://www.mslinn.com
Bucket 's3://www.mslinn.com/': website configuration created.

The s3cmd ws-info subcommand displays the Linode Object Storage bucket URL as the Website endpoint:

$ s3cmd ws-info s3://www.mslinn.com
Bucket s3://www.mslinn.com/: Website configuration
Website endpoint: http://www.mslinn.com.website-us-east-1.linodeobjects.com/
Index document:   index.html
Error document:   404.html

Defining a CNAME for the Bucket

Although Linode provides a DNS Manager, I wanted to continue using Namecheap for DNS while testing Linode, so I navigated to ap.www.namecheap.com/Domains/DomainControlPanel/mslinn.com/advancedns and created CNAMEs like this:

linode.mslinn.com CNAME linode.mslinn.com.website-us-east-1.linodeobjects.com
www.mslinn.com CNAME www.mslinn.com.website-us-east-1.linodeobjects.com

Warning: if the CNAME points to a hostname that does not contain the word website (for example, www.mslinn.com.us-east-1.linodeobjects.com), the default index file and the error file will not be served automatically when expected.
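The naming rule can be checked mechanically before touching DNS. This is a minimal sketch, assuming the hostname pattern `<bucket>.website-<cluster>.linodeobjects.com` seen in the `s3cmd ws-info` output; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: derive and validate the CNAME target for a website-enabled bucket.
# The hostname pattern is copied from the ws-info output; function names are made up.

# Print the website endpoint hostname for a bucket in a given cluster.
website_cname_target() {
  local bucket="$1" cluster="$2"
  printf '%s.website-%s.linodeobjects.com\n' "$bucket" "$cluster"
}

# Succeed only if the target is the bucket's website endpoint,
# not the plain bucket endpoint (which lacks the "website-" label).
is_website_target() {
  local bucket="$1" target="$2"
  [[ "$target" == "$bucket".website-*.linodeobjects.com ]]
}

website_cname_target www.mslinn.com us-east-1

is_website_target www.mslinn.com www.mslinn.com.us-east-1.linodeobjects.com \
  || echo "warning: CNAME target is not a website endpoint"
```

The second call uses the misconfigured hostname from the warning, so it prints the warning message.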

Making a Free SSL Certificate

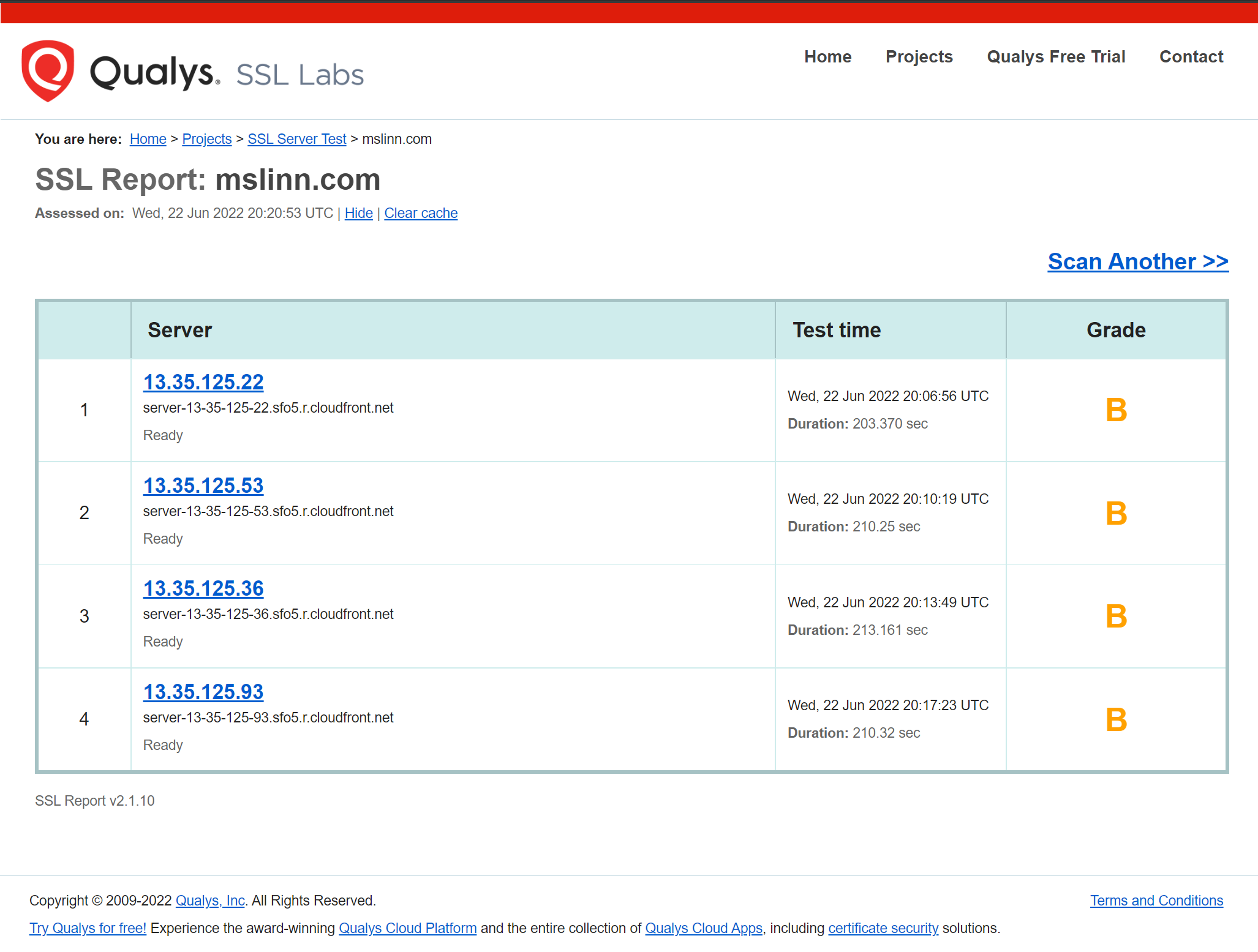

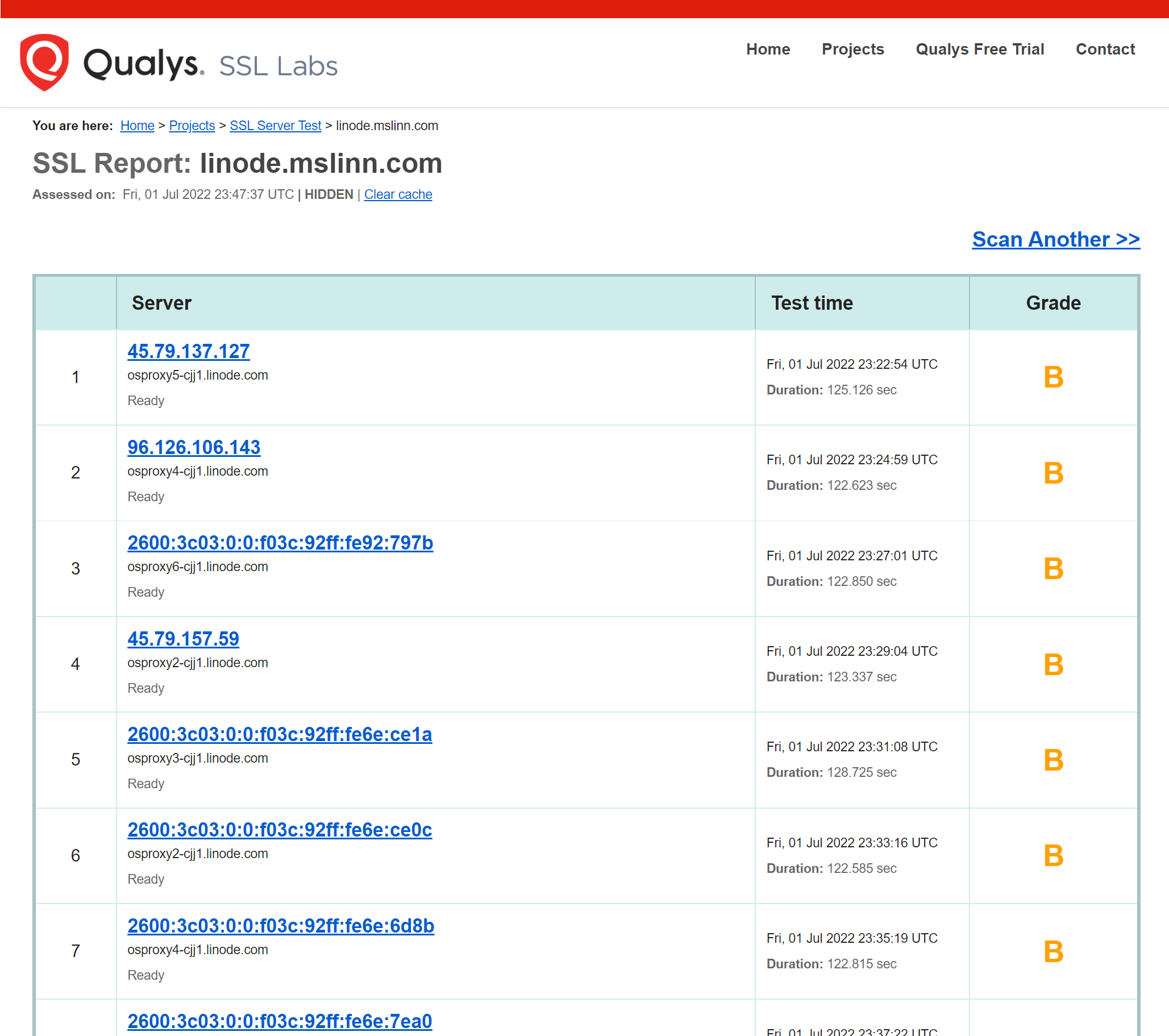

Qualys / SSL Labs rates the SSL security provided by AWS CloudFront using the AWS-generated SSL certificates with a “B” grade.

Let’s see if Linode Object Storage can host a more secure website when provided with a 4096-bit certificate generated by certbot/letsencrypt.

The conclusion of this article will reveal the answer.

Please read Creating and Renewing Letsencrypt Wildcard SSL Certificates for details.

Linode DNS Authentication

Eventually, if I stick with Linode, I could use the certbot DNS plugin for Linode, but I will not use it right now because I am trialing Linode.

Use of this plugin requires a configuration file containing Linode API credentials, obtained from your Linode account’s Applications & API Tokens page.

I stored the credentials in ~/.certbot/linode.ini:

# Linode API credentials used by Certbot
dns_linode_key = 0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ64
dns_linode_version = [<blank>|3|4]

Here is how to create an SSL certificate with certbot using Linode DNS authentication:

$ certbot certonly \
    --dns-linode \
    --dns-linode-credentials ~/.certbot/linode.ini \
    --rsa-key-size 4096 \
    -d mslinn.com \
    -d '*.mslinn.com' \
    --config-dir ~/.certbot/mslinn.com/config \
    --logs-dir ~/.certbot/mslinn.com/logs \
    --work-dir ~/.certbot/mslinn.com/work

Installing the Certificate

Certbot created 2 files: one containing the full certificate chain (fullchain.pem) and one containing my private key (privkey.pem).

I used bash environment variables called CERT_DIR, CERT and KEY to make the incantation convenient to type in.

CERT and KEY contain the contents of the files fullchain.pem and privkey.pem, respectively.

As you can see, the result was a valid (wildcard) SSL certificate.

$ CERT_DIR=/home/mslinn/.certbot/mslinn.com/config/live/mslinn.com

$ CERT="$( cat "$CERT_DIR/fullchain.pem" )"

$ KEY="$( cat "$CERT_DIR/privkey.pem" )"

$ linode-cli object-storage ssl-upload \
    us-east-1 www.mslinn.com \
    --certificate "$CERT" \
    --private_key "$KEY"
┌──────┐
│ ssl  │
├──────┤
│ True │
└──────┘
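Before uploading, it can be worth confirming that the certificate and the private key actually belong together. The sketch below is not part of the procedure described here; it assumes `openssl` is installed and compares the public key embedded in the first certificate of fullchain.pem with the public key derived from privkey.pem. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check that a certificate and a private key form a pair.
# Assumes the openssl command-line tool is available; function name is made up.

# cert_matches_key CERT_FILE KEY_FILE
# Returns 0 if the public keys match, 1 if they differ, 2 if a file cannot be read.
cert_matches_key() {
  local cert_file="$1" key_file="$2" cert_pub key_pub
  # x509 reads the first certificate in the file, which is the leaf in fullchain.pem.
  cert_pub="$(openssl x509 -in "$cert_file" -noout -pubkey 2>/dev/null)" || return 2
  key_pub="$(openssl pkey -in "$key_file" -pubout 2>/dev/null)" || return 2
  [ "$cert_pub" = "$key_pub" ]
}

# Example, using the CERT_DIR variable defined above:
#   cert_matches_key "$CERT_DIR/fullchain.pem" "$CERT_DIR/privkey.pem" \
#     && echo "certificate and key match"
```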

Syncing the Website Bucket

I wrote a bash script to build this Jekyll-generated website.

Jekyll places the generated website in a directory called _site.

I synchronized the contents of the freshly generated mslinn.com website, stored in _site/, with the bucket as follows. Note the trailing slash on _site/; it is significant.

$ s3cmd sync \
    --acl-public --delete-removed --guess-mime-type --quiet \
    _site/ \
    s3://www.mslinn.com
INFO: No cache file found, creating it.
INFO: Compiling list of local files...
INFO: Running stat() and reading/calculating MD5 values on 2157 files, this may take some time...
INFO: [1000/2157]
INFO: [2000/2157]
INFO: Retrieving list of remote files for s3://www.mslinn.com/_site ...
INFO: Found 2157 local files, 0 remote files
INFO: Verifying attributes...
INFO: Summary: 2137 local files to upload, 20 files to remote copy, 0 remote files to delete
... lots more output ...
Done. Uploaded 536630474 bytes in 153.6 seconds, 3.33 MB/s.
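The trailing slash matters because s3cmd, like rsync, uploads the contents of `_site/` but would upload `_site` as a directory, placing every file under a `_site/` prefix in the bucket. A small wrapper can guard against forgetting it. This is a sketch only; the function names are hypothetical, and `preview_sync` relies on s3cmd's `--dry-run` option to show what would change without uploading anything.

```shell
#!/usr/bin/env bash
# Sketch: make sure the sync source always ends with a slash, then preview the sync.
# Function names are made up for illustration.

# Print the directory name with a trailing slash.
with_trailing_slash() {
  local dir="${1%/}"   # drop one trailing slash if present
  printf '%s/\n' "$dir"
}

# Show what s3cmd would upload or delete, without changing the bucket.
preview_sync() {
  local src
  src="$(with_trailing_slash "$1")"
  s3cmd sync --dry-run --acl-public --delete-removed --guess-mime-type "$src" "$2"
}

# Example:
#   preview_sync _site s3://www.mslinn.com
```

Running the preview first is especially useful with `--delete-removed`, since a wrong source directory could otherwise remove files from the bucket.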

Originally, I did not provide the --acl-public option to s3cmd sync.

That meant the files in the bucket were all private – the opposite of what is required for a website.

Here is the incantation for making all the files in the bucket publicly readable:

$ s3cmd setacl s3://www.mslinn.com/ \
    --acl-public --recursive --quiet

Linode’s S3 implementation faithfully mirrors a problem that AWS S3 has.

CSS files are automatically assigned the MIME type text/plain, instead of text/css.

$ curl -sI http://linode.mslinn.com/assets/css/style.css | \
    grep Content-Type
Content-Type: text/plain

I re-uploaded the CSS files with the proper MIME type using this incantation:

$ cd _site

$ find . -name '*.css' -exec \
    s3cmd put -P --mime-type=text/css {} s3://www.mslinn.com/{} \;

It should be possible to provide a MIME-type mapping somehow! Linode, if you are reading this, please provide an extension to the S3 functionality, so customers could go beyond AWS's bugs.
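Until something like that exists, a client-side mapping is one possible workaround. The sketch below is illustrative only: the extension table is a small, hand-picked assumption, and the function names are hypothetical. It prints the `s3cmd put` commands instead of running them, so the result can be inspected first.

```shell
#!/usr/bin/env bash
# Sketch: choose a MIME type from the file extension and preview the uploads.
# The mapping table is a hand-picked example, not a Linode or s3cmd feature.

# Print the MIME type for a file name, based on its extension.
mime_for() {
  case "${1##*.}" in
    css)  echo text/css ;;
    html) echo text/html ;;
    js)   echo text/javascript ;;
    png)  echo image/png ;;
    svg)  echo image/svg+xml ;;
    webp) echo image/webp ;;
    *)    echo application/octet-stream ;;
  esac
}

# put_with_mime BUCKET FILE...
# Print one s3cmd put command per file. This is a preview: the printed lines
# are not shell-quoted, so file names containing spaces need extra care.
put_with_mime() {
  local bucket="$1" file key
  shift
  for file in "$@"; do
    key="${file#./}"   # find prints ./path; drop the leading ./
    echo s3cmd put -P --mime-type="$(mime_for "$file")" "$file" "s3://$bucket/$key"
  done
}

put_with_mime www.mslinn.com ./assets/css/style.css
```

Removing the `echo` in `put_with_mime` would perform the uploads instead of printing them.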

The Result

Success! The same content can be fetched with 3 different URLs:

- https://linode.mslinn.com uses the free wildcard certificate that was created above.

- http://linode.mslinn.com.website-us-east-1.linodeobjects.com works without an SSL certificate.

- https://linode.mslinn.com.website-us-east-1.linodeobjects.com uses a certificate from us-east-1.linodeobjects.com, which shows as an error, as it should. The error would be prevented if the certificate was a wildcard certificate issued by linodeobjects.com instead.

Qualys / SSL Labs rated the site on Linode Storage with the Let’s Encrypt SSL certificate, and gave it the same rating as when the site was hosted on AWS S3 / CloudFront.

BTW, you can test the date range of a certificate with this incantation:

$ curl https://linode.mslinn.com -vI --stderr - | grep 'date:'
*  start date: Nov 25 17:46:07 2022 GMT
*  expire date: Feb 23 17:46:06 2023 GMT
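The two dates can also be turned into a number of days, which is handy for a renewal reminder. This is a minimal sketch assuming GNU `date` (the `-d` option is not available in BSD/macOS `date`); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: whole days between two timestamps in the format printed by curl.
# Assumes GNU date; the function name is made up.

# days_between START END
days_between() {
  local start_s end_s
  start_s="$(date -u -d "$1" +%s)" || return 1
  end_s="$(date -u -d "$2" +%s)" || return 1
  echo $(( (end_s - start_s) / 86400 ))
}

# Validity period of the certificate shown above.
days_between "Nov 25 17:46:07 2022 GMT" "Feb 23 17:46:06 2023 GMT"
```

For the dates above the result is 89 whole days, because the certificate expires one second short of 90 days after it was issued. Passing the current time as START, for example `"$(date -u)"`, gives the days remaining.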

The site feels quite responsive. 😁