Published 2021-04-14.

Last modified 2021-05-19.

Time to read: 9 minutes.

posts collection, categorized under AWS, AWS Lambda, Azure, Django, Python, Serverless, Zappa, e-commerce.

As readers of this blog know, I have been chronicling my adventure into Python-powered e-commerce for several months. I have been focusing on Django in general, and Django-Oscar in particular. Webapps made with this technology are almost exclusively run on dedicated real or virtual machines. Serverless computing is a method of providing backend services on an as-used basis. AWS Lambda is the best-known example of serverless computing, and it combines nicely with a CDN like AWS CloudFront.

This article discusses 3 goals for an e-commerce system. Two of them are provided by the technology behind serverless webapps:

- Enormous and instantaneous scalability.

- Pay-as-you-go without an up-front cost commitment.

I have one more goal: very low latency for online shoppers.

The Big Picture

AWS Lambda consists of two main parts: the Lambda service which manages the execution requests, and the Amazon Linux micro virtual machines provisioned using AWS Firecracker, which actually runs the code.

A Firecracker VM is started the first time a given Lambda function receives an execution request (the so-called “Cold Start”), and as soon as the VM starts, it begins to poll the Lambda service for messages. When the VM receives a message, it runs your function code handler, passing the received message JSON to the function as the event object.

Thus every time the Lambda service receives a Lambda execution request, it checks if there is a Firecracker microVM available to manage the execution request. If so, it delivers the message to the VM to be executed. In contrast, if no available Firecracker VM is found, it starts a new VM to manage the message.

Each VM executes one message at a time, so if a lot of concurrent requests are sent to the Lambda service, for example due to a traffic spike received by an API gateway, several new Firecracker VMs will be started to manage the requests and the average latency of the requests will be higher since each VM takes roughly a second to start.

– From How to run any programming language on AWS Lambda: Custom Runtimes by Matteo Moroni.
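The cold start behavior described in the quote can be seen from inside a function. Here is a minimal sketch, assuming the standard Python handler signature of `handler(event, context)`; the names `_invocations` and `cold_start` are my own, used for illustration only. Module-level code runs once when the microVM starts, while the handler runs once per message.

```python
import json
import time

# Module-level code runs once per microVM, during the cold start.
_STARTED_AT = time.time()
_invocations = 0  # hypothetical counter, kept only for illustration


def handler(event, context):
    """Runs once per message; `event` is the decoded message JSON."""
    global _invocations
    _invocations += 1
    return {
        "statusCode": 200,
        "body": json.dumps({
            # True only for the first message handled by this microVM.
            "cold_start": _invocations == 1,
            "invocations": _invocations,
            "vm_age_seconds": round(time.time() - _STARTED_AT, 3),
            "echo": event,
        }),
    }
```

Calling the handler twice in the same process imitates a warm microVM: only the first call reports a cold start.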

AWS Lambda Limits

AWS Lambda programs have access to considerable resources, enough for most e-commerce stores. The AWS Lambda runtime environment has the following limitations, some of which can be improved upon with some work:

- The disk space (ephemeral) is limited to 512 MB.

- The default deployment package size is 50 MB.

- The memory range is from 128 to 3008 MB.

- The maximum execution timeout for a function is 15 minutes.

- Request and response (synchronous calls) body payload size can be up to 6 MB.

- Event request (asynchronous calls) body can be up to 128 KB.
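The payload limits are the ones most likely to bite a webapp, so it can be worth checking them before invoking a function. The following is a rough sketch that uses the figures listed above; the function names are hypothetical, and AWS may have changed the limits since this was written.

```python
import json

# Limits as listed in this article; check the current AWS quotas before relying on them.
SYNC_PAYLOAD_LIMIT = 6 * 1024 * 1024   # 6 MB, synchronous request or response
ASYNC_EVENT_LIMIT = 128 * 1024         # 128 KB, asynchronous event


def payload_size(payload) -> int:
    """Size in bytes of the payload once serialized to UTF-8 encoded JSON."""
    return len(json.dumps(payload).encode("utf-8"))


def fits_limit(payload, asynchronous: bool = False) -> bool:
    """Report whether the payload is within the limit for the invocation type."""
    limit = ASYNC_EVENT_LIMIT if asynchronous else SYNC_PAYLOAD_LIMIT
    return payload_size(payload) <= limit
```

A payload of about 200 KB, for example, fits a synchronous call but is too large for an asynchronous event.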

CloudFront

Brian Caffey wrote Building a Django application on AWS with Cloud Development Kit (CDK). The website Mr. Caffey's article discusses does not use Lambda; instead, his website is always running. The article is informative and well thought out, but it is AWS-specific and does not discuss serverless architecture.

For me, the most interesting part of Mr. Caffey's article is that it mentions using 3 origins with AWS CloudFront: (1) an origin for ALB (for hosting the Django API), (2) an origin for the S3 website (static Vue.js site), and (3) an S3 origin for Django assets. Mr. Caffey does not say why he used 3 origins, but feeding one CloudFront distribution from multiple origins would mean that all of their content would appear on the same Internet subdomain.

This means that the extra HTTP handshaking required for certain CORS (cross-origin resource sharing) requests between subdomains would be avoided; specifically, there would be no need for pre-flight requests. This would make the website seem noticeably faster if users did lots of content editing and/or transactions with the website. My own pet project has users creating and modifying content, and purchasing products, so removing the need for the CORS handshakes would be a win. In addition, the end user's web browser could reuse the same HTTP connection, speeding up even non-cacheable requests.

Tamás Sallai wrote How to route to multiple origins with CloudFront – Set up path-based routing with Terraform. Mr. Sallai is a prolific writer!
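To make path-based routing concrete, here is a small model of how one distribution could choose among the 3 origins that Mr. Caffey describes. This is only a sketch: the path patterns and origin names are my own assumptions, and `fnmatchcase` merely approximates CloudFront's wildcard matching.

```python
from fnmatch import fnmatchcase

# Ordered cache behaviors; the first matching pattern wins.
# The patterns and origin names are hypothetical.
BEHAVIORS = [
    ("/api/*", "alb-django-api"),
    ("/static/*", "s3-django-assets"),
]
DEFAULT_ORIGIN = "s3-vue-site"  # the default behavior catches everything else


def pick_origin(path: str) -> str:
    """Return the name of the origin that would serve the given request path."""
    for pattern, origin in BEHAVIORS:
        if fnmatchcase(path, pattern):
            return origin
    return DEFAULT_ORIGIN
```

Because every path is served from the same domain, the browser sees a single origin and never needs a pre-flight request.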

Edge Computing

Performing computations and serving assets from a nearby point of presence minimizes latency for end users. E-commerce customers much prefer online stores that respond quickly. Edge computing can deliver that experience world-wide, and developers can deploy their work from wherever they are.

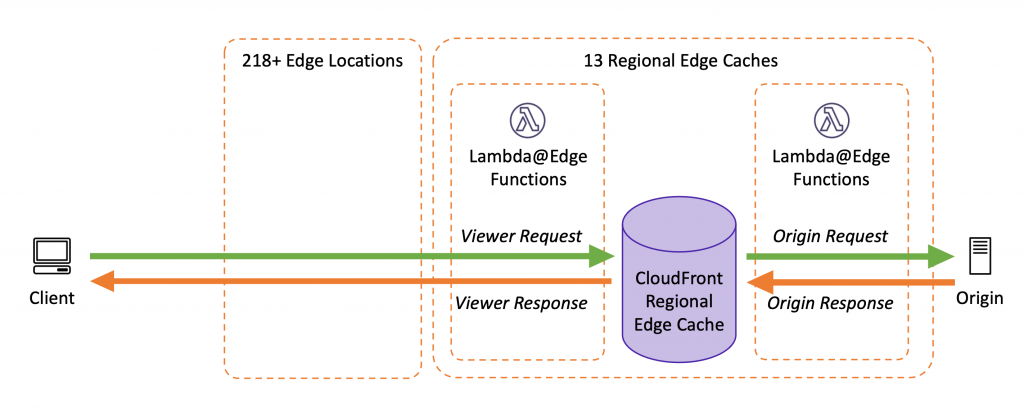

AWS Lambda@Edge runs the Lambda computation in one of 13 regional AWS points of presence, one hop removed from the CloudFront edge locations, or at least in the same availability zone as the CloudFront point of presence. Distributed database issues would need to be addressed before significant benefits would accrue from implementing this decentralized architecture. Unfortunately, Lambda@Edge has some significant restrictions that prevent it from running nontrivial Django apps.

Lambda@Edge Restrictions

From requirements and restrictions on using Lambda functions with CloudFront, it is apparent that it is not possible to run non-trivial Django apps securely at the edge with good performance.

- You can add triggers only for functions in the US East (N. Virginia) Region.

- You can’t configure your Lambda function to access resources inside your VPC.

- AWS Lambda environment variables are not supported.

- Lambda functions with AWS Lambda layers are not supported.

- Using AWS X-Ray is not supported.

- AWS Lambda reserved concurrency and provisioned concurrency are not supported.

- Lambda functions defined as container images are not supported.

Until such time as Lambda@Edge removes the above restrictions, Django webapps will continue to be deployed as centralized webapps, which means that ultra-low latency is not possible world-wide.
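For contrast, this is the kind of small job that Lambda@Edge handles well. The sketch below assumes the function is attached to a CloudFront request trigger and receives the request under `event["Records"][0]["cf"]["request"]`; the rewrite rule and the `DEFAULT_DOCUMENT` name are hypothetical. Settings are hard-coded because environment variables are not available.

```python
# Environment variables are not supported, so settings are plain constants.
DEFAULT_DOCUMENT = "index.html"  # hypothetical setting


def handler(event, context):
    """Rewrite directory-style URIs so that they point at a default document."""
    request = event["Records"][0]["cf"]["request"]
    if request["uri"].endswith("/"):
        request["uri"] += DEFAULT_DOCUMENT
    # Returning the request tells CloudFront to continue processing it.
    return request
```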

CloudFront Functions

CloudFront Functions are closer to the user, but have even more restrictions than Lambda@Edge. Alas, CloudFront Functions do not seem likely to be able to support significant computation any time soon.

Infrastructure as Code (IaC)

– From CloudFormation, Terraform, or CDK? A guide to IaC on AWS by Jared Short, published by acloudguru.com.

– from Stackshare.io

Infographic: Lambda Framework Comparison

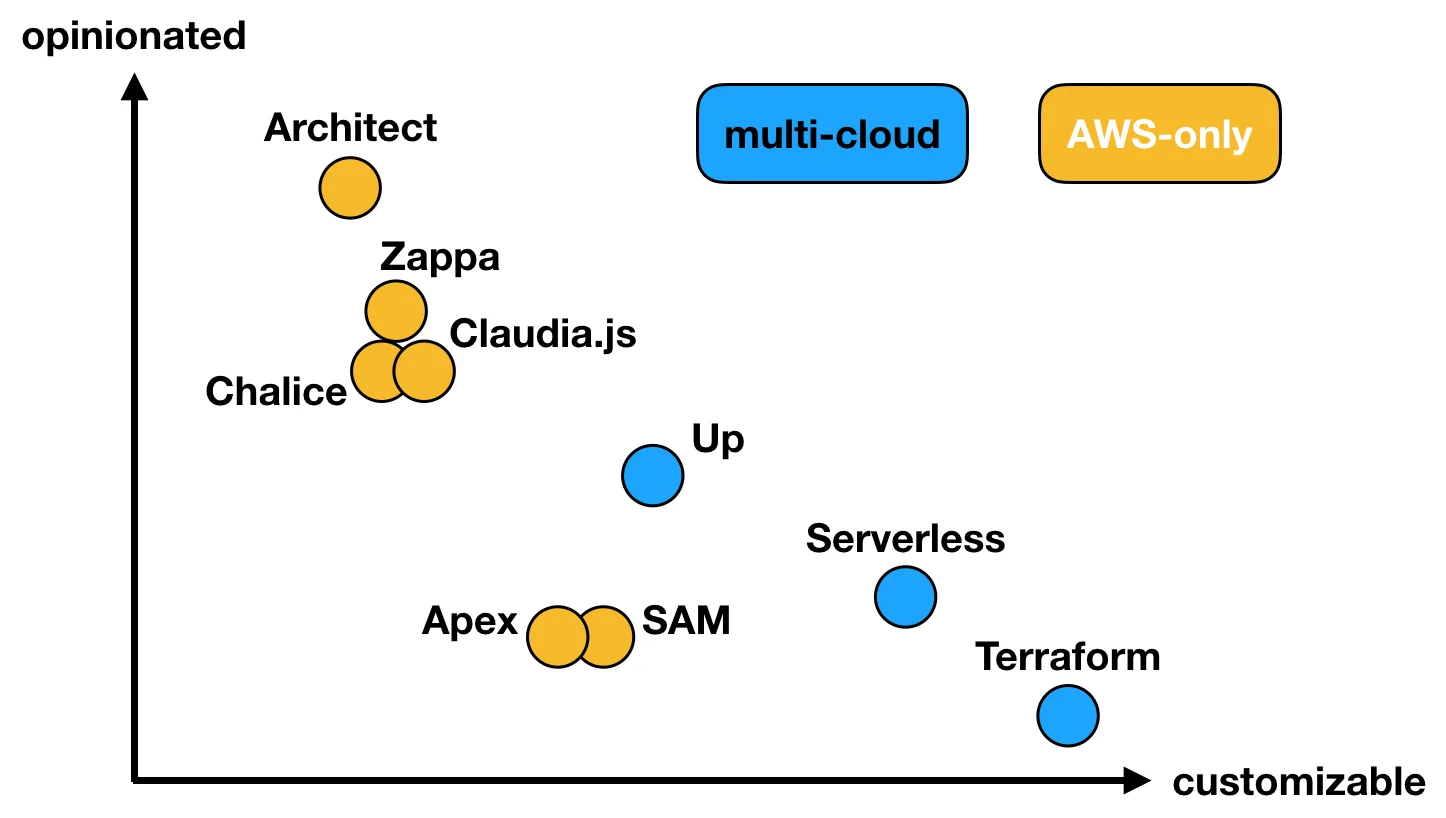

Yan Cui at Lumigo.io made this terrific infographic, which compares 9 serverless application frameworks and infrastructure management tools according to opinionatedness and customizability. This article discusses some of those technologies.

The trade-off between customizability and opinionatedness is that highly customizable frameworks require more code to do things that opinionated frameworks do more succinctly. On the other hand, very opinionated frameworks are more limited in their abilities. A classic example of an opinionated framework is Ruby on Rails, which is specifically designed for master/detail applications. Other types of applications should use a different framework, or no framework at all.

Two of the technologies on the above infographic are Zappa and Terraform, both of which I discuss in this article. Zappa is rather opinionated, while Terraform is very customizable.

AWS Cloud Development Kit (CDK)

AWS CDK provides a programmatic interface for modeling and provisioning cloud resources. Languages supported include Java, JavaScript, .NET, Node.js, Python and TypeScript.

Even if AWS is not directly the service provider, awareness of the AWS CDK is important because some other options, for example the Cloud Development Kit for Terraform (cdktf), are based on AWS CDK.

Chalice – Serverless Django on AWS

Chalice is an AWS open-source project that has good traction. This Python serverless microframework for AWS allows applications that use Amazon API Gateway and AWS Lambda to be quickly created and deployed.

The name and logo of this project are suggestive of the Holy Grail. I found the thinly veiled references to Christianity to be off-putting. Religious references have no place in a professional environment. Programmers who work with this project have religious icons, words and phrases continuously presented to them, and they must write words that are strongly identified with Christian doctrine in order to write software. This is forced indoctrination.

Django w/ Zappa & AWS Lambda

Zappa is a popular library for serverless web hosting of Python webapps.

Zappa allows Python WSGI webapps like Django to run on AWS Lambda instead of on an always-running server such as an AWS EC2 instance.

I am particularly interested in using Zappa to package and run django-oscar for AWS Lambda and CloudFront.
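The general idea of running a WSGI webapp on Lambda can be sketched in a few lines. This is not Zappa's actual code; it is a simplified illustration that assumes an API Gateway proxy-style event with `httpMethod`, `path` and `body` fields, and it ignores query strings, request headers and binary bodies. `simple_app` is a stand-in for a Django WSGI application.

```python
import io
import sys


def simple_app(environ, start_response):
    """A tiny WSGI app standing in for a Django WSGI application."""
    body = f"Hello from {environ['PATH_INFO']}".encode("utf-8")
    start_response("200 OK", [("Content-Type", "text/plain"),
                              ("Content-Length", str(len(body)))])
    return [body]


def lambda_handler(event, context, app=simple_app):
    """Translate a Lambda event into a WSGI call and return the response."""
    body = (event.get("body") or "").encode("utf-8")
    environ = {
        "REQUEST_METHOD": event.get("httpMethod", "GET"),
        "SCRIPT_NAME": "",
        "PATH_INFO": event.get("path", "/"),
        "QUERY_STRING": "",
        "SERVER_NAME": "lambda",
        "SERVER_PORT": "443",
        "SERVER_PROTOCOL": "HTTP/1.1",
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.version": (1, 0),
        "wsgi.url_scheme": "https",
        "wsgi.input": io.BytesIO(body),
        "wsgi.errors": sys.stderr,
        "wsgi.multithread": False,
        "wsgi.multiprocess": False,
        "wsgi.run_once": False,
    }
    captured = {}

    def start_response(status, headers, exc_info=None):
        # Remember the status line and headers that the app supplies.
        captured["status"] = status
        captured["headers"] = headers

    payload = b"".join(app(environ, start_response))
    return {
        "statusCode": int(captured["status"].split()[0]),
        "headers": dict(captured["headers"]),
        "body": payload.decode("utf-8"),
    }
```

The webapp never learns that it is running on Lambda; it only sees a normal WSGI request, which is why an existing Django project needs few or no changes.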

Zappa can perform two primary functions:

- Packaging – Zappa can build a Django webapp into an AWS Lambda package. The package can be delivered via other mechanisms, for example mechanisms that are not even Python aware.

- Deploying – Zappa can deploy a Django webapp to AWS Lambda, and configure several AWS services to feed events to the Django webapp.

… or use Zappa's package command to create an archive that is ready for upload to lambda and utilize the other helpful functions the project provides for use after code is deployed.

– from The Evolution of Maintainable Lambda Development Pt 2 by JBS Custom Software Solutions.
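Both packaging and deploying are driven by a `zappa_settings.json` file. The sketch below builds a minimal one in Python. The setting keys are ones that `zappa init` normally writes, but every value shown (stage name, settings module, bucket, region, runtime) is an assumption that would need to be replaced for a real project.

```python
import json


def zappa_settings(stage, django_settings, s3_bucket,
                   project_name, region="us-east-1", runtime="python3.8"):
    """Build a minimal Zappa settings dictionary for one deployment stage."""
    return {
        stage: {
            "aws_region": region,                # assumed region
            "django_settings": django_settings,  # dotted path of the settings module
            "project_name": project_name,
            "runtime": runtime,                  # assumed runtime
            "s3_bucket": s3_bucket,              # bucket used for uploading the package
        }
    }


# Hypothetical values for a django-oscar shop.
settings = zappa_settings("dev", "myshop.settings", "myshop-zappa-deploys", "myshop")
settings_json = json.dumps(settings, indent=4)
```

With `settings_json` saved as `zappa_settings.json`, running `zappa package dev` should build the archive without deploying it, and `zappa deploy dev` should build and deploy it.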

The Zappa documentation is excellent. The project has some rough edges, but the new regime coming on board seem competent and fired up. They have some work ahead to set things straight, but the technical path seems clear.

I think this project deserves special attention. Lots of moldy issues and PRs need to be processed, which a small team could get done fairly quickly. The project might also benefit from someone to hone the messaging. I opened an issue on the Zappa GitHub microsite to discuss this.

This seminal project has been around several years, and other well-known projects that have been developed since Zappa was first released have acknowledged that Zappa provided inspiration. Time to brush it up and set it straight again; its best days lie ahead!

Edgar Roman wrote this helpful document: Guide to using Django with Zappa.

I've been messing around with Zappa and will report back.

Videos

- This video has got all the right technologies mixed together for me: Serverless Deployment of a Django Project with AWS Lambda, Zappa, S3 and PostgreSQL.

Djambda / AWS Lambda / Terraform

Terraform does not impose a runtime dependency unless the realtime orchestration features are used.

Djambda is an example project that sets up a Django application in AWS Lambda, managed by Terraform. I intend to play with it and write up my experience right here Real Soon Now.

This project uses GitHub Actions to create environments for the master branch and pull requests. I wonder if this project can be used without GitHub Actions?

– From AWS CDK? Why not Terraform? by Wojciech Gawroński.

Serverless Framework with WSGI

The docs describe Serverless WSGI as:

I am concerned that the Serverless architecture requires an ongoing runtime dependency on the viability and good will of Serverless, Inc. Any hiccup on their part will immediately be felt by all their users. It would make me nervous to base daily operational infrastructure on this.

Bintray and JCenter Went Poof!

I do not want to rely upon online services from a software tool vendor to run my builds. The Scala community is still recovering from Bintray and JCenter shutting down. I had dozens of Scala libraries on Bintray. I do not plan to migrate them; they are gone from public access.

– JCenter shutdown impact on Gradle builds

Trading Autonomy for Minimal Convenience is a Poor Trade

Remember that free products are usually subject to change or termination without notice. Examples abound of companies whose free (and non-free) products suddenly ceased. There is no need to assume this type of vulnerability, so I block my metaphoric ears to the siren song that tempts trusting souls into assuming unnecessary dependencies, and I choose tooling that is completely under my control.

What happens if I exceed the fair use policy?

From the Serverless Pricing and Terms page.

We want to offer a lot of value for free so you can get your idea off the ground, before introducing any infrastructure cost. The intent of the fair use policy is to ensure that we can provide a high quality of service without incurring significant infrastructure costs. The great majority of users will fall well within range of the typical usage guidelines. While we reserve the right to throttle services if usage exceeds the fair use policy, we do not intend to do so as long as we can deliver a high quality of service without significant infrastructure costs. If you anticipate your project will exceed these guidelines, please contact our support team. We’ll work with you on a plan which scales well.

AWS AppRunner

AWS just announced AppRunner. I wonder how suitable it is...