Published 2017-07-28.

Time to read: 4 minutes.

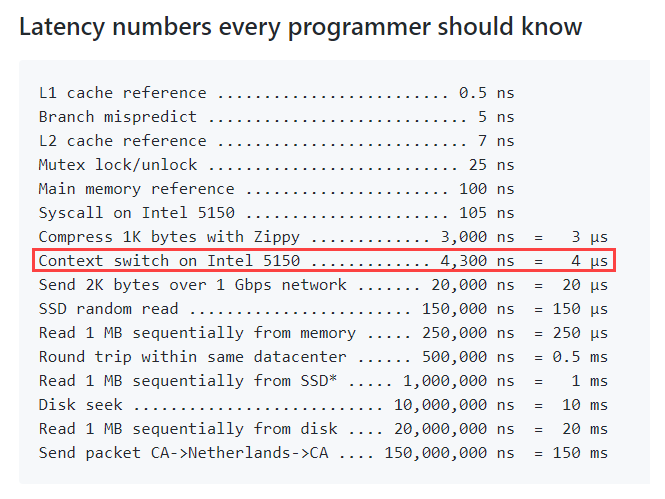

Context switches are expensive for CPUs to perform, and each incoming message in an Akka system requires a context switch when fairness (minimal latency deviation) is important [1]. In contrast, Apache Kafka Streams offers consistent processing times without requiring context switches, so Kafka Streams produces significantly higher throughput than Akka can when contending with a high volume of small computations that must be applied fairly.

Fairness

“Fairness” in a streaming system is a measure of how evenly computing resources are applied to each incoming message. A fair system is characterized by consistent and predictable latency for the processing of each message. An emergent effect of fair systems is that messages are journaled, processed, and transformed for downstream computation in approximately the order received. The output of a fair system roughly matches input in real time; a perfectly fair system would provide a perfect correspondence between input messages and output messages.

“Fair enough” systems have some acceptable amount of jitter in the ordering of input vs. output messages.
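One way to make “fair enough” concrete is to measure how far messages move between their input and output positions, and how much per-message latency varies. The Java sketch below is only an illustration: `FairnessMetrics`, `maxDisplacement`, and `latencyStdDev` are names invented for this example, and it assumes every input message appears exactly once in the output.

```java
import java.util.List;

/** Hypothetical helper for illustration; not part of Akka or Kafka. */
final class FairnessMetrics {

    /**
     * Largest distance between a message's position in the input
     * and its position in the output. 0 means order was preserved.
     * Assumes outputOrder is a permutation of inputOrder.
     */
    static int maxDisplacement(List<Integer> inputOrder, List<Integer> outputOrder) {
        int max = 0;
        for (int i = 0; i < inputOrder.size(); i++) {
            int outIndex = outputOrder.indexOf(inputOrder.get(i));
            max = Math.max(max, Math.abs(outIndex - i));
        }
        return max;
    }

    /** Population standard deviation of per-message latencies. */
    static double latencyStdDev(double[] latencies) {
        if (latencies.length == 0) {
            throw new IllegalArgumentException("need at least one latency");
        }
        double mean = 0;
        for (double l : latencies) {
            mean += l;
        }
        mean /= latencies.length;
        double sumSq = 0;
        for (double l : latencies) {
            sumSq += (l - mean) * (l - mean);
        }
        return Math.sqrt(sumSq / latencies.length);
    }
}
```

Under this sketch, a perfectly fair system would score 0 on both measures, and a “fair enough” system would stay below whatever thresholds the application can tolerate.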

You might expect that streaming systems are generally fair, but systems based on Akka rarely are because of the implementation details of Akka's multithreaded architecture. Instead, Akka-based systems enqueue incoming messages for each actor, and the Akka scheduler periodically initiates the processing of each actor's input message queue. This is actually a type of batch processing. The reason Akka does this is so the high cost of CPU context switches can be amortized over several messages, to keep system throughput at an acceptable rate.
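The trade-off described above can be illustrated with a toy model. The Java sketch below is not Akka code: `MailboxBatchingModel` and its members are hypothetical, only the `throughput` parameter is named after the dispatcher setting quoted in [1], and it assumes all messages have already arrived before a single thread starts visiting the mailboxes in round-robin order.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy model of per-actor mailboxes served in batches; not Akka code. */
final class MailboxBatchingModel {

    /** A message addressed to an actor; seq is its global arrival position. */
    static final class Message {
        final String actor;
        final int seq;

        Message(String actor, int seq) {
            this.actor = actor;
            this.seq = seq;
        }
    }

    /** Processing order plus the number of times an actor was given the thread. */
    static final class Result {
        final List<Integer> order = new ArrayList<>();
        int turns = 0;
    }

    static Result run(List<Message> arrivals, int throughput) {
        if (throughput < 1) {
            throw new IllegalArgumentException("throughput must be >= 1");
        }
        // Enqueue every message in the mailbox of its target actor.
        Map<String, Deque<Integer>> mailboxes = new LinkedHashMap<>();
        for (Message m : arrivals) {
            mailboxes.computeIfAbsent(m.actor, k -> new ArrayDeque<>()).add(m.seq);
        }
        Result result = new Result();
        boolean pending = true;
        while (pending) {
            pending = false;
            for (Deque<Integer> mailbox : mailboxes.values()) {
                if (mailbox.isEmpty()) {
                    continue;
                }
                result.turns++; // each turn stands in for one task switch
                for (int i = 0; i < throughput && !mailbox.isEmpty(); i++) {
                    result.order.add(mailbox.poll());
                }
                if (!mailbox.isEmpty()) {
                    pending = true;
                }
            }
        }
        return result;
    }
}
```

For eight messages that alternate between two actors (arrival positions 0 through 7), a `throughput` of 1 processes them in arrival order but needs 8 turns, while a `throughput` of 4 needs only 2 turns and processes them in the order 0, 2, 4, 6, 1, 3, 5, 7. Fewer switches are bought with less fairness.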

Context Switching

It is desirable for the primary type of context switching in Akka systems to be between tasks on a thread because other types of context switching are more expensive. However, switching tasks on a thread costs a surprisingly large number of CPU cycles. To put this into context, the accompanying table shows various latencies compared to the amount of time necessary for a context switch on an Intel Xeon 5150 CPU [2].

As you can see, a lot of computing can be done during the time that a CPU performs a context switch. My Intermediate Scala course has several lectures that explore multithreading in great detail, with lots of code examples.

Building Distributed OSes is Expensive and Hard

Akka is rather low-level, and the actor model it is built around was originally conceived 45 years ago. Applications built using Akka require a lot of custom plumbing. Akka applications are rather like custom-built distributed operating systems spanning multiple layers of the OSI model, where application-layer logic is intertwined with transport-layer, session-layer and presentation-layer logic.

Debugging and tuning a distributed system is inherently difficult. Building an operating system that spans multiple network nodes and continues to operate properly in the face of network partitions is even harder. Because each Akka application is unique, customers find distributed systems built with Akka to be expensive to maintain.

Kafka vs. Akka

For most software engineering projects, it is better to use vendor-supplied or open-source libraries for building distributed systems because the library maintainers can amortize the maintenance cost over many systems. This allows users to focus on their problem, instead of having to develop and maintain the complex and difficult-to-understand plumbing for their distributed application.

Akka is a poor choice for apps that need to do small amounts of computation on high volumes of messages where latency must be minimal and roughly equal for all messages. Kafka Streams is a better choice for those types of applications because programmers can focus on the problem at hand, without having to build and maintain a custom distributed operating system. KTable is also a nice abstraction to work with.

Motivation

EmpathyWorks™ is a platform for modeling human emotions and network effects amongst groups of people as events impinge upon them. Each incoming event has the potential to cause a cascade of second-order events, which are spread throughout the network via people's relationships with one another. The actual processing required for each event is rather small, but given that the goal is to model everyone on earth (all 7.5 billion people), this is a huge computation to continuously maintain. For EmpathyWorks to function properly, incoming messages must be processed somewhat fairly.

KTables and Compacted Topics

Kafka Streams makes it easy to transform one or more immutable streams into another immutable stream. A KTable is an abstraction of a changelog stream, where each record represents an update. Instead of using actors to track the state of multiple entities, a KTable provides an analogy to database tables, where the rows in a table contain the current state of each entity. Kafka is responsible for dealing with network partitions and data collisions, so the application layer does not become polluted with lower-layer concerns.

Kafka’s compacted topics are a unique type of stream that only maintains the most recent message for each key. This produces something like a materialized or table-like view of a stream, with up-to-date values (subject to eventual consistency) for all key/value pairs in the log.
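The relationship between a changelog, its compacted form, and the table view can be sketched in a few lines of plain Java. This is a simplified model, not the Kafka API: `CompactionModel`, `Record`, `compact`, and `toTable` are hypothetical names, the keys and values in the usage note are made up, the log is assumed to be in offset order, and deletion markers (tombstones) are left out.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Simplified model of a compacted log; does not use the Kafka client library. */
final class CompactionModel {

    /** One keyed update in the log. */
    static final class Record {
        final long offset;
        final String key;
        final String value;

        Record(long offset, String key, String value) {
            this.offset = offset;
            this.key = key;
            this.value = value;
        }
    }

    /** Keeps only the most recent record for each key, preserving offset order. */
    static List<Record> compact(List<Record> log) {
        Map<String, Record> latest = new LinkedHashMap<>();
        for (Record r : log) {
            latest.remove(r.key); // re-insert so survivors stay in offset order
            latest.put(r.key, r);
        }
        return new ArrayList<>(latest.values());
    }

    /** Table-like view: the current value for every key in the log. */
    static Map<String, String> toTable(List<Record> log) {
        Map<String, String> table = new HashMap<>();
        for (Record r : log) {
            table.put(r.key, r.value); // later updates overwrite earlier ones
        }
        return table;
    }
}
```

Given the updates `alice=calm`, `bob=happy`, `alice=anxious`, `bob=sad`, `carol=calm` at offsets 0 through 4, `compact` keeps only the records at offsets 2, 3, and 4, and `toTable` returns the same map for the full log and for the compacted log. That equivalence is what lets a compacted topic stand in for a table.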

[1] From the Akka blog: “... pay close attention to your ‘throughput’ setting on your dispatcher. This defines thread distribution ‘fairness’ in your dispatcher – telling the actors how many messages to handle in their mailboxes before relinquishing the thread so that other actors do not starve. However, a context switch in CPU caches is likely each time actors are assigned threads, and warmed caches are one of your biggest friends for high performance. It may behoove you to be less fair so that you can handle quite a few messages consecutively before releasing it.”

[2] Taken from Latency numbers every programmer should know. The Intel Xeon 5150 was released in 2006; for more up-to-date information about CPU context switch time, please see this Quora answer. Although CPUs have gotten slightly faster since 2006, networks and SSDs have gotten much faster, so the relative latency penalty has actually increased over time.